I think it doesn’t come up often here because people don’t get that far, maybe… or their AI is so simple/game-specific that it’s just cobbled together as whatever they needed.

I know that’s often true in my case… all of it.

Which isn’t to say that an ES isn’t ever involved in the AI. But you should be careful to know the exact reasons why. For example, in Mythruna, I can store general “user data” style properties on an entity. I use this in my object scripts to set/retrieve script-specific info. I do this for convenience because the ES data is already persisted… but the scripts are the only system to use it, these components are never retrieved in an “ES” way, etc… They are technically not part of the ES managed game state in that sense.

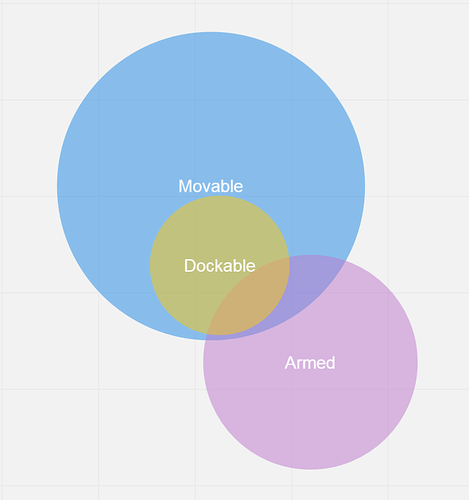

And sometimes it might even make sense to expose state to the ES if it means you can get nice bulk operations. Let me morph your Armed example into something I can use to illustrate the different approaches and their trade-offs.

Let’s say that “Armed” represents some state that says the gun can shoot. This is because it has charged up or collected enough resources or is not in disrepair or some other complex state conditions. The state needs to be complicated in this way, or else there is likely no point in having an Armed component at all.

In that example, we have a few choices…

In a behavior system, when it is time to check for a behavior transition (either because one behavior has ended or because our behavior is constantly checking for some interruptible conditions) we would check all of the necessary prerequisites for our gun to decide if we transition into the “shooting the bad guys” behavior.

In a state machine style system, one of the exit conditions for our current state might be to check to see if we are armed, performing similar logic to the above based on the type of gun we have.

In both cases, there may be a whole bunch of states/behaviors that never check for this. For example, the “find health now at all costs” behavior is likely to override anything else and not bother checking for “am I armed right now” and transitioning to any of the “kill the bad guy” behaviors.
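A rough sketch of the "just check it when you need it" approach described above. Everything here is made up for illustration (the `Gun` fields, the `canShoot()` thresholds, the `Behavior` names); it is not Mythruna code or any particular library's API.

```java
// Hypothetical gun state; field names and thresholds are assumptions.
final class Gun {
    final int ammo;
    final double charge; // 0.0 to 1.0
    final double repair; // 0.0 to 1.0

    Gun(int ammo, double charge, double repair) {
        this.ammo = ammo;
        this.charge = charge;
        this.repair = repair;
    }

    // "Armed" computed on demand from the underlying data, no component needed.
    boolean canShoot() {
        return ammo > 0 && charge >= 1.0 && repair >= 0.5;
    }
}

final class BehaviorChooser {
    enum Behavior { FIND_HEALTH, SHOOT_BAD_GUYS, IDLE }

    // Called whenever the AI checks for a behavior transition.
    static Behavior next(double health, Gun gun) {
        if (health < 0.25) {
            // Overrides everything else and never looks at the gun.
            return Behavior.FIND_HEALTH;
        }
        if (gun.canShoot()) {
            return Behavior.SHOOT_BAD_GUYS;
        }
        return Behavior.IDLE;
    }
}
```

Note that only the behaviors that care about the gun ever pay for the check.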

The idea of being “Armed” in a component sense is duplicate information that is already implicit in the data elsewhere. For example, it’s reflecting ‘loaded’ state or ‘in good repair’, etc… However, as the number of conditions that roll up into an “Armed” state grows, it may be desirable to compute them in systems that can efficiently operate on a ton of small entities all at once. Be careful, though: this flexibility spirals fast.

One system could watch for ammo counts and set the FullAmmo component. Another system could watch for the power level and set the FullCharge component. Another system could watch for the repair level and set the FullyFunctional component. If you still deem it necessary to have an Armed component that rolls these up then another system could have different entity sets for all of those and set Armed for every entity that is in all sets. (Easily checked with three loops.)
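A minimal sketch of that roll-up, assuming plain `Set<Long>` collections of entity ids stand in for the three entity sets. The `ArmedRollup` class and its `armed` method are hypothetical names for illustration, not an actual ES API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

final class ArmedRollup {
    // Returns the ids present in all three sets, using one loop per set.
    static Set<Long> armed(Set<Long> fullAmmo,
                           Set<Long> fullCharge,
                           Set<Long> fullyFunctional) {
        Map<Long, Integer> hits = new HashMap<>();

        // Loop 1: every entity with full ammo starts with one hit.
        for (Long id : fullAmmo) {
            hits.put(id, 1);
        }
        // Loop 2: bump only the entities already seen in loop 1.
        for (Long id : fullCharge) {
            hits.computeIfPresent(id, (key, count) -> count + 1);
        }
        // Loop 3: an entity with two prior hits is in all three sets.
        Set<Long> result = new TreeSet<>();
        for (Long id : fullyFunctional) {
            Integer count = hits.get(id);
            if (count != null && count == 2) {
                result.add(id);
            }
        }
        return result;
    }
}
```

In a real system you would set or remove the Armed component on those entities rather than return a set, and you would probably only react to changes instead of rescanning everything each frame.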

Alternately, you could flip it over and have all guns be armed by default unless specifically “Off”. In that case, you flip each of the systems and still combine into an “Off” state.

Alternately alternately, you treat “On/Off” as a debuff… in the sense that each of those three systems is adding an “Off” debuff entity. Then the “Off debuff” system sets the “Off” on the target entity for any entity it finds pointed to by the off debuff. Then you can collapse out the system that combines the specific “Loaded”, “Charged”, etc. components into a more general off debuff system. (It also makes more sense to debuff since any of those negative conditions means ‘off’ rather than having to combine many ‘on’ conditions into an AND.)
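Here is one way the debuff version could look, as a sketch only. The `Debuff` class, its `target` and `reason` fields, and `offTargets` are all invented for this example; a real ES would model the debuff as its own entity with a component pointing at the target.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.Set;

final class OffDebuffs {
    // Hypothetical debuff entity: points at a gun and says why it is off.
    static final class Debuff {
        final long target;
        final String reason;

        Debuff(long target, String reason) {
            this.target = target;
            this.reason = reason;
        }
    }

    // The "Off debuff" system: any gun with at least one debuff is off.
    // This is an OR over negative conditions, so no per-condition sets
    // need to be intersected.
    static Set<Long> offTargets(Collection<Debuff> debuffs) {
        Set<Long> off = new HashSet<>();
        for (Debuff d : debuffs) {
            off.add(d.target);
        }
        return off;
    }
}
```

Adding a new reason for a gun to be off then only means one more system that creates debuffs; the system that marks targets as off does not change.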

Anyway, if you have a hundred thousand entities and the state transition between Armed and not Armed happens often… and the AI will be checking that frequently… then the above might make sense. If the rolled up conditions are few, the transitions relatively infrequent, or the AI checks relatively infrequent, then it may not make sense.

It’s probably also important to point out that the same state applies to the player’s gun.

So, either the player and AI are checking for a few conditional states before they know they can fire… or you have a handful of systems monitoring these and the player/AI are just looking at the “Off” state of their gun.

Point being: the player is just another actor sharing the same state… which means we know we haven’t done anything wrong. Whether it’s overly complicated or overly flexible/overly engineered for the task is dependent on the factors already mentioned.