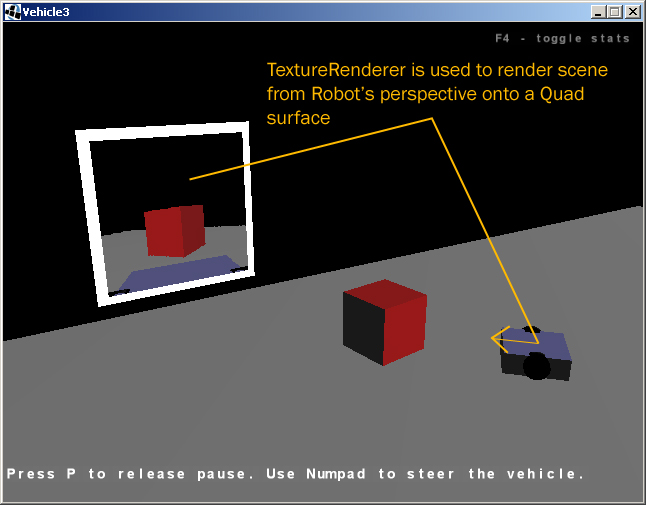

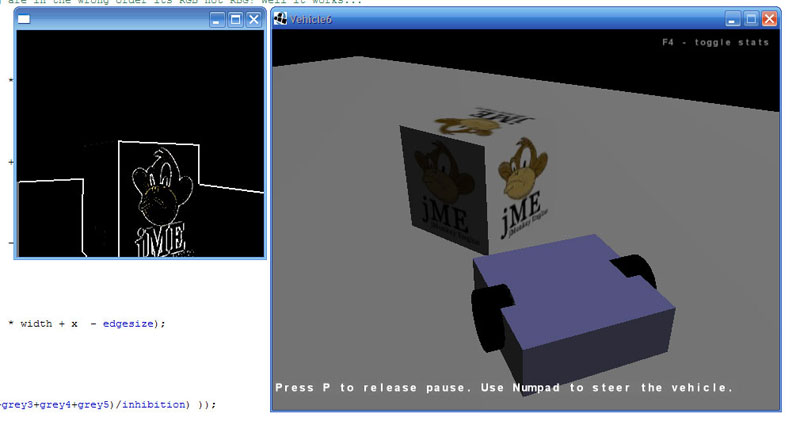

Finally got it working :D I'm now offscreen-rendering the robot's perspective, applying post-processing (in this case lateral inhibition/edge detection), and displaying the result in a separate SWT Shell.

If anyone else needs help with this, I think I can help now. Thanks again to everyone who helped me.

This is what I did in simpleInitGame() to create the OffscreenRenderer:

    /* Create the OffscreenRenderer */
    osRenderer = ((LWJGLDisplaySystem) display).createOffscreenRenderer(320, 320);
    osRenderer.setBackgroundColor(new ColorRGBA(0f, 0f, 0f, 1f));
    if (osRenderer.isSupported()) {
        osRenderer.getCamera().setFrustumPerspective(45f, 4f/3f, 1, 200);
        osRenderer.getCamera().setLocation(new Vector3f(0, 0, 75f));
    } else {
        //LOGGER.debug("Offscreen rendering is not supported!");
    }

    /* I attached it to a Node so I can make that node follow the Vehicle */
    camNodeOS = new CameraNode("Camera Node Offscreen", osRenderer.getCamera());
    camNodeOS.setLocalTranslation(new Vector3f(0, 50, -50));
    camNodeOS.updateGeometricState(0, true);
    camNodeOS.setLocalTranslation(-1, 2, 0);
    Quaternion q2 = new Quaternion().fromAngleNormalAxis(90 * FastMath.DEG_TO_RAD, new Vector3f(0, 1, 0));
    camNodeOS.setLocalRotation(q2);

    // Attach the CameraNode to the Vehicle
    vehicle.attachChild(camNodeOS); // Remember "vehicle" is attached to the rootNode
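
For anyone unsure what the 90 degree rotation about the Y axis does to the camera's view direction, here is a small plain-Java sketch of the math, with no jME classes involved. The class and method names (`RotationSketch`, `fromAngleAxis`, `rotate`) are made up for this illustration. It assumes the usual right-handed quaternion convention and that a CameraNode looks along its local +Z axis; treat both as assumptions and check them against your jME version.

```java
public class RotationSketch {

    /** Builds a unit quaternion (w, x, y, z) for a rotation of angleRad about a unit axis. */
    public static float[] fromAngleAxis(float angleRad, float ax, float ay, float az) {
        float s = (float) Math.sin(angleRad / 2);
        return new float[] {(float) Math.cos(angleRad / 2), ax * s, ay * s, az * s};
    }

    /** Rotates vector v by the unit quaternion (w, x, y, z) and returns a new float[3]. */
    public static float[] rotate(float w, float x, float y, float z, float[] v) {
        // t = 2 * (q_vec x v)
        float tx = 2f * (y * v[2] - z * v[1]);
        float ty = 2f * (z * v[0] - x * v[2]);
        float tz = 2f * (x * v[1] - y * v[0]);
        // v' = v + w * t + (q_vec x t)
        return new float[] {
            v[0] + w * tx + (y * tz - z * ty),
            v[1] + w * ty + (z * tx - x * tz),
            v[2] + w * tz + (x * ty - y * tx)
        };
    }

    public static void main(String[] args) {
        // Same angle and axis as q2 above: 90 degrees about (0, 1, 0)
        float[] q = fromAngleAxis((float) Math.toRadians(90), 0f, 1f, 0f);
        // Assumed default view direction of the camera node: local +Z
        float[] dir = rotate(q[0], q[1], q[2], q[3], new float[] {0f, 0f, 1f});
        System.out.println(dir[0] + " " + dir[1] + " " + dir[2]);
    }
}
```

Under those assumptions the rotated direction ends up along the vehicle's local +X axis (up to floating-point rounding), so the offscreen camera looks out sideways relative to the vehicle's own +Z.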

Here is the code in simpleInitGame() that creates a second shell to display the result of processing the offscreen image. The "image" is an org.eclipse.swt.graphics.Image field that is accessible to both simpleRender() and simpleInitGame().

    display2 = new Display();
    shell = new Shell(display2, SWT.SHELL_TRIM | SWT.DOUBLE_BUFFERED);
    shell.setSize(320, 320);
    shell.addPaintListener(new PaintListener() {
        public void paintControl(PaintEvent e) {
            if (image != null) {
                e.gc.drawImage(image, 0, 0);
            }
        }
    });
    shell.open();

In simpleRender() I tell the OffscreenRenderer to render and then retrieve the image data from it:

    osRenderer.render(rootNode);
    IntBuffer buffer = osRenderer.getImageData();

    // Create a new ImageData object for the retrieved (and possibly modified) pixels
    imgData = new ImageData(width, height, 32, new PaletteData(0xFF0000, 0x00FF00, 0x0000FF));

    /* Later I access the pixels via */
    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            // Read the rows in reverse order to flip the image vertically
            int p = buffer.get((height - y - 1) * width + x);

            /* Do Postprocessing on Pixels Here */

            // Write the pixels to the new imgData object
            imgData.setPixel(x, y, p);
        }
    }
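
One thing to keep in mind for the post-processing step: an edge filter needs each pixel's neighbours, so it is easier to copy the buffer into an array first and filter that, rather than working on a single `p` inside the loop. Below is a minimal plain-Java sketch of that idea. It is not the exact filter I used; it approximates lateral inhibition with a simple centre-surround (Laplacian-style) kernel. The class and method names (`EdgeSketch`, `toGrayTopDown`, `lateralInhibition`) are made up for illustration, and it assumes the pixels are packed as 0xRRGGBB in the low 24 bits, matching the PaletteData masks above.

```java
import java.nio.IntBuffer;

public class EdgeSketch {

    /** Copies a bottom-up pixel buffer into a top-down grayscale array (values 0..255). */
    public static int[] toGrayTopDown(IntBuffer buffer, int width, int height) {
        int[] gray = new int[width * height];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // Same vertical flip as in the loop above
                int p = buffer.get((height - y - 1) * width + x);
                int r = (p >> 16) & 0xFF;
                int g = (p >> 8) & 0xFF;
                int b = p & 0xFF;
                gray[y * width + x] = (r + g + b) / 3;
            }
        }
        return gray;
    }

    /** Each pixel is excited by itself and inhibited by its four direct neighbours. */
    public static int[] lateralInhibition(int[] gray, int width, int height) {
        int[] out = new int[width * height]; // border pixels are left at 0 (black)
        for (int y = 1; y < height - 1; y++) {
            for (int x = 1; x < width - 1; x++) {
                int i = y * width + x;
                int v = 4 * gray[i]
                        - gray[i - 1] - gray[i + 1]
                        - gray[i - width] - gray[i + width];
                int c = Math.min(255, Math.abs(v));   // clamp the response to 0..255
                out[i] = (c << 16) | (c << 8) | c;    // pack as a gray 0xRRGGBB pixel
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Tiny 3x3 test image: one bright pixel in the centre of a black field
        int[] pixels = new int[9];
        pixels[4] = 0xFFFFFF;
        int[] gray = toGrayTopDown(IntBuffer.wrap(pixels), 3, 3);
        int[] edges = lateralInhibition(gray, 3, 3);
        System.out.println(Integer.toHexString(edges[4]));
    }
}
```

With this approach the loop above reduces to writing the filtered values, for example `imgData.setPixel(x, y, edges[y * width + x])`, since the flip has already happened in `toGrayTopDown`.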

    // Then update the secondary shell using the new "imgData"

    // Dispose of the previous Image to free its resources
    if (image != null) {
        image.dispose();
    }

    // Create the new Image from the ImageData
    image = new org.eclipse.swt.graphics.Image(display2, imgData);

    // Redraw the shell we created in simpleInitGame()
    if (!shell.isDisposed()) {
        shell.redraw();
    }