@pspeed :

OK, but if jME already sorts by material, why is rendering 200 geometries with the same material slower (a lot slower) than rendering a BatchNode of those same 200 geometries? At least it was the last time I checked; maybe that’s no longer true.

> But when you batch you only have one object… so where would the light level live if not in the mesh?

Well, I agree with that, but… why not store it, not in a material, but in a plain variable (so it never triggers a shader recompilation and the current context can be left exactly as it is)?

I think telling the graphics card “use this single variable in the shader” costs less than “use this buffer where every vertex holds the same value, interpolate between those identical values, etc.”

Something like `mesh.setSingleFloat(...)` (the way we already have `setBuffer`).

Or even define a kind of “user” buffer. The user would define constants like this:

const int the_index_used_for_light_level = 0;

const int the_foo_used_for_bar = 1;

etc.

Then they bind their buffer with the light level in slot 0, the foo in slot 1, etc.
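To make the “user buffer” idea concrete, here is a minimal Java sketch of the CPU side only. Nothing here is an existing jME API: `UserParams`, its slot constants and `SLOT_COUNT` are hypothetical names. It assumes the values would reach the shader as one uniform float array, indexed there with matching `const int` values, so changing a value never selects a different shader variant.

```java
import java.util.Arrays;

/**
 * Hypothetical per-geometry parameter block: a fixed number of float slots
 * addressed by constant indices shared with the shader. Not a jME class.
 */
final class UserParams {
    // Slot indices agreed between Java code and shader (hypothetical names).
    static final int LIGHT_LEVEL = 0;
    static final int FOO = 1;
    static final int SLOT_COUNT = 4;

    private final float[] values = new float[SLOT_COUNT];

    /** Stores a value in a slot; rejects indices outside the block. */
    void set(int slot, float value) {
        if (slot < 0 || slot >= SLOT_COUNT) {
            throw new IndexOutOfBoundsException("slot " + slot);
        }
        values[slot] = value;
    }

    float get(int slot) {
        return values[slot];
    }

    /** Returns a copy of the whole block, e.g. to upload as one uniform array. */
    float[] toArray() {
        return Arrays.copyOf(values, SLOT_COUNT);
    }
}
```

The design choice is that the layout (slot count and meaning of each index) is fixed up front, so only the data changes between geometries, never the shader source.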

It may look like I’m trying to re-create what a material already does, and that’s not entirely wrong, but it’s because material swapping costs so much, and it’s so easy to kill the framerate just by having a lot of geometries in the scene. I know you shouldn’t have a lot of geometries, but that’s where the “flow” of the engine leads you.

And people end up with texture atlases, tricks with texcoords, and buffers and textures used for things they aren’t meant for (for example, I’m using a texture as a palette in my code). People try to inject data through channels other than the material because the material’s main purpose (giving data to the shader) costs so much. Part of that cost comes from conditional compilation: because the value of a boolean can completely change the shader code, you can’t assume that two materials differing in only one value can be swapped just by changing that value.
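For the palette trick mentioned above, here is a rough Java sketch of the CPU side, assuming Doom’s PLAYPAL layout (consecutive palettes of 256 RGB triples, 768 bytes each). `PaletteTexture` and its methods are hypothetical helpers; wrapping the buffer into an actual texture depends on your jME version and is left out. A shader would then sample the resulting 256x1 texture at u = (index + 0.5) / 256.

```java
import java.nio.ByteBuffer;

/** Hypothetical helper: converts a PLAYPAL-style RGB palette into 256x1 RGBA texture data. */
final class PaletteTexture {
    static final int COLORS = 256;
    static final int RGB_PALETTE_SIZE = COLORS * 3; // 768 bytes per palette

    /** Builds RGBA bytes (256 texels, 4 bytes each) from palette number {@code paletteIndex}. */
    static ByteBuffer toRgba(byte[] playpal, int paletteIndex) {
        int offset = paletteIndex * RGB_PALETTE_SIZE;
        if (offset < 0 || offset + RGB_PALETTE_SIZE > playpal.length) {
            throw new IllegalArgumentException("no palette " + paletteIndex);
        }
        ByteBuffer rgba = ByteBuffer.allocateDirect(COLORS * 4);
        for (int i = 0; i < COLORS; i++) {
            rgba.put(playpal[offset + i * 3]);     // R
            rgba.put(playpal[offset + i * 3 + 1]); // G
            rgba.put(playpal[offset + i * 3 + 2]); // B
            rgba.put((byte) 0xFF);                 // A: fully opaque
        }
        rgba.flip(); // ready to be read from position 0
        return rgba;
    }

    /** CPU equivalent of the shader lookup: color index -> packed 0xAARRGGBB. */
    static int lookupArgb(ByteBuffer rgba, int colorIndex) {
        int base = (colorIndex & 0xFF) * 4;
        int r = rgba.get(base) & 0xFF;
        int g = rgba.get(base + 1) & 0xFF;
        int b = rgba.get(base + 2) & 0xFF;
        int a = rgba.get(base + 3) & 0xFF;
        return (a << 24) | (r << 16) | (g << 8) | b;
    }
}
```

Because the palette lives in a texture, switching palettes (e.g. damage tint) only means binding a different texture, not changing any material define.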

But I’m talking too much; once again, this would fit better in a dedicated thread.

And maybe it’s already possible to do that; I’m not a jME expert (even though I’ve used it regularly for several years).

> Any plans to make it compatible with user-created WADs (e.g. Brutal Doom)?

I don’t plan to re-create Doom, not even vanilla Doom. I’m only trying to create a basic library that would be a good “starting point” for anyone wanting to recreate it. If I can handle animation, fix every texture bug, and give access to all the data in a usable and handy way… well, that would be great, and my “job” there would be done.

In particular, I don’t plan to re-create Doom’s A.I. Since it doesn’t exist in the WAD, I would need to redo everything from scratch, with a lot of reverse engineering, and… well, nope.

I do plan to implement “portals” (nothing to do with the game of that name; read on) to display only the sectors that are actually in view. It will be less efficient than the “subsectors + BSP tree” structure stored in the WAD, but still better than displaying everything all the time.
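The portal idea can be sketched as a breadth-first walk over the sector adjacency graph: start from the sector containing the camera and cross into a neighbour only through a portal that passes a visibility test. `PortalCulling`, `Portal` and the caller-supplied predicate are hypothetical; treating a two-sided linedef as a portal is an assumption about how the WAD data would be mapped. Testing every portal against the full view frustum is conservative; a tighter version would narrow the frustum to each portal’s extent while recursing, as classic portal renderers do.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Predicate;

/** Hypothetical portal-based sector culling over a sector adjacency graph. */
final class PortalCulling {
    /** A portal joins two sectors (assumed: a two-sided linedef). */
    record Portal(int id, int sectorA, int sectorB) {
        int other(int sector) { return sector == sectorA ? sectorB : sectorA; }
    }

    /** Returns the ids of sectors that may be visible from {@code startSector}. */
    static Set<Integer> visibleSectors(int startSector,
                                       Map<Integer, List<Portal>> portalsBySector,
                                       Predicate<Portal> portalVisible) {
        Set<Integer> visible = new LinkedHashSet<>();
        Deque<Integer> queue = new ArrayDeque<>();
        visible.add(startSector); // the camera's own sector is always drawn
        queue.add(startSector);
        while (!queue.isEmpty()) {
            int sector = queue.poll();
            for (Portal p : portalsBySector.getOrDefault(sector, List.of())) {
                int next = p.other(sector);
                // Skip sectors already reached and portals that fail the view test.
                if (!visible.contains(next) && portalVisible.test(p)) {
                    visible.add(next);
                    queue.add(next);
                }
            }
        }
        return visible;
    }
}
```

The predicate would wrap whatever bounds-versus-frustum test is already available, so the traversal itself stays independent of the camera code.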

And I could try to implement the DECORATE language used by ZDoom and Brutal Doom. I’ve already implemented several languages (with the byaccj library, bison, and yacc for Java).

But recreating Doom is not in my plans, and never has been.

Sorry.

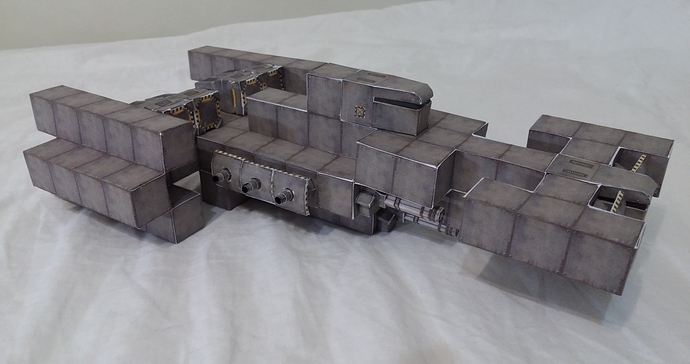

(And if anyone wants to know: work in progress, fixing bugs, clarifying code, etc.)
