Hi everyone,

I’ve recently been working on improving the sound system in the demo I’m developing. As far as I can tell, the current jME API does not allow plugging in a custom AudioRenderer beyond the existing LWJGL and JOAL implementations, which is a significant limitation. Extending the current AudioRenderer also seems to require modifying the engine’s core code, which is difficult given the complexity of the existing source.

JmeDesktopSystem.newAudioRenderer()

    // API doesn't really allow for writing custom AudioRenderer
    // implementations using AppSettings.
    @Override
    public AudioRenderer newAudioRenderer(AppSettings settings) {
        initialize(settings);

        AL al;
        ALC alc;
        EFX efx;
        if (settings.getAudioRenderer().startsWith("LWJGL")) {
            al = newObject("com.jme3.audio.lwjgl.LwjglAL");
            alc = newObject("com.jme3.audio.lwjgl.LwjglALC");
            efx = newObject("com.jme3.audio.lwjgl.LwjglEFX");
        } else if (settings.getAudioRenderer().startsWith("JOAL")) {
            al = newObject("com.jme3.audio.joal.JoalAL");
            alc = newObject("com.jme3.audio.joal.JoalALC");
            efx = newObject("com.jme3.audio.joal.JoalEFX");
        } else {
            throw new UnsupportedOperationException(
                    "Unrecognizable audio renderer specified: "
                    + settings.getAudioRenderer());
        }

        if (al == null || alc == null || efx == null) {
            return null;
        }
        return new ALAudioRenderer(al, alc, efx);
    }
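To illustrate the limitation, here is a rough sketch of how the hard-coded LWJGL/JOAL branch could be replaced by a small registry that maps the settings string to a factory. Everything in it is hypothetical: `AudioBackend`, `AudioBackendRegistry` and the `"MyAL"` name are made up for this example and are not part of jME.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical stand-in for an audio renderer; not a jME type. */
interface AudioBackend {
    String name();
}

/** Hypothetical registry: maps a name prefix to a factory for a backend. */
final class AudioBackendRegistry {
    private final Map<String, Supplier<AudioBackend>> factories = new LinkedHashMap<>();

    /** Registers a factory for every settings string that starts with the prefix. */
    public void register(String prefix, Supplier<AudioBackend> factory) {
        factories.put(prefix, factory);
    }

    /** Same startsWith() matching as newAudioRenderer(), but without a fixed list. */
    public AudioBackend create(String requested) {
        if (requested == null) {
            return null; // a null renderer setting means audio is disabled
        }
        for (Map.Entry<String, Supplier<AudioBackend>> e : factories.entrySet()) {
            if (requested.startsWith(e.getKey())) {
                return e.getValue().get();
            }
        }
        throw new UnsupportedOperationException(
                "Unrecognizable audio renderer specified: " + requested);
    }

    public static void main(String[] args) {
        AudioBackendRegistry registry = new AudioBackendRegistry();
        // The outer lambda is the factory, the inner lambda is the backend itself.
        registry.register("LWJGL", () -> () -> "lwjgl-openal");
        registry.register("MyAL", () -> () -> "custom");
        System.out.println(registry.create("MyAL-1.0").name()); // prints "custom"
    }
}
```

With something along these lines, a user could register a backend before starting the application instead of patching the engine.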

LegacyApplication.initAudio()

    // why put a Listener object in the LegacyApplication class?
    // why does the Listener class have such a generic, non-specialized name?
    // why not AudioListener?
    private void initAudio() {
        if (settings.getAudioRenderer() != null && context.getType() != Type.Headless) {
            audioRenderer = JmeSystem.newAudioRenderer(settings);
            audioRenderer.initialize();
            AudioContext.setAudioRenderer(audioRenderer);

            listener = new Listener();
            audioRenderer.setListener(listener);
        }
    }

It also became apparent that many OpenAL capabilities, such as effects and sound filters, are only partially used by the current jME integration. As the two methods below show, the only effect supported is reverb and the only filter is a low-pass filter.

ALAudioRenderer.setEnvironment()

    // ALAudioRenderer only supports ReverbEffect (alias Environment)
    @Override
    public void setEnvironment(Environment env) {
        checkDead();
        synchronized (threadLock) {
            if (audioDisabled || !supportEfx) {
                return;
            }

            efx.alEffectf(reverbFx, EFX.AL_REVERB_DENSITY, env.getDensity());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_DIFFUSION, env.getDiffusion());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_GAIN, env.getGain());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_GAINHF, env.getGainHf());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_DECAY_TIME, env.getDecayTime());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_DECAY_HFRATIO, env.getDecayHFRatio());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_REFLECTIONS_GAIN, env.getReflectGain());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_REFLECTIONS_DELAY, env.getReflectDelay());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_LATE_REVERB_GAIN, env.getLateReverbGain());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_LATE_REVERB_DELAY, env.getLateReverbDelay());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_AIR_ABSORPTION_GAINHF, env.getAirAbsorbGainHf());
            efx.alEffectf(reverbFx, EFX.AL_REVERB_ROOM_ROLLOFF_FACTOR, env.getRoomRolloffFactor());

            // attach effect to slot
            efx.alAuxiliaryEffectSloti(reverbFxSlot, EFX.AL_EFFECTSLOT_EFFECT, reverbFx);
        }
    }

ALAudioRenderer.updateFilter()

    // ALAudioRenderer only supports LowPassFilter
    private void updateFilter(Filter f) {
        int id = f.getId();
        if (id == -1) {
            ib.position(0).limit(1);
            efx.alGenFilters(1, ib);
            id = ib.get(0);
            f.setId(id);

            objManager.registerObject(f);
        }

        if (f instanceof LowPassFilter) {
            LowPassFilter lpf = (LowPassFilter) f;
            efx.alFilteri(id, EFX.AL_FILTER_TYPE, EFX.AL_FILTER_LOWPASS);
            efx.alFilterf(id, EFX.AL_LOWPASS_GAIN, lpf.getVolume());
            efx.alFilterf(id, EFX.AL_LOWPASS_GAINHF, lpf.getHighFreqVolume());
        } else {
            throw new UnsupportedOperationException("Filter type unsupported: "
                    + f.getClass().getName());
        }

        f.clearUpdateNeeded();
    }
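As a rough idea of how the `instanceof` check above could be opened up to other filter types, the sketch below lets each filter upload its own EFX parameters. It is not jME code: `EfxCalls`, `UploadableFilter`, `LowPass`, `HighPass` and `RecordingEfx` are hypothetical names, and the constant values are the ones I know from OpenAL Soft's `efx.h`, so double-check them against your headers. The recording stub only logs calls, so the example runs without an audio device.

```java
import java.util.ArrayList;
import java.util.List;

final class FilterUploadSketch {
    // EFX constants, values as defined in OpenAL Soft's efx.h.
    static final int AL_FILTER_TYPE = 0x8001;
    static final int AL_FILTER_LOWPASS = 0x0001;
    static final int AL_FILTER_HIGHPASS = 0x0002;
    static final int AL_LOWPASS_GAIN = 0x0001;
    static final int AL_LOWPASS_GAINHF = 0x0002;
    static final int AL_HIGHPASS_GAIN = 0x0001;
    static final int AL_HIGHPASS_GAINLF = 0x0002;

    /** Hypothetical stand-in for the two EFX calls used by updateFilter(). */
    interface EfxCalls {
        void alFilteri(int filter, int param, int value);
        void alFilterf(int filter, int param, float value);
    }

    /** Hypothetical: each filter type uploads its own parameters. */
    interface UploadableFilter {
        void upload(EfxCalls efx, int id);
    }

    static final class LowPass implements UploadableFilter {
        private final float gain;
        private final float gainHf;

        LowPass(float gain, float gainHf) {
            this.gain = gain;
            this.gainHf = gainHf;
        }

        @Override
        public void upload(EfxCalls efx, int id) {
            efx.alFilteri(id, AL_FILTER_TYPE, AL_FILTER_LOWPASS);
            efx.alFilterf(id, AL_LOWPASS_GAIN, gain);
            efx.alFilterf(id, AL_LOWPASS_GAINHF, gainHf);
        }
    }

    static final class HighPass implements UploadableFilter {
        private final float gain;
        private final float gainLf;

        HighPass(float gain, float gainLf) {
            this.gain = gain;
            this.gainLf = gainLf;
        }

        @Override
        public void upload(EfxCalls efx, int id) {
            efx.alFilteri(id, AL_FILTER_TYPE, AL_FILTER_HIGHPASS);
            efx.alFilterf(id, AL_HIGHPASS_GAIN, gain);
            efx.alFilterf(id, AL_HIGHPASS_GAINLF, gainLf);
        }
    }

    /** Records calls instead of talking to OpenAL. */
    static final class RecordingEfx implements EfxCalls {
        final List<String> calls = new ArrayList<>();

        @Override
        public void alFilteri(int filter, int param, int value) {
            calls.add("alFilteri(" + filter + ", " + param + ", " + value + ")");
        }

        @Override
        public void alFilterf(int filter, int param, float value) {
            calls.add("alFilterf(" + filter + ", " + param + ", " + value + ")");
        }
    }

    /** Replaces the instanceof chain: a new filter type needs no renderer change. */
    static void updateFilter(EfxCalls efx, int id, UploadableFilter f) {
        f.upload(efx, id);
    }

    public static void main(String[] args) {
        RecordingEfx efx = new RecordingEfx();
        updateFilter(efx, 7, new HighPass(1.0f, 0.5f));
        efx.calls.forEach(System.out::println);
    }
}
```

The trade-off is that filter classes then depend on the EFX interface, which the current jME design avoids by keeping all OpenAL calls inside the renderer.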

I temporarily paused development on the demo to thoroughly review the OpenAL documentation and identify untapped potential.

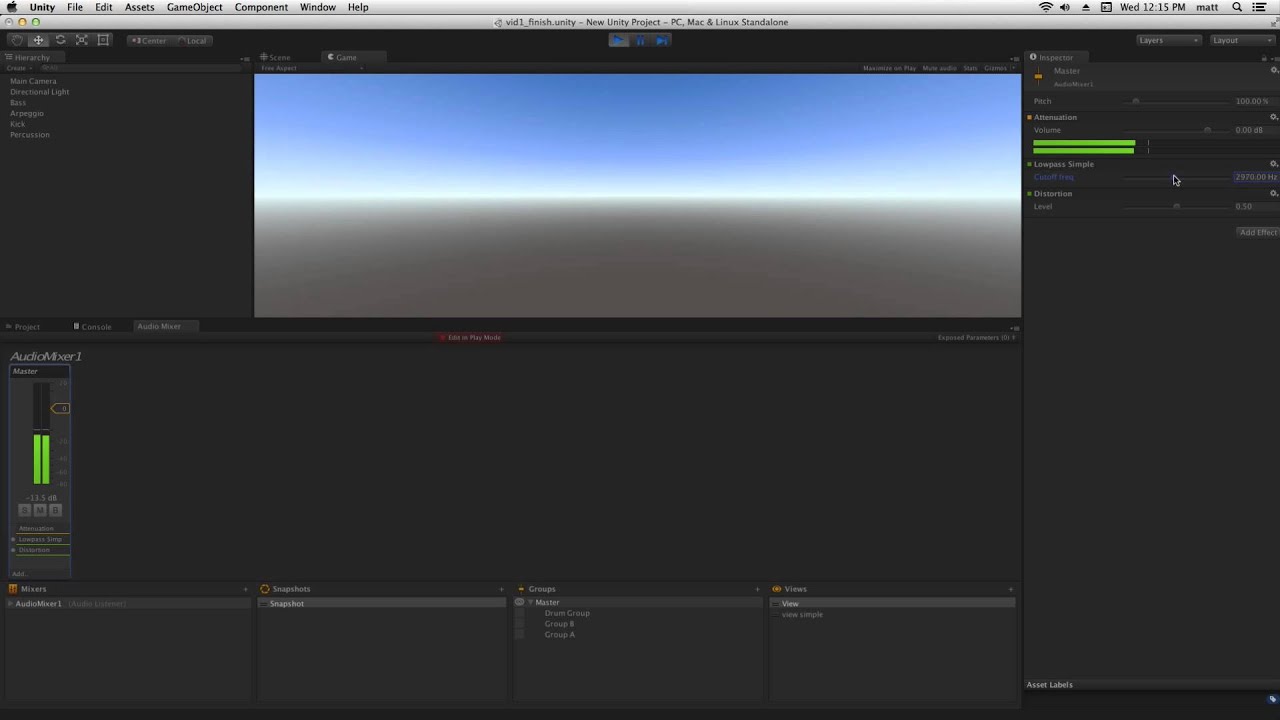

Based on my findings, I discovered a method to bypass the standard AudioRenderer initialization process and integrate my own custom SoundManager, offering a significantly simpler and more modular API. Attached is a screenshot showcasing the functionality I have currently implemented:

    public static void main(String[] args) {
        AppSettings settings = new AppSettings(true);
        settings.setResolution(640, 480);
        settings.setAudioRenderer(null); // disable jME AudioRenderer

        Test_SoundManager app = new Test_SoundManager();
        app.setSettings(settings);
        app.setShowSettings(false);
        app.setPauseOnLostFocus(false);
        app.start();
    }

At this stage, I am still working on integrating multichannel audio management, audio streams, and a dedicated WAV loader. However, I have successfully implemented:

- SoundManager
- SoundSource
- SoundBuffer
- SoundListener
- AudioEffects: Chorus, Compressor, Distortion, Echo, Flanger, PitchShift, Reverb
- AudioFilters: LowPassFilter & HighPassFilter
- the capability to modify the distance attenuation model equation
- OGGLoader
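For readers unfamiliar with the distance attenuation models mentioned in the list, the sketch below computes the gain of the three clamped models from the OpenAL 1.1 specification, which OpenAL selects via `alDistanceModel`. It is not my SoundManager code: the class and method names are made up for illustration, and the formulas are reproduced as I understand them from the spec.

```java
/** Gain formulas of the three clamped OpenAL 1.1 distance models (illustrative names). */
final class DistanceModels {
    private DistanceModels() {
    }

    /** Clamps the distance to [ref, max], as the *_CLAMPED models do. */
    private static float clamp(float d, float ref, float max) {
        return Math.max(ref, Math.min(d, max));
    }

    /** AL_INVERSE_DISTANCE_CLAMPED: gain = ref / (ref + rolloff * (d - ref)). */
    static float inverseClamped(float d, float ref, float max, float rolloff) {
        d = clamp(d, ref, max);
        return ref / (ref + rolloff * (d - ref));
    }

    /** AL_LINEAR_DISTANCE_CLAMPED: gain = 1 - rolloff * (d - ref) / (max - ref). */
    static float linearClamped(float d, float ref, float max, float rolloff) {
        d = clamp(d, ref, max);
        return 1f - rolloff * (d - ref) / (max - ref); // not guarded against max == ref
    }

    /** AL_EXPONENT_DISTANCE_CLAMPED: gain = (d / ref) ^ (-rolloff). */
    static float exponentClamped(float d, float ref, float max, float rolloff) {
        d = clamp(d, ref, max);
        return (float) Math.pow(d / ref, -rolloff); // not guarded against ref == 0
    }

    public static void main(String[] args) {
        // Source 2 units away, reference distance 1, rolloff factor 1.
        System.out.println(inverseClamped(2f, 1f, 100f, 1f)); // prints 0.5
        // Source halfway between reference distance 1 and max distance 11.
        System.out.println(linearClamped(6f, 1f, 11f, 1f));   // prints 0.5
        // Rolloff factor 2 gives inverse-square falloff.
        System.out.println(exponentClamped(2f, 1f, 100f, 2f)); // prints 0.25
    }
}
```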

I am eager to explore the extent to which I can improve the audio system and integrate advanced features, drawing inspiration from concepts found in engines like Unity, specifically the AudioMixer and AudioMixerGroup.

Edit:

Maybe something to think about for jme4

In the meantime, here is the new look of my editor for the current jme AudioNodes: