Hello,

About 2 years ago (1 year and 7 months, to be precise), I posted a thread: "Regarding the development of the core engine, I would like to know what everyone thinks" (#27 by Ali_RS). I originally planned to complete this work back then, but had to step away from JME3 for a while because I was busy with work and making a living. I have kept following JME3 the whole time.

During my time away, I mainly worked in graphics-related roles on UE4/UE5 engine development. Dead by Daylight Mobile, built with UE4, is a recent mobile game I helped launch. Although I’m still quite busy, my goals are the same as 2 years ago: I’m happy to provide any technology I can implement for JMonkeyEngine3, and I hope to merge it into the current core engine. So far I’ve organized some of my earlier modifications to JME3, which include:

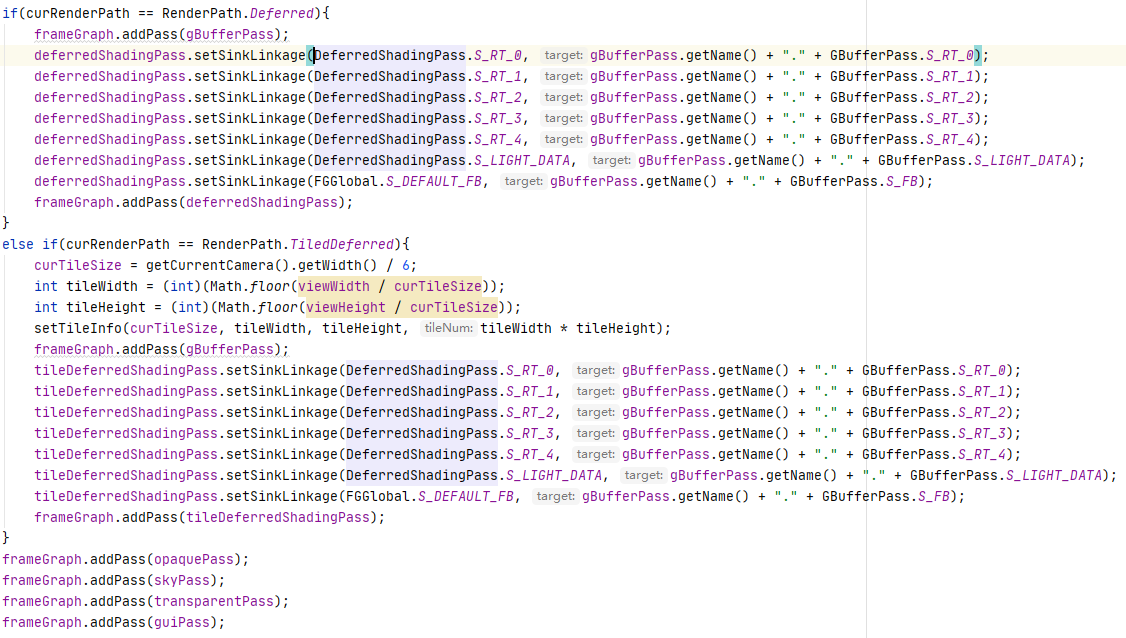

- Framegraph (Scenegraph organizes scene objects, while Framegraph organizes modern rendering workflows, see: https://www.gdcvault.com/play/1024612/FrameGraph-Extensible-Rendering-Architecture-in). I’ve implemented a basic framework which isn’t perfect but can work and is compatible with JME3.4 core.

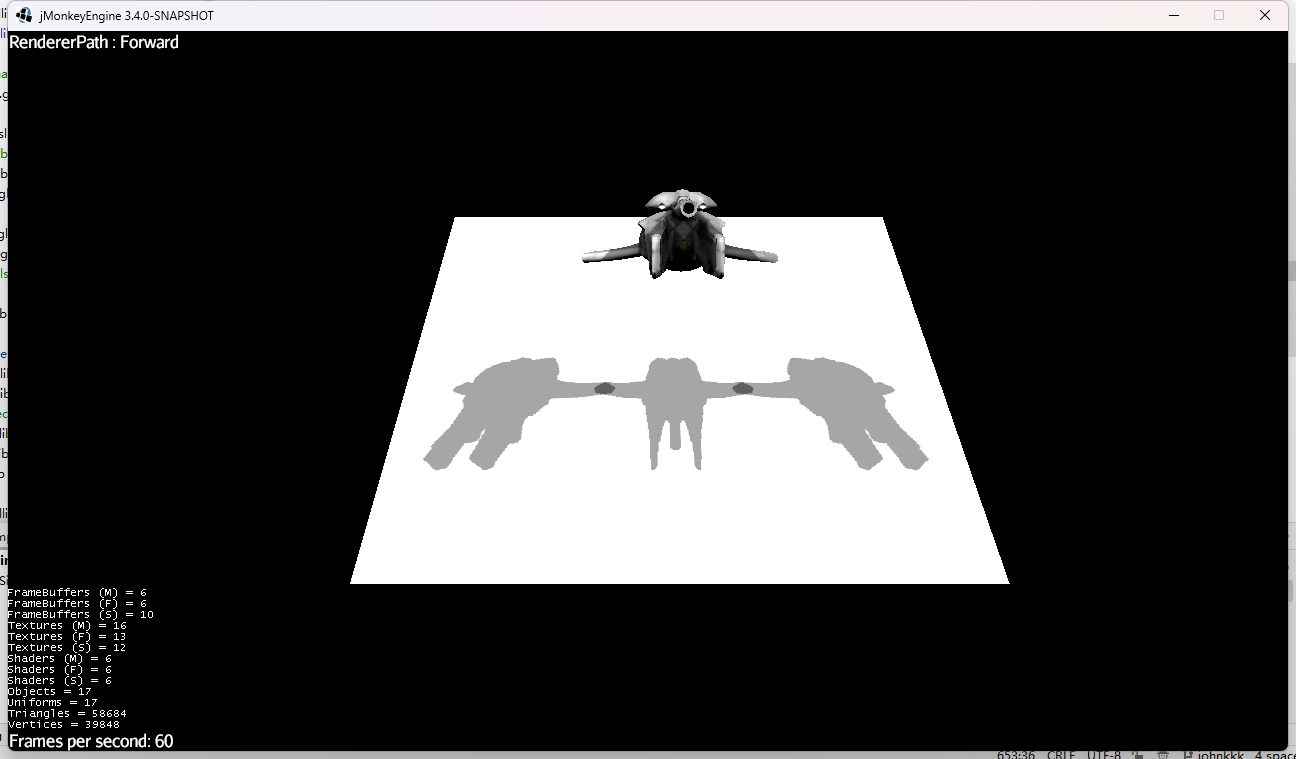

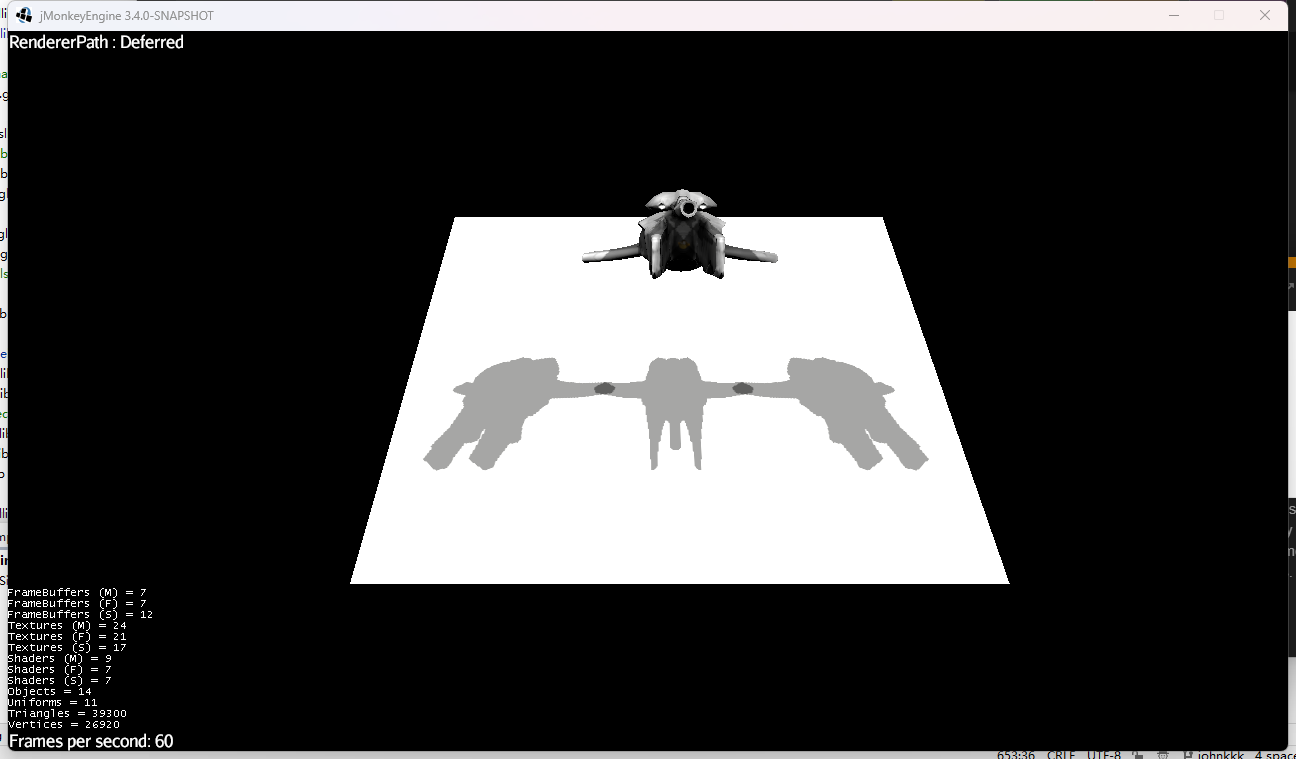

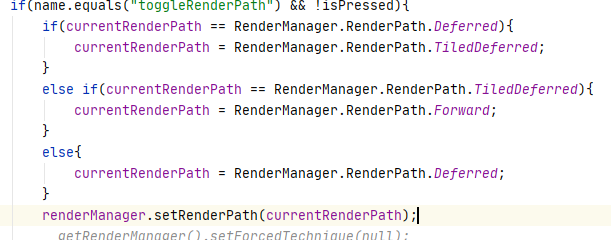

- Implemented Forward, Deferred, TileBasedDeferred, Forward+ and other render paths. They are fully compatible with the current JME3 core (and should be compatible with most existing code), and are almost non-invasive for existing projects. You can switch render paths at runtime with a single function call.

- Global Illumination (I originally planned to implement two main GI methods). As some of you may know, JME3’s PBR indirect lighting is similar to the SkyLight + ReflectionProbes setup in UE/Unity3D/Godot. If you’ve used other engines, you’ll have noticed they offer ReflectionProbes, LightProbes, and SkyLights. In UE4/UE5/Unity3D, SkyLight corresponds to the environment diffuse SH in JME3’s PBR indirect lighting, while ReflectionProbes correspond to JME3’s PBR indirect specular IBL. For real GI, however, SkyLight and environment reflections aren’t enough. In Unity3D, Godot, and UE4 (UE5 now uses Lumen fully), GI is built from combinations of Lightmap/Lightprobes/LPV/vxGI/DDGI+SSGI/ReflectionProbes+SkyLight. There are many combined techniques; see Godot’s doc for details: "Introduction to global illumination" (Godot Engine stable documentation). So I’ve implemented a mainstream LightProbe system. Probe placement really needs visualization tools (doing it in pure code is too painful), so it’s not perfect yet. I’ve explained the details in code comments. For now I defined it as LightProbeVolume to stay compatible with the current JME3 core, but some issues may need discussion with the core devs - perhaps in a separate thread?

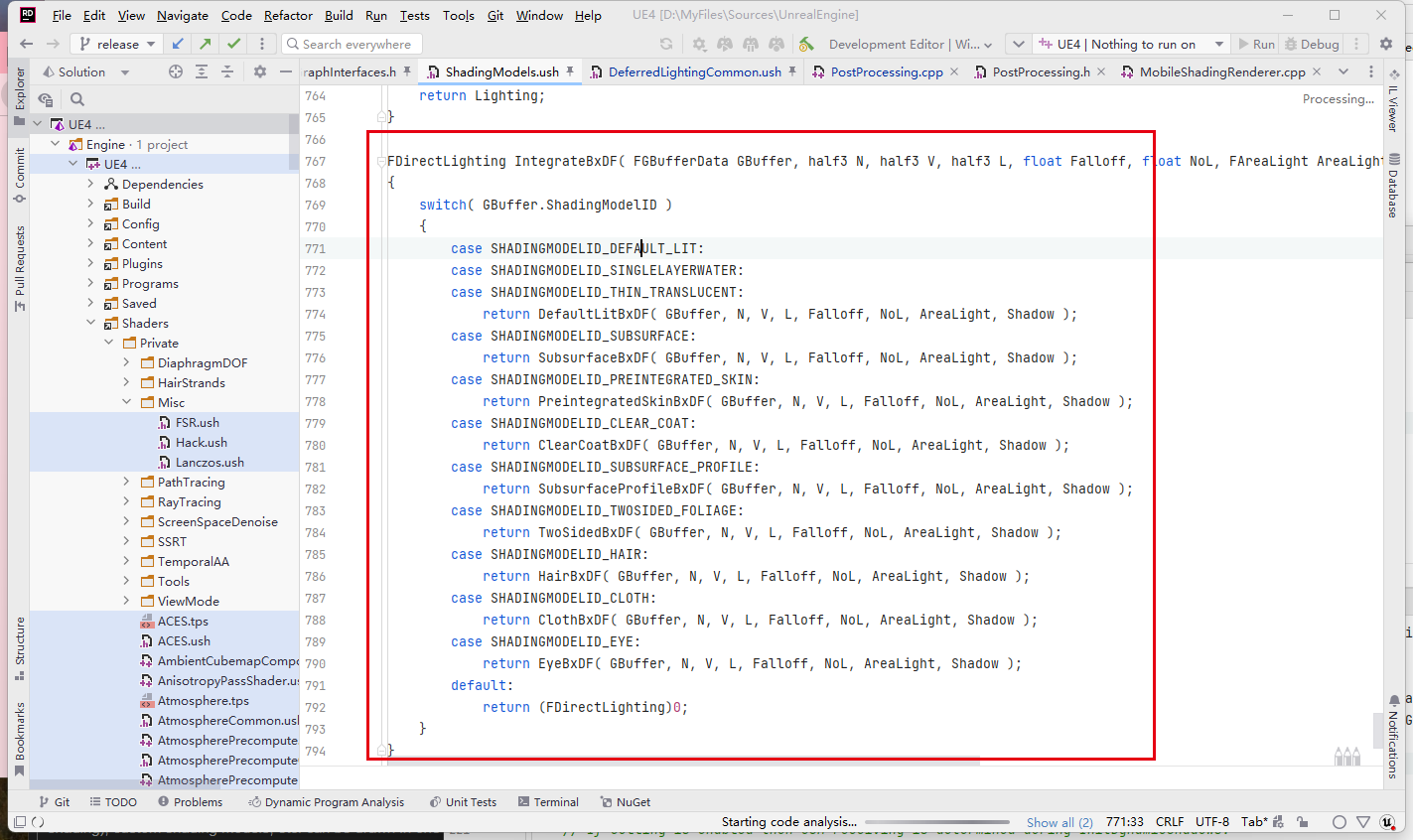

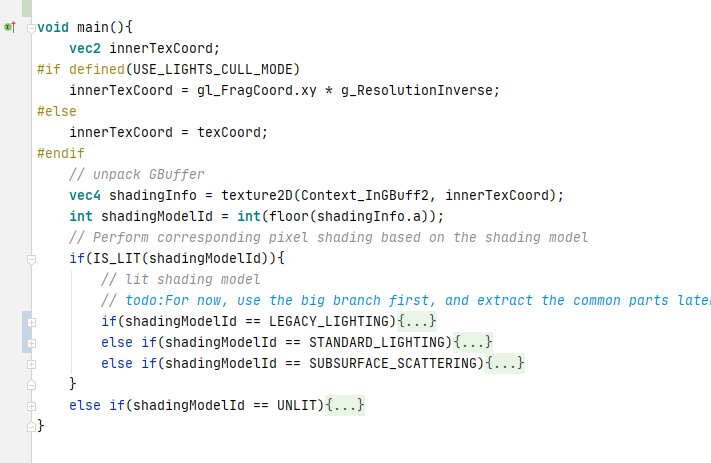

- ShadingModel - with multiple render paths now, ShadingModels are needed to shade materials properly under deferred rendering (similar to UE4).
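To illustrate the ShadingModel idea, here is a minimal sketch of how a per-pixel shading model id could be written to a G-buffer channel and read back in the deferred lighting pass. Everything in it is an assumption for illustration: the `ShadingModel` enum values, the 8-bit channel encoding, and the toy `shade` function are hypothetical and are not taken from the actual patch.

```java
final class ShadingModelSketch {
    // Hypothetical set of shading models; the real list may differ.
    enum ShadingModel { UNLIT, PHONG, PBR }

    // Encode the model id as a normalized value, as if stored in an 8-bit G-buffer channel.
    static float encode(ShadingModel model) {
        return model.ordinal() / 255f;
    }

    // Decode the id read back from the G-buffer during the lighting pass.
    static ShadingModel decode(float channel) {
        int id = Math.round(channel * 255f);
        return ShadingModel.values()[id];
    }

    // Toy lighting pass: picks a different (scalar) lighting function per model.
    static float shade(ShadingModel model, float albedo, float nDotL) {
        float diffuse = Math.max(nDotL, 0f);
        switch (model) {
            case UNLIT:
                return albedo;                            // ignores lighting entirely
            case PHONG:
                return albedo * diffuse;                  // diffuse term only, no specular
            case PBR:
                return albedo * diffuse / (float) Math.PI; // Lambert diffuse term only
            default:
                throw new IllegalArgumentException("Unknown shading model: " + model);
        }
    }
}
```

The point of the design is that the geometry pass only records which model a pixel uses, and a single full-screen lighting pass branches on that id instead of needing one material shader per object.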

There may still be bugs in the above, but that’s ongoing work I can maintain and fix regularly. What I want to confirm is: can these be merged into the current core? I may implement more advanced graphics features later (UE4’s HierarchicalInstancedStaticMesh, GPU Driven Pipeline, state-of-the-art OcclusionCull, variable rate shading, AMD FSR, skin rendering, etc.).

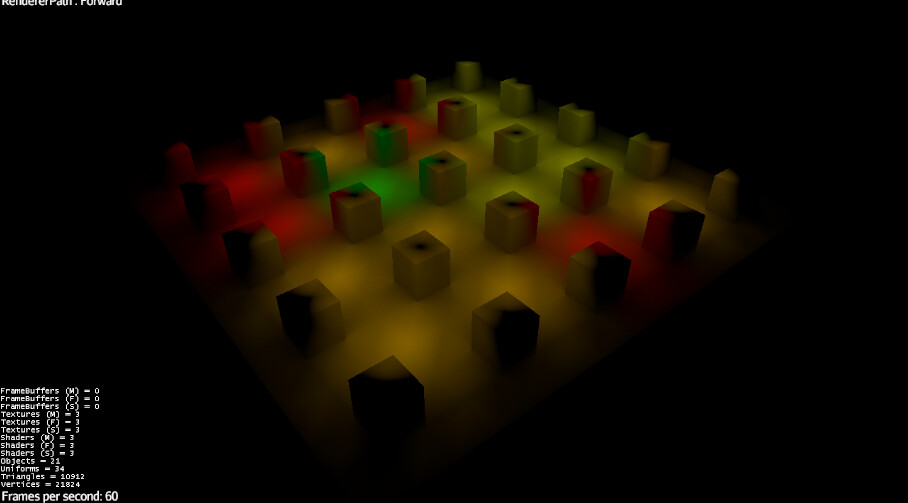

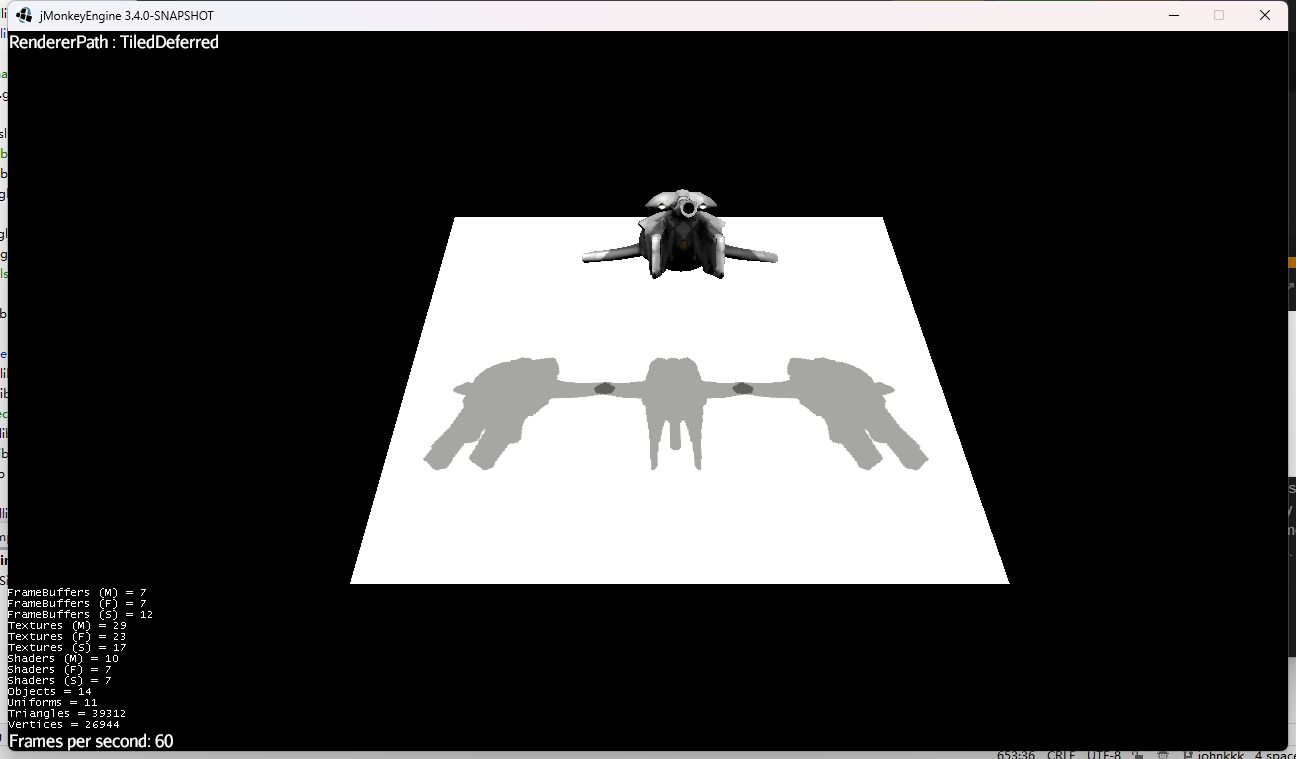

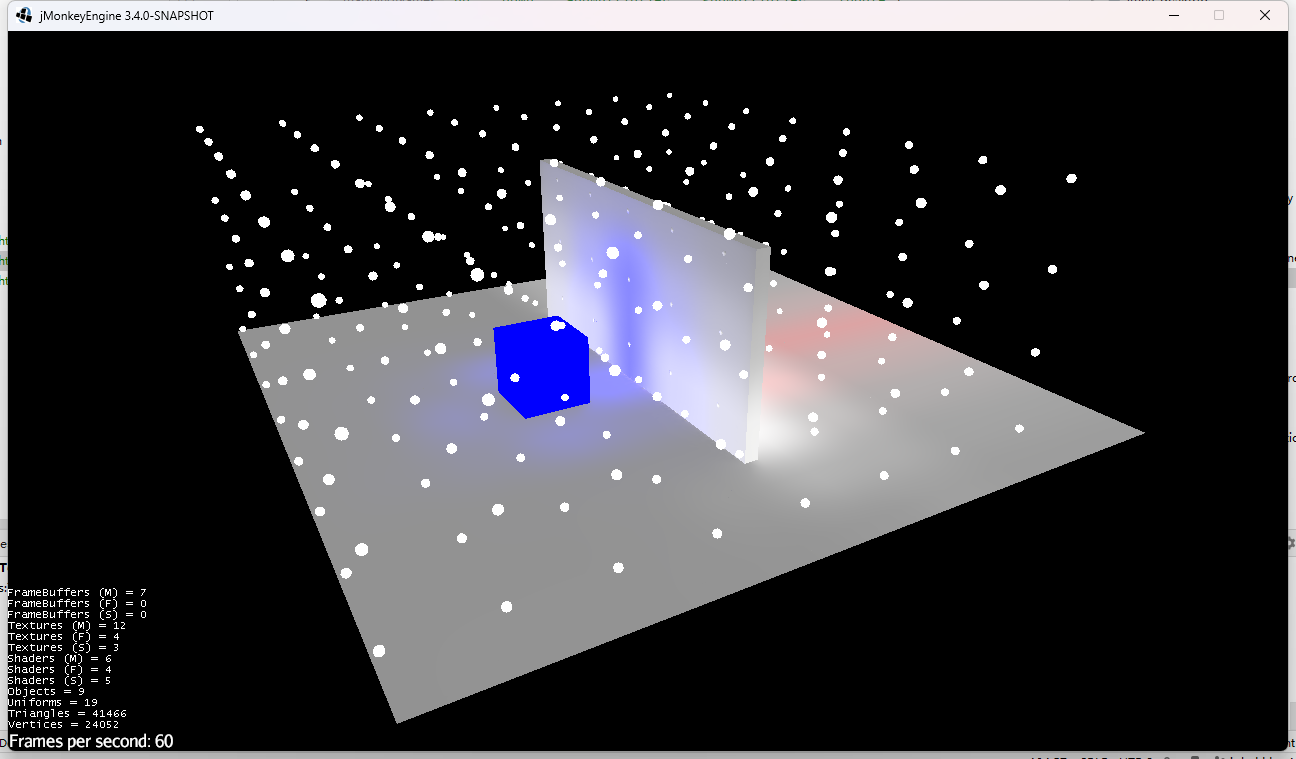

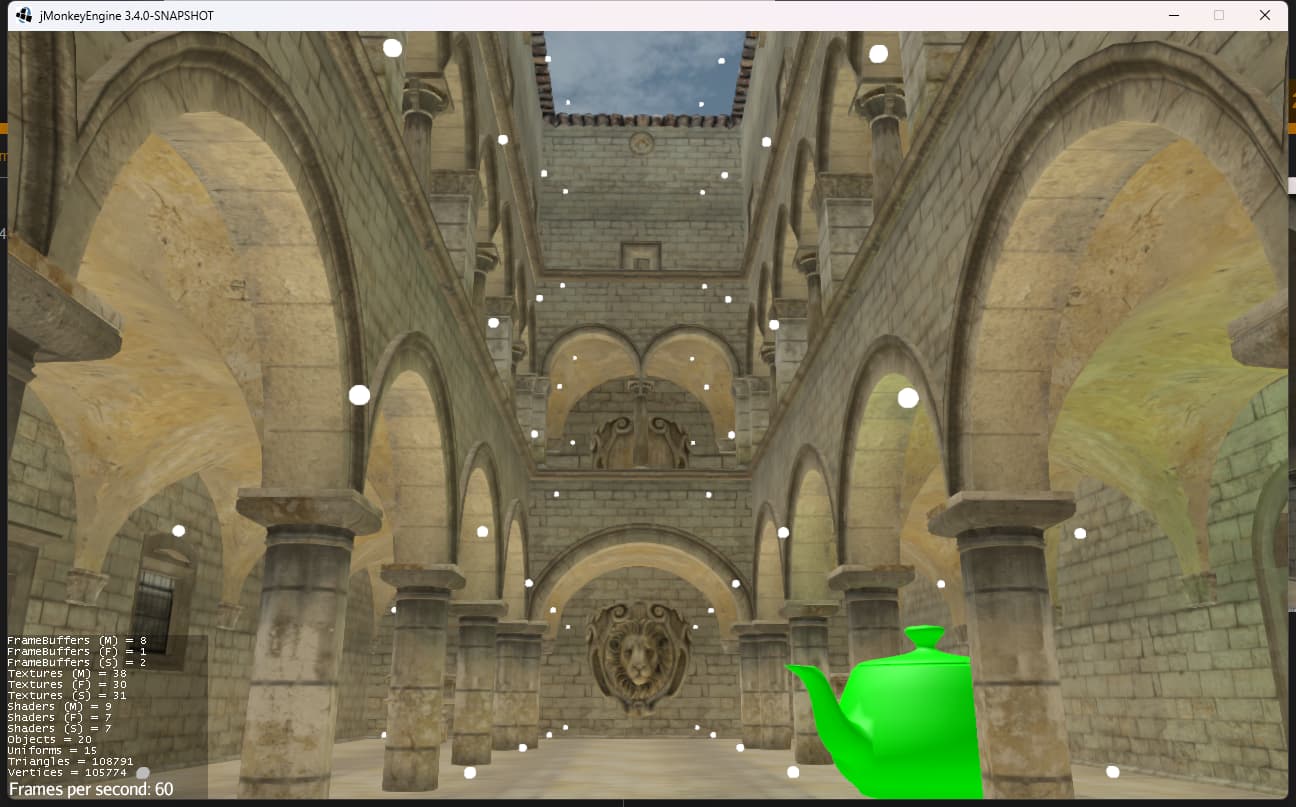

Below are some example screenshots of tests. The following shows a comparison of the differences when switching between Forward, Deferred, and TileBasedDeferred render paths at runtime:

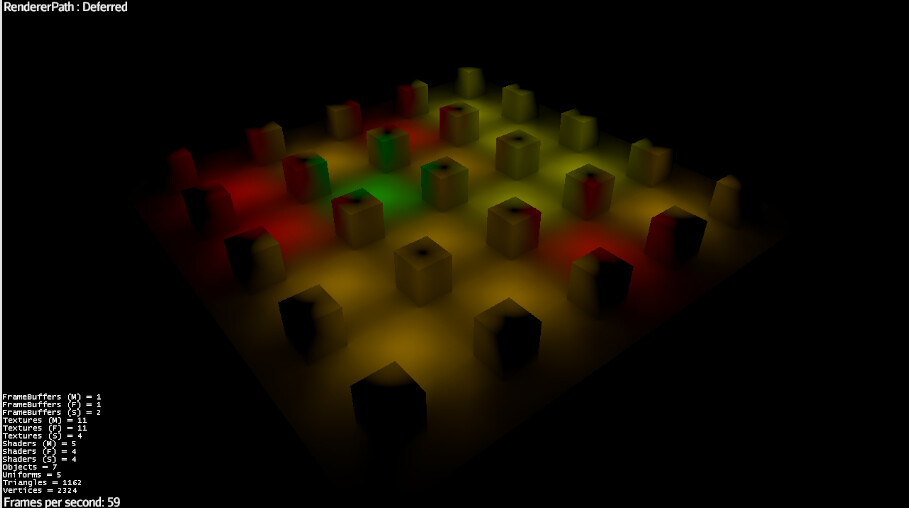

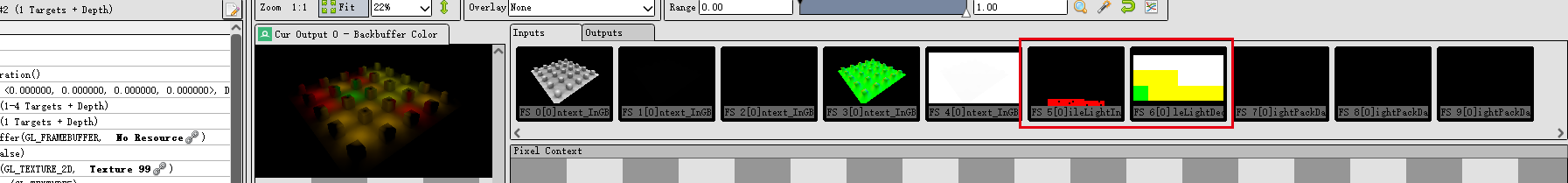

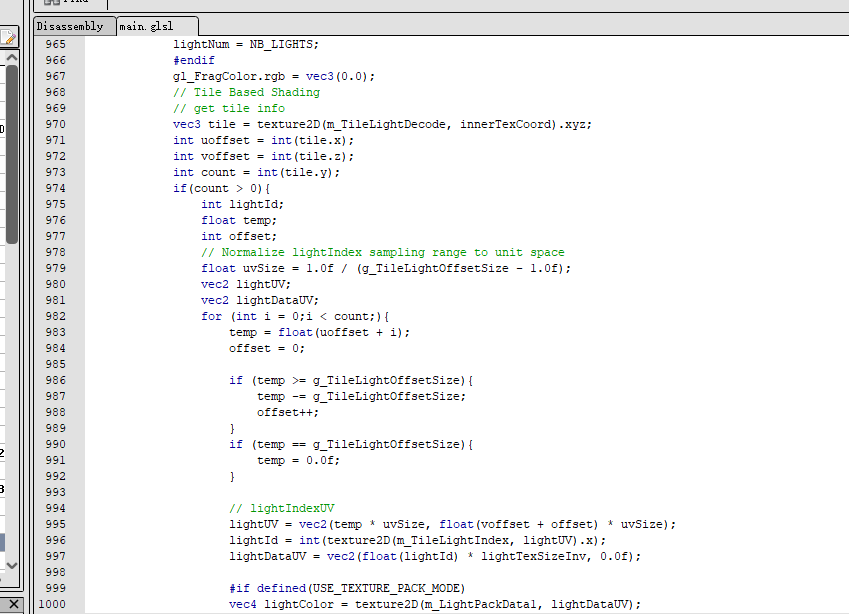

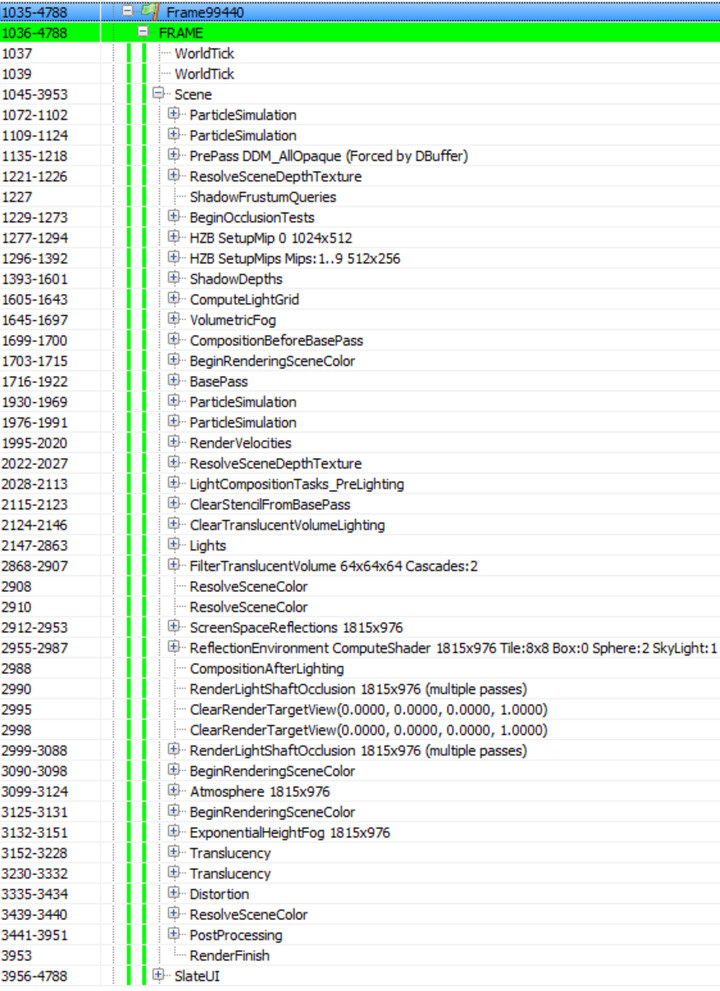

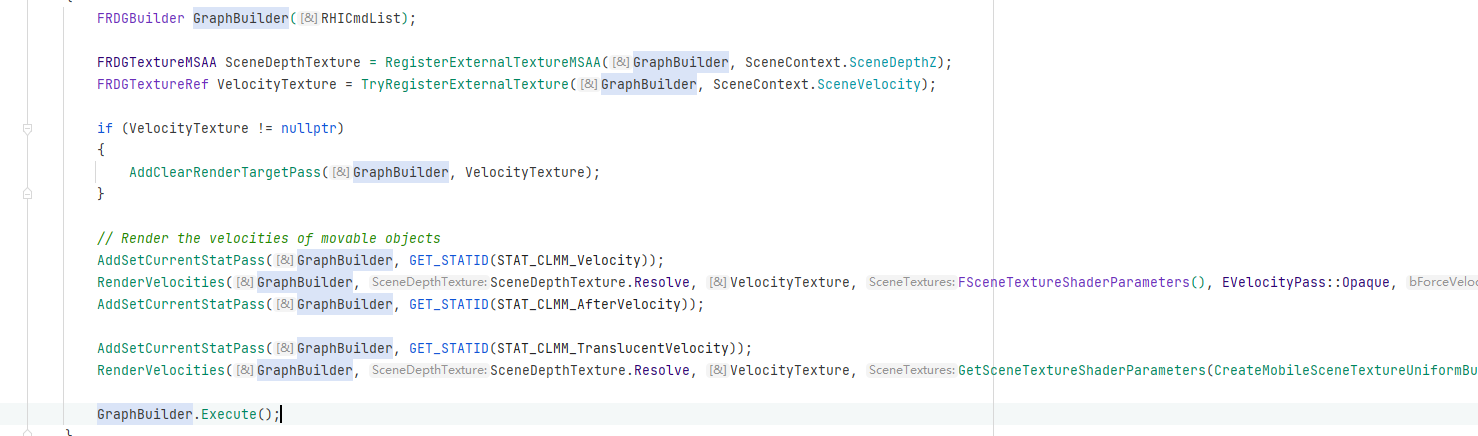
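To make the Framegraph and runtime render path switching ideas more concrete, here is a minimal sketch, assuming a frame graph is just an ordered list of passes that declare which named resources they read and write. All names (`Pass`, `RenderPath`, `build`, `compile`, the resource names) are hypothetical and not the API of my implementation. Switching the render path simply rebuilds the pass list, and passes whose outputs are never consumed are culled.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

final class FrameGraphSketch {
    // A pass declares the named resources it reads and writes.
    static final class Pass {
        final String name;
        final Set<String> reads;
        final Set<String> writes;

        Pass(String name, Set<String> reads, Set<String> writes) {
            this.name = name;
            this.reads = reads;
            this.writes = writes;
        }
    }

    enum RenderPath { FORWARD, DEFERRED }

    static Set<String> setOf(String... names) {
        return new HashSet<>(Arrays.asList(names));
    }

    // Each render path is only a different list of passes, in submission order.
    static List<Pass> build(RenderPath path) {
        List<Pass> passes = new ArrayList<>();
        if (path == RenderPath.FORWARD) {
            passes.add(new Pass("ForwardLighting", setOf(), setOf("SceneColor")));
        } else {
            passes.add(new Pass("GBuffer", setOf(), setOf("GBuffer", "Depth")));
            passes.add(new Pass("DeferredLighting", setOf("GBuffer", "Depth"), setOf("SceneColor")));
        }
        // This pass writes a resource nobody reads, so it should be culled.
        passes.add(new Pass("DebugOverlay", setOf("SceneColor"), setOf("DebugColor")));
        passes.add(new Pass("PostProcess", setOf("SceneColor"), setOf("BackBuffer")));
        return passes;
    }

    // Walk backwards from the final output and keep only passes that contribute to it.
    // Assumes passes are listed in submission order and each resource has one writer.
    static List<String> compile(List<Pass> passes, String finalOutput) {
        Set<String> needed = new HashSet<>();
        needed.add(finalOutput);
        List<String> order = new ArrayList<>();
        for (int i = passes.size() - 1; i >= 0; i--) {
            Pass pass = passes.get(i);
            boolean contributes = false;
            for (String written : pass.writes) {
                if (needed.contains(written)) {
                    contributes = true;
                }
            }
            if (contributes) {
                needed.addAll(pass.reads);
                order.add(0, pass.name);
            }
        }
        return order;
    }
}
```

With this sketch, `compile(build(RenderPath.DEFERRED), "BackBuffer")` keeps the G-buffer, lighting, and post-process passes and drops the unused debug pass, while the forward path yields a shorter list from the same call.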

For generality, I have not heavily optimized the Deferred rendering - it uses 4 RTs + 1 Depth by default. This follows the UE4 renderer design, which is sufficient for most advanced rendering data packing needs. I pack the LightData information into textures instead of UniformBuffers (although I keep UniformBuffers as a fallback option). Below is an example of the data in one frame:

For TileBasedDeferred, in theory it is faster than Deferred. It has a tile culling operation for PointLights - this data is packed into a fetch texture and light source texture:
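As a rough CPU-side illustration of that tile culling step, here is a minimal sketch. It assumes 16x16 pixel tiles and point lights already projected to a screen-space circle (center and radius in pixels). The class and field names are hypothetical, and the mapping of `offsetCount` to the fetch texture and `lightIndices` to the light source texture is my simplification for this example, not the exact layout used in the shader.

```java
import java.util.ArrayList;
import java.util.List;

final class TileCullingSketch {
    static final int TILE_SIZE = 16; // assumed tile size in pixels

    // Per-tile (offset, count) pairs plus one flat list of light indices.
    static final class TileLightLists {
        final int tilesX;
        final int tilesY;
        final int[] offsetCount;  // 2 ints per tile: start offset and light count
        final int[] lightIndices; // light indices for all tiles, back to back

        TileLightLists(int tilesX, int tilesY, int[] offsetCount, int[] lightIndices) {
            this.tilesX = tilesX;
            this.tilesY = tilesY;
            this.offsetCount = offsetCount;
            this.lightIndices = lightIndices;
        }
    }

    // lights[i] = {centerX, centerY, radius}, all in pixels.
    static TileLightLists cull(int width, int height, float[][] lights) {
        int tilesX = (width + TILE_SIZE - 1) / TILE_SIZE;
        int tilesY = (height + TILE_SIZE - 1) / TILE_SIZE;
        int[] offsetCount = new int[tilesX * tilesY * 2];
        List<Integer> indices = new ArrayList<>();

        for (int ty = 0; ty < tilesY; ty++) {
            for (int tx = 0; tx < tilesX; tx++) {
                float minX = tx * TILE_SIZE, maxX = minX + TILE_SIZE;
                float minY = ty * TILE_SIZE, maxY = minY + TILE_SIZE;
                int offset = indices.size();
                for (int i = 0; i < lights.length; i++) {
                    float cx = lights[i][0], cy = lights[i][1], r = lights[i][2];
                    // Closest point of the tile rectangle to the light center.
                    float px = Math.max(minX, Math.min(cx, maxX));
                    float py = Math.max(minY, Math.min(cy, maxY));
                    float dx = cx - px, dy = cy - py;
                    if (dx * dx + dy * dy <= r * r) {
                        indices.add(i); // the light's circle overlaps this tile
                    }
                }
                int t = (ty * tilesX + tx) * 2;
                offsetCount[t] = offset;
                offsetCount[t + 1] = indices.size() - offset;
            }
        }
        int[] flat = indices.stream().mapToInt(Integer::intValue).toArray();
        return new TileLightLists(tilesX, tilesY, offsetCount, flat);
    }
}
```

In the lighting pass, a pixel would look up its tile's offset and count, then loop over only that slice of the index list instead of over every light in the scene, which is where the expected speedup over plain Deferred comes from.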

For shadows, under deferred rendering you can only use post-process shadows, so you are limited to using ShadowFilters. However, unlike before, in the future I may add visibility information, storing Cast, Receiver, Mask etc. data into the G-Buffer, so more complex shadow logic can be implemented during the post-process shadow pass.

I noticed @zzuegg implemented a better shadow filtering effect here (Illuminas - Deferred Shading and more). I may consider porting that in.

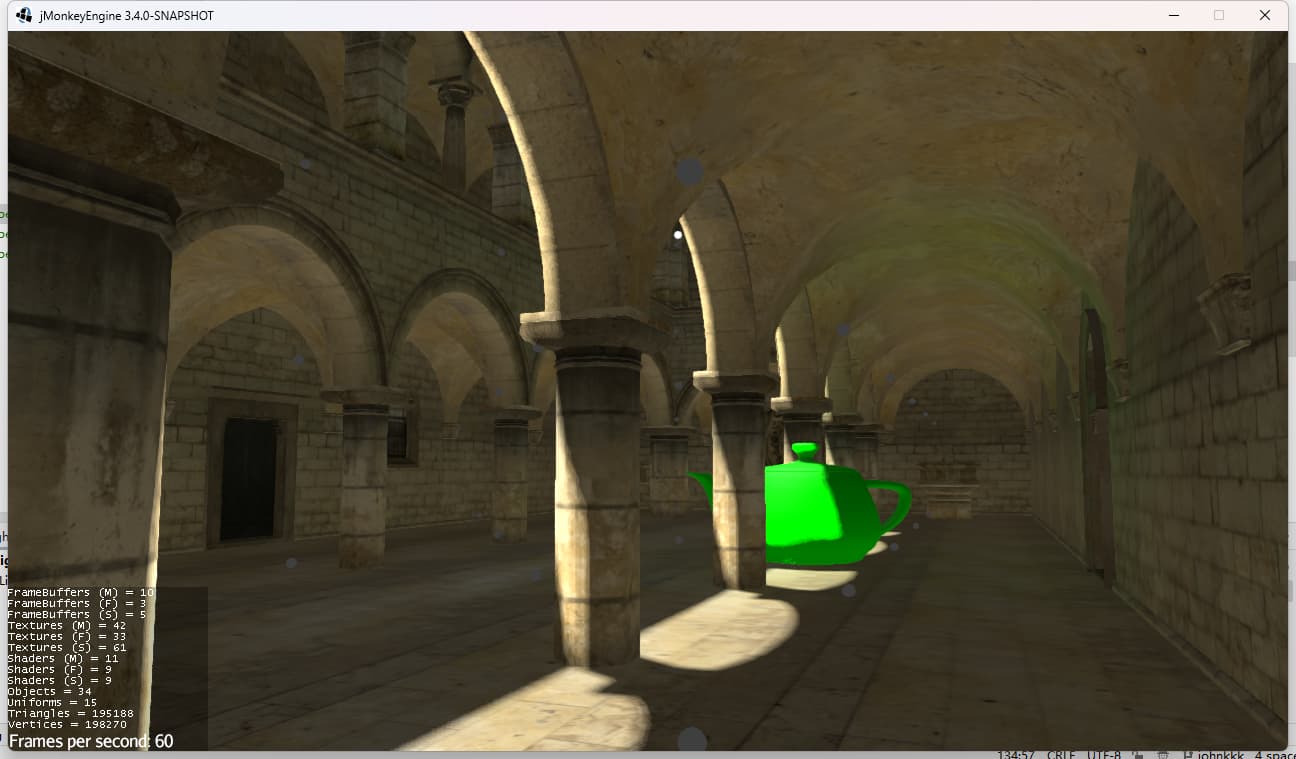

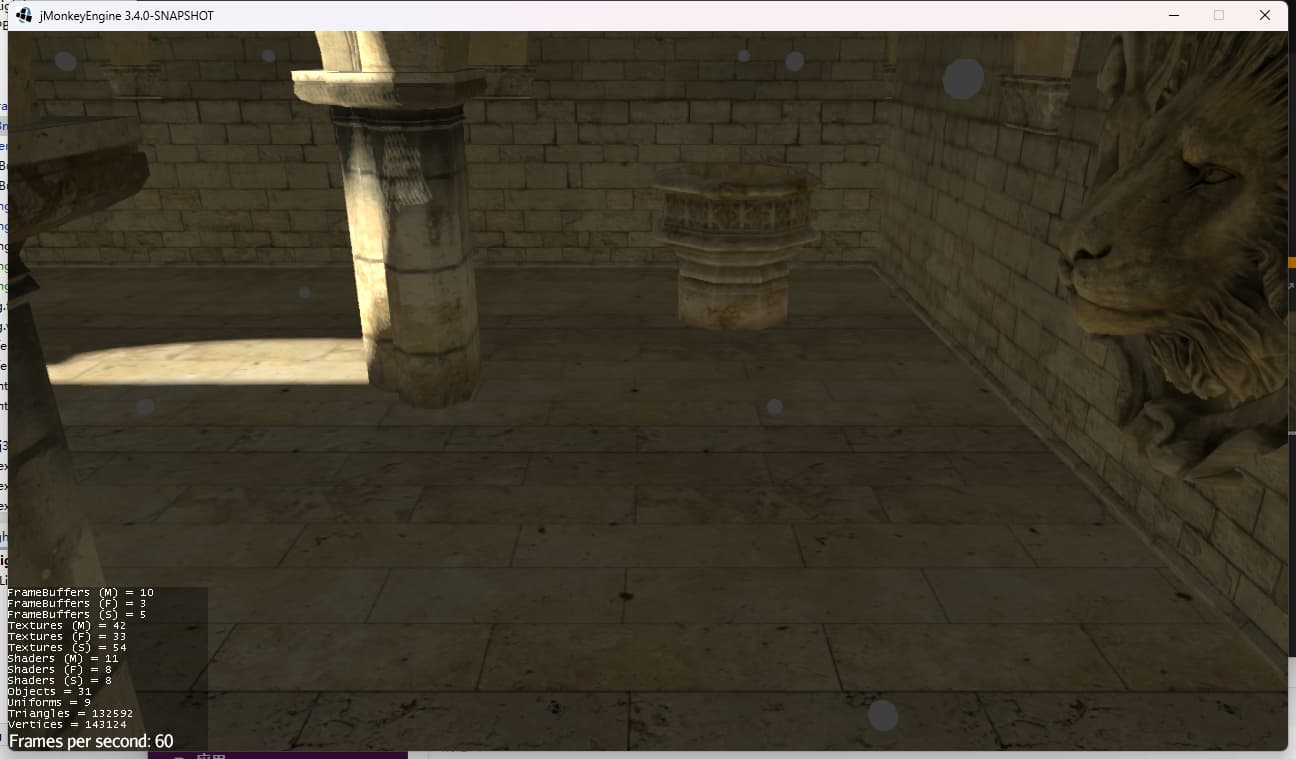

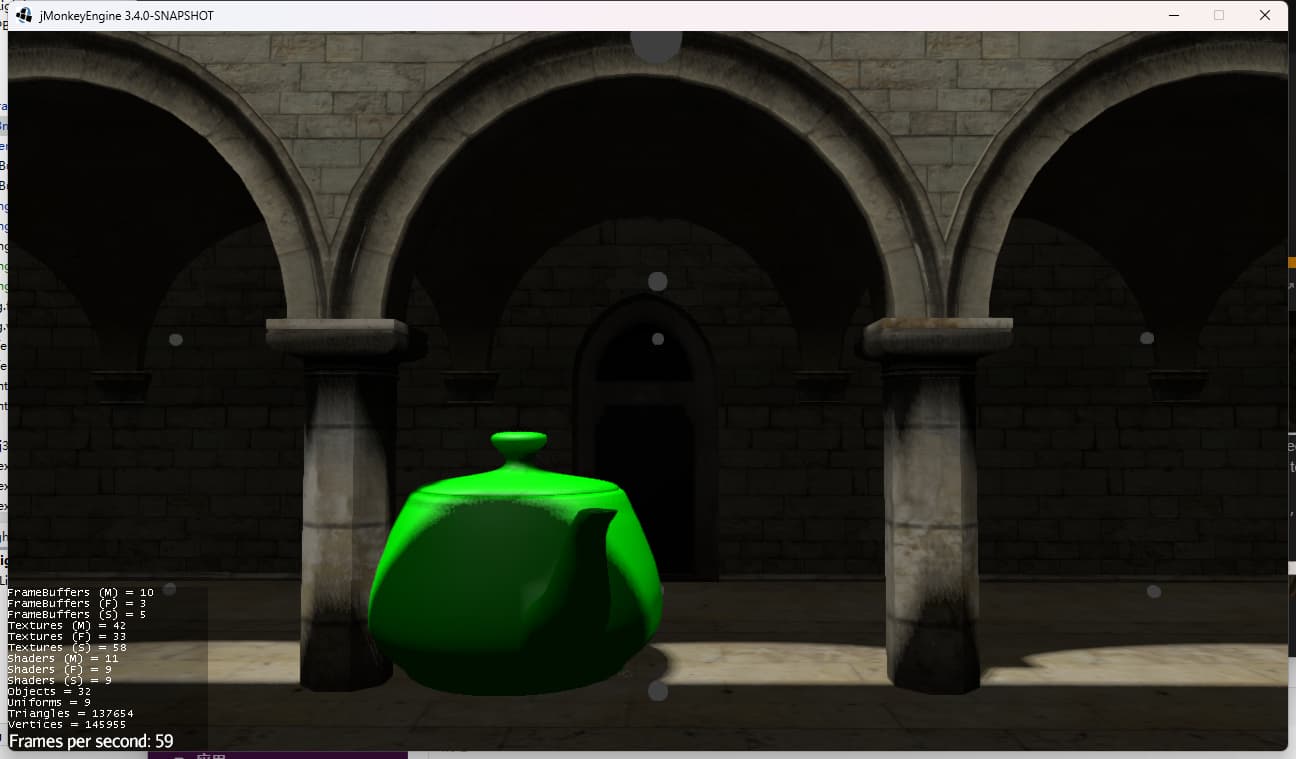

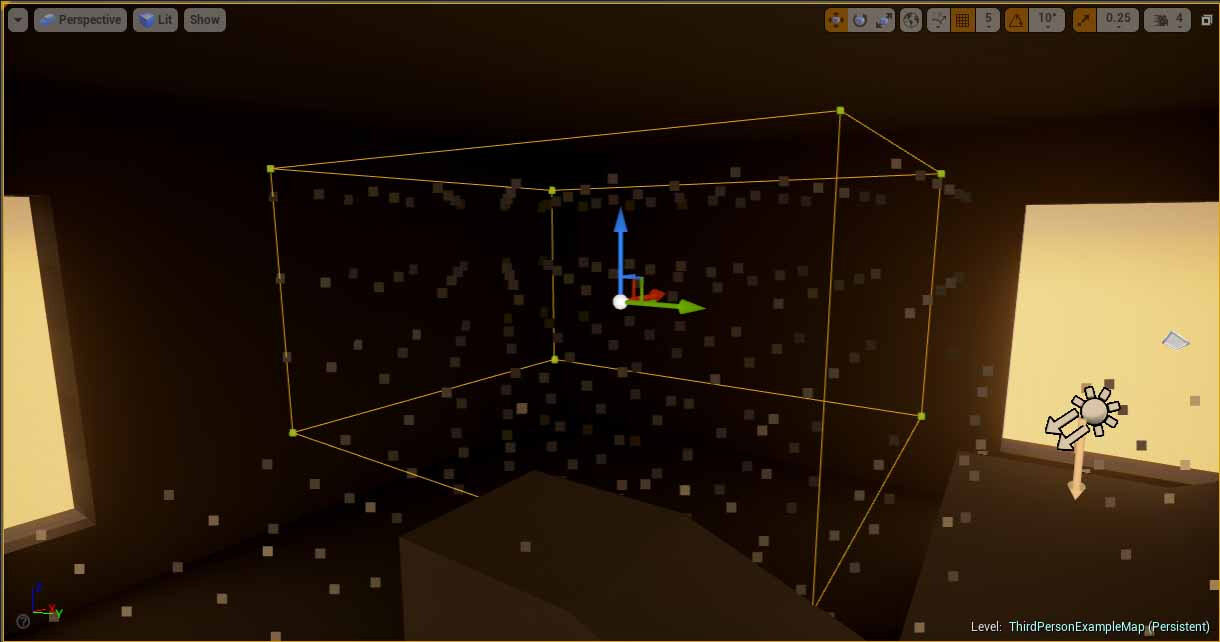

Next is the current LightProbeVolume implementation I have done. I referenced many materials including Unity3D’s lightprobe groups, Godot’s approach, and UE4’s Lightmass, then implemented a LightProbeVolume relatively compatible with JME3:

Note that the image is not PBR, but Phong Lighting + GI. As I mentioned, advanced graphics rendering requires adjusting some existing workflows in JME3, so I plan to refine it further after discussing with core developers.

Note the difference between constant ambient lighting and the LightProbeVolume. The first image uses constant ambient lighting, the second image uses a LightProbeVolume:

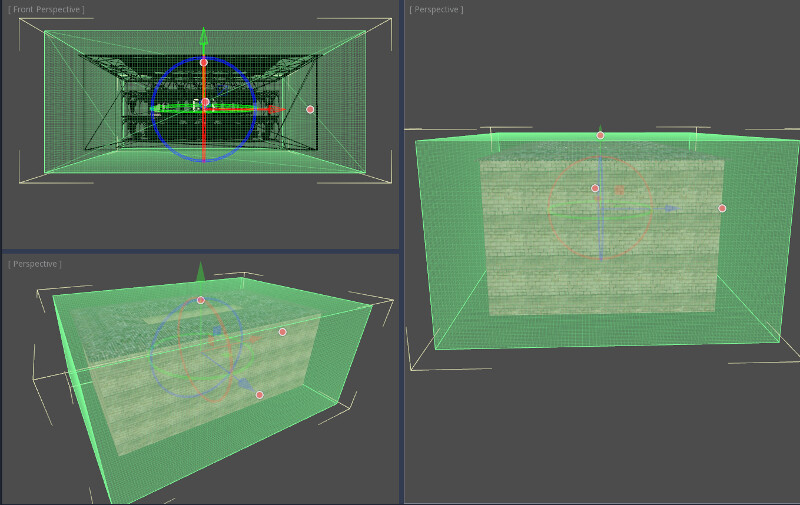

Since it is not working in an HDR pipeline, the colors are actually inaccurate - this can be fixed in future adjustments. But for now let’s look at the differences between the new LightProbeVolume and LightProbe in JME3. First, the most obvious difference is: the number of probes is very high, and the stored data includes not just irradiance but also data to prevent light leaks in GI, similar to Unity3D:


As you can see, there is a wall between the blue box and red box, so GI does not leak between them.
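The general idea behind this kind of leak prevention can be sketched as follows. This is a simplified illustration, not the code from my implementation: it assumes the 8 probes at the corners of one grid cell each store a single RGB irradiance value, and that a per-probe `visible` flag (which in a real system would be derived from the extra data stored with each probe) says whether the probe can see the shaded point. Probes that cannot see the point are dropped and the remaining trilinear weights are renormalized.

```java
final class ProbeVolumeSketch {
    // irradiance[i] is the RGB value of corner probe i of one grid cell.
    // Corner i sits at offsets (i & 1, (i >> 1) & 1, (i >> 2) & 1) along x, y, z.
    // (u, v, w) is the shaded point's position inside the cell, each in [0, 1].
    static float[] sample(float[][] irradiance, boolean[] visible, float u, float v, float w) {
        float[] result = new float[3];
        float totalWeight = 0f;
        for (int i = 0; i < 8; i++) {
            if (!visible[i]) {
                continue; // probe is behind a wall: it must not contribute
            }
            float wx = (i & 1) == 0 ? 1f - u : u;
            float wy = ((i >> 1) & 1) == 0 ? 1f - v : v;
            float wz = ((i >> 2) & 1) == 0 ? 1f - w : w;
            float weight = wx * wy * wz; // standard trilinear weight
            for (int c = 0; c < 3; c++) {
                result[c] += weight * irradiance[i][c];
            }
            totalWeight += weight;
        }
        if (totalWeight > 0f) {
            for (int c = 0; c < 3; c++) {
                result[c] /= totalWeight; // renormalize over the visible probes
            }
        }
        return result;
    }
}
```

For a point in the middle of a cell with blue probes on one side and red probes on the other, plain trilinear blending gives a purple mix. Marking the red probes as not visible yields pure blue, which is the behavior you see with the wall between the two boxes.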

Alright, I need some feedback. The most important question is whether I can merge this into the core module (my code is based on JME3.4, but the JME3.6 core shouldn’t have changed too much). Cheers!