I think it’s time to explain my current implementation details here, mainly what ShadingModel is and how it is associated with DeferredRenderPath.

I have currently added four built-in shading models:

- LEGACY_LIGHTING (Phong lighting)
- STANDARD_LIGHTING (PBR lighting)
- UNLIT (ColoredTextured, Unshaded, Terrain; in fact any material shader that only needs to output a single color value)
- SUBSURFACE_SCATTERING (realistic skin shading and other subsurface scattering materials)

More may be added later, such as EYE (eye shading model), Hair, and Cloth (fabrics).
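As a rough illustration of what "a shading model" means at the data level, here is a minimal Java sketch that models the four built-in models as an enum with small integer IDs. The enum is hypothetical (it mirrors the names above but is not the engine class), and the numeric IDs are assumptions; they stand for the value the GBuffer pass would write and the deferred shading pass would read back.

```java
/**
 * Hypothetical sketch of the four built-in shading models.
 * The numeric IDs are assumptions; they represent the value the GBuffer
 * pass writes and the deferred shading pass reads back.
 */
enum ShadingModel {
    LEGACY_LIGHTING(1),       // Phong lighting
    STANDARD_LIGHTING(2),     // PBR lighting
    UNLIT(3),                 // shaders that only output a single color
    SUBSURFACE_SCATTERING(4); // skin and other subsurface scattering materials

    // ID 0 is deliberately unused in this sketch so that a cleared GBuffer
    // texel (no geometry drawn there) cannot be mistaken for a shaded surface.
    private final int id;

    ShadingModel(int id) {
        this.id = id;
    }

    int getId() {
        return id;
    }

    /** Maps an ID read back from the GBuffer to its shading model. */
    static ShadingModel fromId(int id) {
        for (ShadingModel model : values()) {
            if (model.id == id) {
                return model;
            }
        }
        throw new IllegalArgumentException("Unknown shading model id: " + id);
    }
}
```

Adding EYE, Hair or Cloth later would then just mean adding a constant with a new unused ID, plus the matching branch in the deferred shading pass.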

The shading model determines how a surface is shaded. Simply put, deferred shading usually uses two passes: first the GBufferPass, then the DeferredShadingPass. The GBufferPass packs the data required by the specified shading model into the GBuffer, and the DeferredShadingPass fetches that data and shades each pixel according to that shading model.

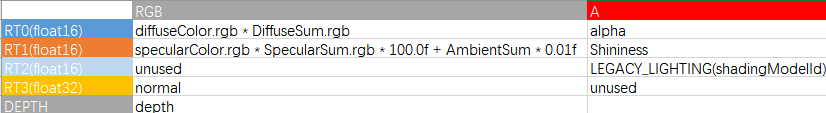
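To make the two passes concrete, here is a toy CPU model in Java. The engine does this work in shaders, so this is only an illustration under stated assumptions: the texel layout, the hypothetical IDs, and the Lambert term standing in for full Phong lighting are all mine. The point is that the GBuffer pass stores a shading model ID next to the data that model needs, and the deferred shading pass branches on that ID.

```java
/**
 * Toy CPU model of the two deferred passes. The texel layout, the IDs and
 * the lighting math are illustrative assumptions, not the engine's shaders.
 */
final class DeferredSketch {
    static final int NONE = 0;            // cleared texel: no geometry written
    static final int LEGACY_LIGHTING = 1; // hypothetical ID
    static final int UNLIT = 3;           // hypothetical ID

    /** One texel of a toy GBuffer. */
    static final class Texel {
        int shadingModelId = NONE;
        float[] albedo = new float[3];
        float[] normal = new float[3];
    }

    private DeferredSketch() {}

    /** GBuffer pass: store the shading model ID and the data that model needs. */
    static void gbufferPass(Texel t, int shadingModelId, float[] albedo, float[] normal) {
        t.shadingModelId = shadingModelId;
        t.albedo = albedo.clone();
        t.normal = normal.clone();
    }

    /** Deferred shading pass: read the ID back and shade with the matching model. */
    static float[] deferredShadingPass(Texel t, float[] lightDir) {
        switch (t.shadingModelId) {
            case LEGACY_LIGHTING: {
                // Lambert diffuse term only, standing in for full Phong lighting.
                float nDotL = Math.max(0f, dot(t.normal, lightDir));
                return new float[] {t.albedo[0] * nDotL, t.albedo[1] * nDotL, t.albedo[2] * nDotL};
            }
            case UNLIT:
                // The stored color is already the final color.
                return t.albedo.clone();
            default:
                // Background or an unknown model: output black.
                return new float[] {0f, 0f, 0f};
        }
    }

    private static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }
}
```

The design choice this illustrates is that the shading pass never needs to know which material drew a pixel; the ID in the GBuffer is enough to pick the lighting code.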

To keep things general, the GBuffer allocates 4 render targets (RTs) and 1 depth buffer. Let’s first look at the GBuffer for LEGACY_LIGHTING:

Next, the GBuffer for STANDARD_LIGHTING:

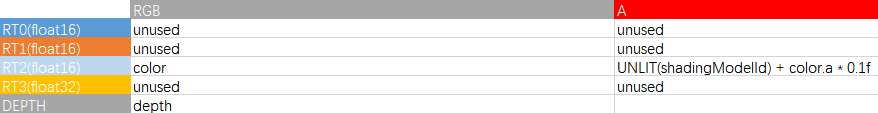

The GBuffer for UNLIT:

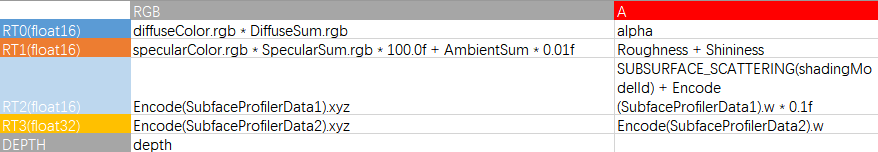

Finally, the GBuffer for SUBSURFACE_SCATTERING:

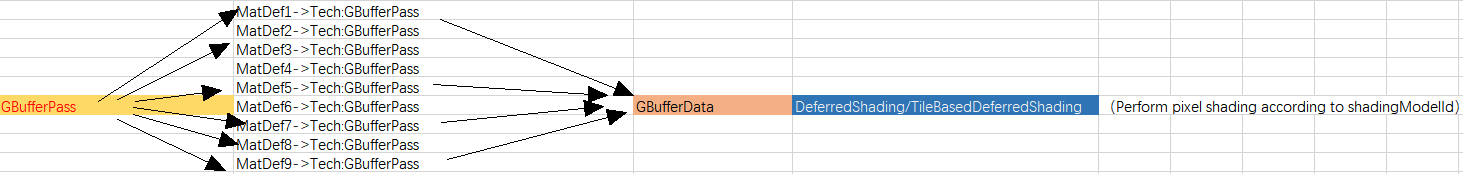
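Since every layout shares the same 4 RTs + 1 depth, the deferred shading pass has to tell the models apart from the data itself. A common way to do that (an assumption here, not necessarily what the layouts above use) is to store a small shading model ID in an 8-bit normalized channel of one RT. This minimal Java sketch, with hypothetical names, simulates that round trip; which RT and channel actually hold the ID is not something this sketch claims to know.

```java
/**
 * Hypothetical helpers for a GBuffer made of four color render targets
 * (RT0..RT3) and one depth target. They show how a small shading model ID
 * survives a round trip through an 8-bit normalized (RGBA8) channel.
 * Which RT and channel hold the ID is an assumption of this sketch.
 */
final class GBufferIdPacking {
    static final int COLOR_TARGET_COUNT = 4; // RT0..RT3
    static final int DEPTH_TARGET_COUNT = 1; // one depth buffer

    private GBufferIdPacking() {}

    /** GBuffer pass side: turn an ID in [0, 255] into a normalized channel value. */
    static float encodeId(int id) {
        if (id < 0 || id > 255) {
            throw new IllegalArgumentException("ID must fit in 8 bits: " + id);
        }
        return id / 255f;
    }

    /** Simulates what an RGBA8 target stores: the value clamped and rounded to 1/255 steps. */
    static float storeAsUnorm8(float value) {
        float clamped = Math.max(0f, Math.min(1f, value));
        return Math.round(clamped * 255f) / 255f;
    }

    /** Deferred shading pass side: rounding undoes the 8-bit quantization. */
    static int decodeId(float channelValue) {
        return Math.round(channelValue * 255f);
    }
}
```

In GLSL the same idea is writing `float(id) / 255.0` into the channel and recovering the ID by rounding `value * 255.0`; rounding matters because the stored value is quantized.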

The overall flow is as follows:

If your custom shader uses a shading model that matches one of the built-in ones (Lighting, PBRLighting, or Unlit), you only need to add a Technique named GBufferPass to your custom material and pack data into GBufferData following the format specified for that shading model.
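The key contract here is the technique name. As a minimal sketch of how a pipeline could use it, assuming a material without a GBufferPass technique simply falls back to forward rendering (my assumption, not something stated above), the routing decision might look like this. All types are toy stand-ins, not jME3 classes.

```java
import java.util.Set;

/**
 * Hypothetical routing rule: a material takes the deferred path only if its
 * definition declares a technique named "GBufferPass". The forward fallback
 * and all type names here are assumptions, not jME3 classes.
 */
final class RenderPathRouter {
    static final String GBUFFER_TECHNIQUE = "GBufferPass";

    enum Path { DEFERRED, FORWARD }

    /** Toy stand-in for a material definition: just its technique names. */
    static final class MaterialDefInfo {
        final Set<String> techniqueNames;

        MaterialDefInfo(Set<String> techniqueNames) {
            this.techniqueNames = techniqueNames;
        }
    }

    private RenderPathRouter() {}

    static Path choosePath(MaterialDefInfo def) {
        // A material without a GBufferPass cannot fill the GBuffer,
        // so in this sketch it is drawn with the forward path instead.
        return def.techniqueNames.contains(GBUFFER_TECHNIQUE) ? Path.DEFERRED : Path.FORWARD;
    }
}
```

Because only the name is checked, a custom material stays free to keep its other techniques (for example its forward one) unchanged.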

However, if you need a custom shading model, you currently have to modify the global shaders DeferredShading.frag and TileBasedDeferredShading.frag in the engine source code.

I’m seriously considering providing an external interface that lets JME3 users copy DeferredShading.frag and TileBasedDeferredShading.frag, modify them, and set them directly on the pipeline to override the default global shaders. That way, adding a custom shading model would not require modifying the engine source code.
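To make that proposal a bit more concrete, here is a minimal Java sketch of what such an override hook could look like. Every name in it is hypothetical (no such API exists yet); the idea is only that the pipeline keeps a default asset path for each global deferred shader, and a user-supplied copy takes precedence once set.

```java
import java.util.EnumMap;
import java.util.Map;

/**
 * Hypothetical sketch of the proposed override hook. The pipeline keeps a
 * default asset path for each global deferred shader, and a user-supplied
 * path (a copied and edited version of the shader) takes precedence.
 */
final class GlobalShaderOverrides {
    enum GlobalShader { DEFERRED_SHADING, TILE_BASED_DEFERRED_SHADING }

    private final Map<GlobalShader, String> defaults = new EnumMap<>(GlobalShader.class);
    private final Map<GlobalShader, String> overrides = new EnumMap<>(GlobalShader.class);

    GlobalShaderOverrides(String deferredShadingDefault, String tileBasedDefault) {
        defaults.put(GlobalShader.DEFERRED_SHADING, deferredShadingDefault);
        defaults.put(GlobalShader.TILE_BASED_DEFERRED_SHADING, tileBasedDefault);
    }

    /** Replaces one global shader with a user copy, e.g. from the game's own assets. */
    void setOverride(GlobalShader shader, String assetPath) {
        overrides.put(shader, assetPath);
    }

    /** Goes back to the shader shipped with the engine. */
    void clearOverride(GlobalShader shader) {
        overrides.remove(shader);
    }

    /** The path the pipeline would load when it builds the deferred shading pass. */
    String resolve(GlobalShader shader) {
        return overrides.getOrDefault(shader, defaults.get(shader));
    }
}
```

One consequence of this design: an overriding shader still has to decode the same GBuffer layout that the GBufferPass techniques write, so a user copy should keep the built-in models’ branches intact and only add new ones.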