Hi there!

When importing a glTF model from Blender, I get this error in the JME SDK. It seems that something goes wrong with triangulating the faces of the mesh, even though I have already triangulated all the faces in Blender.

My mesh:

How did you determine that? I didn’t see anything about it in the output.

JME won’t do any triangulating.

Well, the message is simple, but the reason is not.

Maybe your glTF is linking a texture from some external location. Or maybe you are using the importer incorrectly.

Myself, when using the SDK, I don't use the importer; I just copy the glTF into the project and convert it to j3o when needed.
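A quick way to check the first guess (a texture linked from an external location) is to look at the `uri` fields in the exported `.gltf`. In glTF 2.0, images and buffers point at their data either with an embedded `data:` URI or with a URI that is normally relative to the `.gltf` file. The sketch below is only a rough diagnostic: it assumes simple string matching is good enough (it does not parse JSON properly), and the class name `GltfUriCheck` is made up for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Rough check for glTF images/buffers that live outside the model's folder (hypothetical helper). */
final class GltfUriCheck {
    // Matches "uri": "..." entries; regex only, not a real JSON parser.
    private static final Pattern URI_FIELD = Pattern.compile("\"uri\"\\s*:\\s*\"([^\"]*)\"");

    /** Returns every uri that is neither embedded data nor a plain relative path. */
    static List<String> externalUris(String gltfJson) {
        List<String> result = new ArrayList<>();
        Matcher m = URI_FIELD.matcher(gltfJson);
        while (m.find()) {
            String uri = m.group(1);
            if (uri.startsWith("data:")) {
                continue; // embedded base64 data always travels with the file
            }
            if (isExternal(uri)) {
                result.add(uri);
            }
        }
        return result;
    }

    private static boolean isExternal(String uri) {
        // Absolute paths, anything with a scheme or drive letter (file:, http:, C:),
        // and paths that start by climbing out of the model's folder.
        return uri.startsWith("/")
                || uri.startsWith("\\")
                || uri.matches("^[A-Za-z][A-Za-z0-9+.-]*:.*")
                || uri.startsWith("../");
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: java GltfUriCheck model.gltf");
            return;
        }
        String json = Files.readString(Path.of(args[0]));
        List<String> external = externalUris(json);
        if (external.isEmpty()) {
            System.out.println("no external uris found");
        } else {
            external.forEach(uri -> System.out.println("external: " + uri));
        }
    }
}
```

If this prints nothing for the exported file, the external-texture theory can probably be ruled out.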

Because the same problem happened to me before, and my solution was to use a triangle fan face rather than an N-gon face for the cap of the mesh in Blender after generating a cylinder, and JME imported that successfully. Now I am doing the same thing, plus selecting all faces and triangulating them after modelling, and it does not work.
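For reference, a triangle fan cap is just the N-gon split into triangles that all share its first vertex, so a face with n vertices becomes n - 2 triangles. A minimal sketch of that index layout, assuming a convex polygon whose vertex indices are listed in order (the class and method names are hypothetical, not Blender or jME API):

```java
import java.util.Arrays;

/** Fan triangulation of a convex polygon given as an ordered list of vertex indices. */
final class FanTriangulation {

    /**
     * Returns a flat index array (3 entries per triangle) fanning out from polygon[0].
     * Only valid for convex polygons; concave faces need something like ear clipping.
     */
    static int[] fan(int[] polygon) {
        if (polygon.length < 3) {
            throw new IllegalArgumentException("a face needs at least 3 vertices");
        }
        int triangles = polygon.length - 2;
        int[] indices = new int[triangles * 3];
        for (int t = 0; t < triangles; t++) {
            indices[3 * t] = polygon[0];         // shared hub vertex
            indices[3 * t + 1] = polygon[t + 1];
            indices[3 * t + 2] = polygon[t + 2]; // keeps the original winding order
        }
        return indices;
    }

    public static void main(String[] args) {
        // An 8-sided cylinder cap becomes 6 triangles sharing vertex 0.
        int[] cap = {0, 1, 2, 3, 4, 5, 6, 7};
        // prints [0, 1, 2, 0, 2, 3, 0, 3, 4, 0, 4, 5, 0, 5, 6, 0, 6, 7]
        System.out.println(Arrays.toString(fan(cap)));
    }
}
```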

@oxplay2 I created the mesh normally in Blender with no texturing, just modelling.

Yes, me too, but the same problem exists. The importer and copying the object give the same result; I only gave the importer a try to see whether the object shows up in the preview, and it doesn't. The jME editor can't render it either.

I know that nothing goes wrong with jME's j3o binary converter, but something is done wrong every time I export from Blender and import into jME.

If you use something like JMEC to convert it to j3o from the command line then maybe it will give more information. I kind of doubt it but it might be worth a try.

@pspeed I will try that and tell you if it works, thank you.

I don’t know if it will work any better since it’s using the same basic JME code (though there is always the chance)… but sometimes the SDK swallows useful error information. So there’s maybe a 20% chance that JMEC may provide better error information.

Edit: but for example, if there is an exception then we will see the stack trace 100%.

About the SDK swallowing errors: yes, I agree.

@oxplay2 I found that when copying and converting to j3o format, and it only appears rarely.

EDIT: I was just doing a trial to be sure and found this:

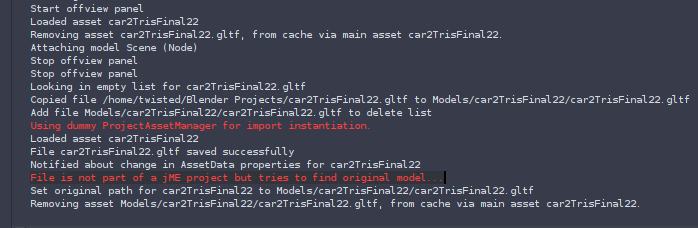

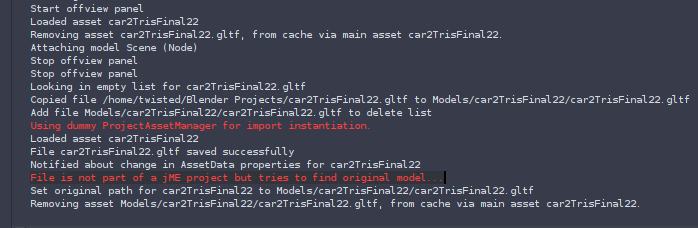

EDIT2: the full log

removing asset Models/car2TrisFinal22.gltf, from cache via main asset car2TrisFinal22.

Removing asset Common/MatDefs/Light/PBRLighting.j3md, from cache via main asset car2TrisFinal22.

Loaded asset car2TrisFinal22

Bad compile of:

1 #version 110

2 #define SRGB 1

3 #define FRAGMENT_SHADER 1

4 #define NORMAL_TYPE -1.0

5 #define SINGLE_PASS_LIGHTING 1

6 #define NB_LIGHTS 3

7 #define NB_PROBES 0

8 #extension GL_ARB_shader_texture_lod : enable

9 // -- begin import Common/ShaderLib/GLSLCompat.glsllib --

10 #if defined GL_ES

11 # define hfloat highp float

12 # define hvec2 highp vec2

13 # define hvec3 highp vec3

14 # define hvec4 highp vec4

15 # define lfloat lowp float

16 # define lvec2 lowp vec2

17 # define lvec3 lowp vec3

18 # define lvec4 lowp vec4

19 #else

20 # define hfloat float

21 # define hvec2 vec2

22 # define hvec3 vec3

23 # define hvec4 vec4

24 # define lfloat float

25 # define lvec2 vec2

26 # define lvec3 vec3

27 # define lvec4 vec4

28 #endif

29

30 #if __VERSION__ >= 130

31 # ifdef GL_ES

32 out highp vec4 outFragColor;

33 # else

34 out vec4 outFragColor;

35 #endif

36 # define texture1D texture

37 # define texture2D texture

38 # define texture3D texture

39 # define textureCube texture

40 # define texture2DLod textureLod

41 # define textureCubeLod textureLod

42 # define texture2DArray texture

43 # if defined VERTEX_SHADER

44 # define varying out

45 # define attribute in

46 # elif defined FRAGMENT_SHADER

47 # define varying in

48 # define gl_FragColor outFragColor

49 # endif

50 #else

51 # define isnan(val) !(val<0.0||val>0.0||val==0.0)

52 #endif

53

54

55 // -- end import Common/ShaderLib/GLSLCompat.glsllib --

56 // -- begin import Common/ShaderLib/PBR.glsllib --

57 #ifndef PI

58 #define PI 3.14159265358979323846264

59 #endif

60

61 //Specular fresnel computation

62 vec3 F_Shlick(float vh, vec3 F0){

63 float fresnelFact = pow(2.0, (-5.55473*vh - 6.98316) * vh);

64 return mix(F0, vec3(1.0, 1.0, 1.0), fresnelFact);

65 }

66

67 vec3 sphericalHarmonics( const in vec3 normal, const vec3 sph[9] ){

68 float x = normal.x;

69 float y = normal.y;

70 float z = normal.z;

71

72 vec3 result = (

73 sph[0] +

74

75 sph[1] * y +

76 sph[2] * z +

77 sph[3] * x +

78

79 sph[4] * y * x +

80 sph[5] * y * z +

81 sph[6] * (3.0 * z * z - 1.0) +

82 sph[7] * (z * x) +

83 sph[8] * (x*x - y*y)

84 );

85

86 return max(result, vec3(0.0));

87 }

88

89

90 float PBR_ComputeDirectLight(vec3 normal, vec3 lightDir, vec3 viewDir,

91 vec3 lightColor, vec3 fZero, float roughness, float ndotv,

92 out vec3 outDiffuse, out vec3 outSpecular){

93 // Compute halfway vector.

94 vec3 halfVec = normalize(lightDir + viewDir);

95

96 // Compute ndotl, ndoth, vdoth terms which are needed later.

97 float ndotl = max( dot(normal, lightDir), 0.0);

98 float ndoth = max( dot(normal, halfVec), 0.0);

99 float hdotv = max( dot(viewDir, halfVec), 0.0);

100

101 // Compute diffuse using energy-conserving Lambert.

102 // Alternatively, use Oren-Nayar for really rough

103 // materials or if you have lots of processing power ...

104 outDiffuse = vec3(ndotl) * lightColor;

105

106 //cook-torrence, microfacet BRDF : http://blog.selfshadow.com/publications/s2013-shading-course/karis/s2013_pbs_epic_notes_v2.pdf

107

108 float alpha = roughness * roughness;

109

110 //D, GGX normaal Distribution function

111 float alpha2 = alpha * alpha;

112 float sum = ((ndoth * ndoth) * (alpha2 - 1.0) + 1.0);

113 float denom = PI * sum * sum;

114 float D = alpha2 / denom;

115

116 // Compute Fresnel function via Schlick's approximation.

117 vec3 fresnel = F_Shlick(hdotv, fZero);

118

119 //G Shchlick GGX Gometry shadowing term, k = alpha/2

120 float k = alpha * 0.5;

121

122 /*

123 //classic Schlick ggx

124 float G_V = ndotv / (ndotv * (1.0 - k) + k);

125 float G_L = ndotl / (ndotl * (1.0 - k) + k);

126 float G = ( G_V * G_L );

127

128 float specular =(D* fresnel * G) /(4 * ndotv);

129 */

130

131 // UE4 way to optimise shlick GGX Gometry shadowing term

132 //http://graphicrants.blogspot.co.uk/2013/08/specular-brdf-reference.html

133 float G_V = ndotv + sqrt( (ndotv - ndotv * k) * ndotv + k );

134 float G_L = ndotl + sqrt( (ndotl - ndotl * k) * ndotl + k );

135 // the max here is to avoid division by 0 that may cause some small glitches.

136 float G = 1.0/max( G_V * G_L ,0.01);

137

138 float specular = D * G * ndotl;

139

140 outSpecular = vec3(specular) * fresnel * lightColor;

141 return hdotv;

142 }

143

144 vec3 integrateBRDFApprox( const in vec3 specular, float roughness, float NoV ){

145 const vec4 c0 = vec4( -1, -0.0275, -0.572, 0.022 );

146 const vec4 c1 = vec4( 1, 0.0425, 1.04, -0.04 );

147 vec4 r = roughness * c0 + c1;

148 float a004 = min( r.x * r.x, exp2( -9.28 * NoV ) ) * r.x + r.y;

149 vec2 AB = vec2( -1.04, 1.04 ) * a004 + r.zw;

150 return specular * AB.x + AB.y;

151 }

152

153 // from Sebastien Lagarde https://seblagarde.files.wordpress.com/2015/07/course_notes_moving_frostbite_to_pbr_v32.pdf page 69

154 vec3 getSpecularDominantDir(const in vec3 N, const in vec3 R, const in float realRoughness){

155 vec3 dominant;

156

157 float smoothness = 1.0 - realRoughness;

158 float lerpFactor = smoothness * (sqrt(smoothness) + realRoughness);

159 // The result is not normalized as we fetch in a cubemap

160 dominant = mix(N, R, lerpFactor);

161

162 return dominant;

163 }

164

165 vec3 ApproximateSpecularIBL(samplerCube envMap,sampler2D integrateBRDF, vec3 SpecularColor , float Roughness, float ndotv, vec3 refVec, float nbMipMaps){

166 float Lod = sqrt( Roughness ) * (nbMipMaps - 1.0);

167 vec3 PrefilteredColor = textureCubeLod(envMap, refVec.xyz,Lod).rgb;

168 vec2 EnvBRDF = texture2D(integrateBRDF,vec2(Roughness, ndotv)).rg;

169 return PrefilteredColor * ( SpecularColor * EnvBRDF.x+ EnvBRDF.y );

170 }

171

172 vec3 ApproximateSpecularIBLPolynomial(samplerCube envMap, vec3 SpecularColor , float Roughness, float ndotv, vec3 refVec, float nbMipMaps){

173 float Lod = sqrt( Roughness ) * (nbMipMaps - 1.0);

174 vec3 PrefilteredColor = textureCubeLod(envMap, refVec.xyz, Lod).rgb;

175 return PrefilteredColor * integrateBRDFApprox(SpecularColor, Roughness, ndotv);

176 }

177

178

179 float renderProbe(vec3 viewDir, vec3 worldPos, vec3 normal, vec3 norm, float Roughness, vec4 diffuseColor, vec4 specularColor, float ndotv, vec3 ao, mat4 lightProbeData,vec3 shCoeffs[9],samplerCube prefEnvMap, inout vec3 color ){

180

181 // lightProbeData is a mat4 with this layout

182 // 3x3 rot mat|

183 // 0 1 2 | 3

184 // 0 | ax bx cx | px | )

185 // 1 | ay by cy | py | probe position

186 // 2 | az bz cz | pz | )

187 // --|----------|

188 // 3 | sx sy sz sp | -> 1/probe radius + nbMipMaps

189 // --scale--

190 // parallax fix for spherical / obb bounds and probe blending from

191 // from https://seblagarde.wordpress.com/2012/09/29/image-based-lighting-approaches-and-parallax-corrected-cubemap/

192 vec3 rv = reflect(-viewDir, normal);

193 vec4 probePos = lightProbeData[3];

194 float invRadius = fract( probePos.w);

195 float nbMipMaps = probePos.w - invRadius;

196 vec3 direction = worldPos - probePos.xyz;

197 float ndf = 0.0;

198

199 if(lightProbeData[0][3] != 0.0){

200 // oriented box probe

201 mat3 wToLocalRot = mat3(lightProbeData);

202 wToLocalRot = inverse(wToLocalRot);

203 vec3 scale = vec3(lightProbeData[0][3], lightProbeData[1][3], lightProbeData[2][3]);

204 #if NB_PROBES >= 2

205 // probe blending

206 // compute fragment position in probe local space

207 vec3 localPos = wToLocalRot * worldPos;

208 localPos -= probePos.xyz;

209 // compute normalized distance field

210 vec3 localDir = abs(localPos);

211 localDir /= scale;

212 ndf = max(max(localDir.x, localDir.y), localDir.z);

213 #endif

214 // parallax fix

215 vec3 rayLs = wToLocalRot * rv;

216 rayLs /= scale;

217

218 vec3 positionLs = worldPos - probePos.xyz;

219 positionLs = wToLocalRot * positionLs;

220 positionLs /= scale;

221

222 vec3 unit = vec3(1.0);

223 vec3 firstPlaneIntersect = (unit - positionLs) / rayLs;

224 vec3 secondPlaneIntersect = (-unit - positionLs) / rayLs;

225 vec3 furthestPlane = max(firstPlaneIntersect, secondPlaneIntersect);

226 float distance = min(min(furthestPlane.x, furthestPlane.y), furthestPlane.z);

227

228 vec3 intersectPositionWs = worldPos + rv * distance;

229 rv = intersectPositionWs - probePos.xyz;

230

231 } else {

232 // spherical probe

233 // paralax fix

234 rv = invRadius * direction + rv;

235

236 #if NB_PROBES >= 2

237 // probe blending

238 float dist = sqrt(dot(direction, direction));

239 ndf = dist * invRadius;

240 #endif

241 }

242

243 vec3 indirectDiffuse = vec3(0.0);

244 vec3 indirectSpecular = vec3(0.0);

245 indirectDiffuse = sphericalHarmonics(normal.xyz, shCoeffs) * diffuseColor.rgb;

246 vec3 dominantR = getSpecularDominantDir( normal, rv.xyz, Roughness * Roughness );

247 indirectSpecular = ApproximateSpecularIBLPolynomial(prefEnvMap, specularColor.rgb, Roughness, ndotv, dominantR, nbMipMaps);

248

249 #ifdef HORIZON_FADE

250 //horizon fade from http://marmosetco.tumblr.com/post/81245981087

251 float horiz = dot(rv, norm);

252 float horizFadePower = 1.0 - Roughness;

253 horiz = clamp( 1.0 + horizFadePower * horiz, 0.0, 1.0 );

254 horiz *= horiz;

255 indirectSpecular *= vec3(horiz);

256 #endif

257

258 vec3 indirectLighting = (indirectDiffuse + indirectSpecular) * ao;

259

260 color = indirectLighting * step( 0.0, probePos.w);

261 return ndf;

262 }

263

264

265

266

267

268 // -- end import Common/ShaderLib/PBR.glsllib --

269 // -- begin import Common/ShaderLib/Parallax.glsllib --

270 #if (defined(PARALLAXMAP) || (defined(NORMALMAP_PARALLAX) && defined(NORMALMAP))) && !defined(VERTEX_LIGHTING)

271 vec2 steepParallaxOffset(sampler2D parallaxMap, vec3 vViewDir,vec2 texCoord,float parallaxScale){

272 vec2 vParallaxDirection = normalize( vViewDir.xy );

273

274 // The length of this vector determines the furthest amount of displacement: (Ati's comment)

275 float fLength = length( vViewDir );

276 float fParallaxLength = sqrt( fLength * fLength - vViewDir.z * vViewDir.z ) / vViewDir.z;

277

278 // Compute the actual reverse parallax displacement vector: (Ati's comment)

279 vec2 vParallaxOffsetTS = vParallaxDirection * fParallaxLength;

280

281 // Need to scale the amount of displacement to account for different height ranges

282 // in height maps. This is controlled by an artist-editable parameter: (Ati's comment)

283 parallaxScale *=0.3;

284 vParallaxOffsetTS *= parallaxScale;

285

286 vec3 eyeDir = normalize(vViewDir).xyz;

287

288 float nMinSamples = 6.0;

289 float nMaxSamples = 1000.0 * parallaxScale;

290 float nNumSamples = mix( nMinSamples, nMaxSamples, 1.0 - eyeDir.z ); //In reference shader: int nNumSamples = (int)(lerp( nMinSamples, nMaxSamples, dot( eyeDirWS, N ) ));

291 float fStepSize = 1.0 / nNumSamples;

292 float fCurrHeight = 0.0;

293 float fPrevHeight = 1.0;

294 float fNextHeight = 0.0;

295 float nStepIndex = 0.0;

296 vec2 vTexOffsetPerStep = fStepSize * vParallaxOffsetTS;

297 vec2 vTexCurrentOffset = texCoord;

298 float fCurrentBound = 1.0;

299 float fParallaxAmount = 0.0;

300

301 while ( nStepIndex < nNumSamples && fCurrHeight <= fCurrentBound ) {

302 vTexCurrentOffset -= vTexOffsetPerStep;

303 fPrevHeight = fCurrHeight;

304

305

306 #ifdef NORMALMAP_PARALLAX

307 //parallax map is stored in the alpha channel of the normal map

308 fCurrHeight = texture2D( parallaxMap, vTexCurrentOffset).a;

309 #else

310 //parallax map is a texture

311 fCurrHeight = texture2D( parallaxMap, vTexCurrentOffset).r;

312 #endif

313

314 fCurrentBound -= fStepSize;

315 nStepIndex+=1.0;

316 }

317 vec2 pt1 = vec2( fCurrentBound, fCurrHeight );

318 vec2 pt2 = vec2( fCurrentBound + fStepSize, fPrevHeight );

319

320 float fDelta2 = pt2.x - pt2.y;

321 float fDelta1 = pt1.x - pt1.y;

322

323 float fDenominator = fDelta2 - fDelta1;

324

325 fParallaxAmount = (pt1.x * fDelta2 - pt2.x * fDelta1 ) / fDenominator;

326

327 vec2 vParallaxOffset = vParallaxOffsetTS * (1.0 - fParallaxAmount );

328 return texCoord - vParallaxOffset;

329 }

330

331 vec2 classicParallaxOffset(sampler2D parallaxMap, vec3 vViewDir,vec2 texCoord,float parallaxScale){

332 float h;

333 #ifdef NORMALMAP_PARALLAX

334 //parallax map is stored in the alpha channel of the normal map

335 h = texture2D(parallaxMap, texCoord).a;

336 #else

337 //parallax map is a texture

338 h = texture2D(parallaxMap, texCoord).r;

339 #endif

340 float heightScale = parallaxScale;

341 float heightBias = heightScale* -0.6;

342 vec3 normView = normalize(vViewDir);

343 h = (h * heightScale + heightBias) * normView.z;

344 return texCoord + (h * normView.xy);

345 }

346 #endif

347 // -- end import Common/ShaderLib/Parallax.glsllib --

348 // -- begin import Common/ShaderLib/Lighting.glsllib --

349 /*Common function for light calculations*/

350

351

352 /*

353 * Computes light direction

354 * lightType should be 0.0,1.0,2.0, repectively for Directional, point and spot lights.

355 * Outputs the light direction and the light half vector.

356 */

357 void lightComputeDir(in vec3 worldPos, in float lightType, in vec4 position, out vec4 lightDir, out vec3 lightVec){

358 float posLight = step(0.5, lightType);

359 vec3 tempVec = position.xyz * sign(posLight - 0.5) - (worldPos * posLight);

360 lightVec = tempVec;

361 float dist = length(tempVec);

362 #ifdef SRGB

363 lightDir.w = (1.0 - position.w * dist) / (1.0 + position.w * dist * dist);

364 lightDir.w = clamp(lightDir.w, 1.0 - posLight, 1.0);

365 #else

366 lightDir.w = clamp(1.0 - position.w * dist * posLight, 0.0, 1.0);

367 #endif

368 lightDir.xyz = tempVec / vec3(dist);

369 }

370

371 /*

372 * Computes the spot falloff for a spotlight

373 */

374 float computeSpotFalloff(in vec4 lightDirection, in vec3 lightVector){

375 vec3 L=normalize(lightVector);

376 vec3 spotdir = normalize(lightDirection.xyz);

377 float curAngleCos = dot(-L, spotdir);

378 float innerAngleCos = floor(lightDirection.w) * 0.001;

379 float outerAngleCos = fract(lightDirection.w);

380 float innerMinusOuter = innerAngleCos - outerAngleCos;

381 float falloff = clamp((curAngleCos - outerAngleCos) / innerMinusOuter, step(lightDirection.w, 0.001), 1.0);

382

383 #ifdef SRGB

384 // Use quadratic falloff (notice the ^4)

385 return pow(clamp((curAngleCos - outerAngleCos) / innerMinusOuter, 0.0, 1.0), 4.0);

386 #else

387 // Use linear falloff

388 return falloff;

389 #endif

390 }

391

392 // -- end import Common/ShaderLib/Lighting.glsllib --

393

394 varying vec2 texCoord;

395 #ifdef SEPARATE_TEXCOORD

396 varying vec2 texCoord2;

397 #endif

398

399 varying vec4 Color;

400

401 uniform vec4 g_LightData[NB_LIGHTS];

402 uniform vec3 g_CameraPosition;

403 uniform vec4 g_AmbientLightColor;

404

405 uniform float m_Roughness;

406 uniform float m_Metallic;

407

408 varying vec3 wPosition;

409

410

411 #if NB_PROBES >= 1

412 uniform samplerCube g_PrefEnvMap;

413 uniform vec3 g_ShCoeffs[9];

414 uniform mat4 g_LightProbeData;

415 #endif

416 #if NB_PROBES >= 2

417 uniform samplerCube g_PrefEnvMap2;

418 uniform vec3 g_ShCoeffs2[9];

419 uniform mat4 g_LightProbeData2;

420 #endif

421 #if NB_PROBES == 3

422 uniform samplerCube g_PrefEnvMap3;

423 uniform vec3 g_ShCoeffs3[9];

424 uniform mat4 g_LightProbeData3;

425 #endif

426

427 #ifdef BASECOLORMAP

428 uniform sampler2D m_BaseColorMap;

429 #endif

430

431 #ifdef USE_PACKED_MR

432 uniform sampler2D m_MetallicRoughnessMap;

433 #else

434 #ifdef METALLICMAP

435 uniform sampler2D m_MetallicMap;

436 #endif

437 #ifdef ROUGHNESSMAP

438 uniform sampler2D m_RoughnessMap;

439 #endif

440 #endif

441

442 #ifdef EMISSIVE

443 uniform vec4 m_Emissive;

444 #endif

445 #ifdef EMISSIVEMAP

446 uniform sampler2D m_EmissiveMap;

447 #endif

448 #if defined(EMISSIVE) || defined(EMISSIVEMAP)

449 uniform float m_EmissivePower;

450 uniform float m_EmissiveIntensity;

451 #endif

452

453 #ifdef SPECGLOSSPIPELINE

454

455 uniform vec4 m_Specular;

456 uniform float m_Glossiness;

457 #ifdef USE_PACKED_SG

458 uniform sampler2D m_SpecularGlossinessMap;

459 #else

460 uniform sampler2D m_SpecularMap;

461 uniform sampler2D m_GlossinessMap;

462 #endif

463 #endif

464

465 #ifdef PARALLAXMAP

466 uniform sampler2D m_ParallaxMap;

467 #endif

468 #if (defined(PARALLAXMAP) || (defined(NORMALMAP_PARALLAX) && defined(NORMALMAP)))

469 uniform float m_ParallaxHeight;

470 #endif

471

472 #ifdef LIGHTMAP

473 uniform sampler2D m_LightMap;

474 #endif

475

476 #if defined(NORMALMAP) || defined(PARALLAXMAP)

477 uniform sampler2D m_NormalMap;

478 varying vec4 wTangent;

479 #endif

480 varying vec3 wNormal;

481

482 #ifdef DISCARD_ALPHA

483 uniform float m_AlphaDiscardThreshold;

484 #endif

485

486 void main(){

487 vec2 newTexCoord;

488 vec3 viewDir = normalize(g_CameraPosition - wPosition);

489

490 vec3 norm = normalize(wNormal);

491 #if defined(NORMALMAP) || defined(PARALLAXMAP)

492 vec3 tan = normalize(wTangent.xyz);

493 mat3 tbnMat = mat3(tan, wTangent.w * cross( (norm), (tan)), norm);

494 #endif

495

496 #if (defined(PARALLAXMAP) || (defined(NORMALMAP_PARALLAX) && defined(NORMALMAP)))

497 vec3 vViewDir = viewDir * tbnMat;

498 #ifdef STEEP_PARALLAX

499 #ifdef NORMALMAP_PARALLAX

500 //parallax map is stored in the alpha channel of the normal map

501 newTexCoord = steepParallaxOffset(m_NormalMap, vViewDir, texCoord, m_ParallaxHeight);

502 #else

503 //parallax map is a texture

504 newTexCoord = steepParallaxOffset(m_ParallaxMap, vViewDir, texCoord, m_ParallaxHeight);

505 #endif

506 #else

507 #ifdef NORMALMAP_PARALLAX

508 //parallax map is stored in the alpha channel of the normal map

509 newTexCoord = classicParallaxOffset(m_NormalMap, vViewDir, texCoord, m_ParallaxHeight);

510 #else

511 //parallax map is a texture

512 newTexCoord = classicParallaxOffset(m_ParallaxMap, vViewDir, texCoord, m_ParallaxHeight);

513 #endif

514 #endif

515 #else

516 newTexCoord = texCoord;

517 #endif

518

519 #ifdef BASECOLORMAP

520 vec4 albedo = texture2D(m_BaseColorMap, newTexCoord) * Color;

521 #else

522 vec4 albedo = Color;

523 #endif

524

525 #ifdef USE_PACKED_MR

526 vec2 rm = texture2D(m_MetallicRoughnessMap, newTexCoord).gb;

527 float Roughness = rm.x * max(m_Roughness, 1e-4);

528 float Metallic = rm.y * max(m_Metallic, 0.0);

529 #else

530 #ifdef ROUGHNESSMAP

531 float Roughness = texture2D(m_RoughnessMap, newTexCoord).r * max(m_Roughness, 1e-4);

532 #else

533 float Roughness = max(m_Roughness, 1e-4);

534 #endif

535 #ifdef METALLICMAP

536 float Metallic = texture2D(m_MetallicMap, newTexCoord).r * max(m_Metallic, 0.0);

537 #else

538 float Metallic = max(m_Metallic, 0.0);

539 #endif

540 #endif

541

542 float alpha = albedo.a;

543

544 #ifdef DISCARD_ALPHA

545 if(alpha < m_AlphaDiscardThreshold){

546 discard;

547 }

548 #endif

549

550 // ***********************

551 // Read from textures

552 // ***********************

553 #if defined(NORMALMAP)

554 vec4 normalHeight = texture2D(m_NormalMap, newTexCoord);

555 //Note the -2.0 and -1.0. We invert the green channel of the normal map,

556 //as it's complient with normal maps generated with blender.

557 //see http://hub.jmonkeyengine.org/forum/topic/parallax-mapping-fundamental-bug/#post-256898

558 //for more explanation.

559 vec3 normal = normalize((normalHeight.xyz * vec3(2.0, NORMAL_TYPE * 2.0, 2.0) - vec3(1.0, NORMAL_TYPE * 1.0, 1.0)));

560 normal = normalize(tbnMat * normal);

561 //normal = normalize(normal * inverse(tbnMat));

562 #else

563 vec3 normal = norm;

564 #endif

565

566 #ifdef SPECGLOSSPIPELINE

567

568 #ifdef USE_PACKED_SG

569 vec4 specularColor = texture2D(m_SpecularGlossinessMap, newTexCoord);

570 float glossiness = specularColor.a * m_Glossiness;

571 specularColor *= m_Specular;

572 #else

573 #ifdef SPECULARMAP

574 vec4 specularColor = texture2D(m_SpecularMap, newTexCoord);

575 #else

576 vec4 specularColor = vec4(1.0);

577 #endif

578 #ifdef GLOSSINESSMAP

579 float glossiness = texture2D(m_GlossinessMap, newTexCoord).r * m_Glossiness;

580 #else

581 float glossiness = m_Glossiness;

582 #endif

583 specularColor *= m_Specular;

584 #endif

585 vec4 diffuseColor = albedo;// * (1.0 - max(max(specularColor.r, specularColor.g), specularColor.b));

586 Roughness = 1.0 - glossiness;

587 vec3 fZero = specularColor.xyz;

588 #else

589 float specular = 0.5;

590 float nonMetalSpec = 0.08 * specular;

591 vec4 specularColor = (nonMetalSpec - nonMetalSpec * Metallic) + albedo * Metallic;

592 vec4 diffuseColor = albedo - albedo * Metallic;

593 vec3 fZero = vec3(specular);

594 #endif

595

596 gl_FragColor.rgb = vec3(0.0);

597 vec3 ao = vec3(1.0);

598

599 #ifdef LIGHTMAP

600 vec3 lightMapColor;

601 #ifdef SEPARATE_TEXCOORD

602 lightMapColor = texture2D(m_LightMap, texCoord2).rgb;

603 #else

604 lightMapColor = texture2D(m_LightMap, texCoord).rgb;

605 #endif

606 #ifdef AO_MAP

607 lightMapColor.gb = lightMapColor.rr;

608 ao = lightMapColor;

609 #else

610 gl_FragColor.rgb += diffuseColor.rgb * lightMapColor;

611 #endif

612 specularColor.rgb *= lightMapColor;

613 #endif

614

615

616 float ndotv = max( dot( normal, viewDir ),0.0);

617 for( int i = 0;i < NB_LIGHTS; i+=3){

618 vec4 lightColor = g_LightData[i];

619 vec4 lightData1 = g_LightData[i+1];

620 vec4 lightDir;

621 vec3 lightVec;

622 lightComputeDir(wPosition, lightColor.w, lightData1, lightDir, lightVec);

623

624 float fallOff = 1.0;

625 #if __VERSION__ >= 110

626 // allow use of control flow

627 if(lightColor.w > 1.0){

628 #endif

629 fallOff = computeSpotFalloff(g_LightData[i+2], lightVec);

630 #if __VERSION__ >= 110

631 }

632 #endif

633 //point light attenuation

634 fallOff *= lightDir.w;

635

636 lightDir.xyz = normalize(lightDir.xyz);

637 vec3 directDiffuse;

638 vec3 directSpecular;

639

640 float hdotv = PBR_ComputeDirectLight(normal, lightDir.xyz, viewDir,

641 lightColor.rgb, fZero, Roughness, ndotv,

642 directDiffuse, directSpecular);

643

644 vec3 directLighting = diffuseColor.rgb *directDiffuse + directSpecular;

645

646 gl_FragColor.rgb += directLighting * fallOff;

647 }

648

649 #if NB_PROBES >= 1

650 vec3 color1 = vec3(0.0);

651 vec3 color2 = vec3(0.0);

652 vec3 color3 = vec3(0.0);

653 float weight1 = 1.0;

654 float weight2 = 0.0;

655 float weight3 = 0.0;

656

657 float ndf = renderProbe(viewDir, wPosition, normal, norm, Roughness, diffuseColor, specularColor, ndotv, ao, g_LightProbeData, g_ShCoeffs, g_PrefEnvMap, color1);

658 #if NB_PROBES >= 2

659 float ndf2 = renderProbe(viewDir, wPosition, normal, norm, Roughness, diffuseColor, specularColor, ndotv, ao, g_LightProbeData2, g_ShCoeffs2, g_PrefEnvMap2, color2);

660 #endif

661 #if NB_PROBES == 3

662 float ndf3 = renderProbe(viewDir, wPosition, normal, norm, Roughness, diffuseColor, specularColor, ndotv, ao, g_LightProbeData3, g_ShCoeffs3, g_PrefEnvMap3, color3);

663 #endif

664

665 #if NB_PROBES >= 2

666 float invNdf = max(1.0 - ndf,0.0);

667 float invNdf2 = max(1.0 - ndf2,0.0);

668 float sumNdf = ndf + ndf2;

669 float sumInvNdf = invNdf + invNdf2;

670 #if NB_PROBES == 3

671 float invNdf3 = max(1.0 - ndf3,0.0);

672 sumNdf += ndf3;

673 sumInvNdf += invNdf3;

674 weight3 = ((1.0 - (ndf3 / sumNdf)) / (NB_PROBES - 1)) * (invNdf3 / sumInvNdf);

675 #endif

676

677 weight1 = ((1.0 - (ndf / sumNdf)) / (NB_PROBES - 1)) * (invNdf / sumInvNdf);

678 weight2 = ((1.0 - (ndf2 / sumNdf)) / (NB_PROBES - 1)) * (invNdf2 / sumInvNdf);

679

680 float weightSum = weight1 + weight2 + weight3;

681

682 weight1 /= weightSum;

683 weight2 /= weightSum;

684 weight3 /= weightSum;

685 #endif

686

687 #ifdef USE_AMBIENT_LIGHT

688 color1.rgb *= g_AmbientLightColor.rgb;

689 color2.rgb *= g_AmbientLightColor.rgb;

690 color3.rgb *= g_AmbientLightColor.rgb;

691 #endif

692 gl_FragColor.rgb += color1 * clamp(weight1,0.0,1.0) + color2 * clamp(weight2,0.0,1.0) + color3 * clamp(weight3,0.0,1.0);

693

694 #endif

695

696 #if defined(EMISSIVE) || defined (EMISSIVEMAP)

697 #ifdef EMISSIVEMAP

698 vec4 emissive = texture2D(m_EmissiveMap, newTexCoord);

699 #else

700 vec4 emissive = m_Emissive;

701 #endif

702 gl_FragColor += emissive * pow(emissive.a, m_EmissivePower) * m_EmissiveIntensity;

703 #endif

704 gl_FragColor.a = alpha;

705

706 }

```
com.jme3.renderer.RendererException: compile error in: ShaderSource[name=Common/MatDefs/Light/PBRLighting.frag, defines, type=Fragment, language=GLSL110]
0:201(21): error: cannot construct `mat3' from a matrix in GLSL 1.10 (GLSL 1.20 or GLSL ES 1.00 required)
0:202(16): error: no function with name 'inverse'
	at com.jme3.renderer.opengl.GLRenderer.updateShaderSourceData(GLRenderer.java:1476)
	at com.jme3.renderer.opengl.GLRenderer.updateShaderData(GLRenderer.java:1503)
	at com.jme3.renderer.opengl.GLRenderer.setShader(GLRenderer.java:1567)
	at com.jme3.material.logic.SinglePassAndImageBasedLightingLogic.render(SinglePassAndImageBasedLightingLogic.java:254)
	at com.jme3.material.Technique.render(Technique.java:166)
	at com.jme3.material.Material.render(Material.java:1026)
	at com.jme3.renderer.RenderManager.renderGeometry(RenderManager.java:614)
	at com.jme3.renderer.queue.RenderQueue.renderGeometryList(RenderQueue.java:266)
	at com.jme3.renderer.queue.RenderQueue.renderQueue(RenderQueue.java:305)
	at com.jme3.renderer.RenderManager.renderViewPortQueues(RenderManager.java:877)
	at com.jme3.renderer.RenderManager.flushQueue(RenderManager.java:779)
	at com.jme3.renderer.RenderManager.renderViewPort(RenderManager.java:1108)
	at com.jme3.renderer.RenderManager.render(RenderManager.java:1158)
	at com.jme3.gde.core.scene.SceneApplication.update(SceneApplication.java:330)
	at com.jme3.system.awt.AwtPanelsContext.updateInThread(AwtPanelsContext.java:200)
	at com.jme3.system.awt.AwtPanelsContext.access$100(AwtPanelsContext.java:45)
	at com.jme3.system.awt.AwtPanelsContext$AwtPanelsListener.update(AwtPanelsContext.java:69)
	at com.jme3.system.lwjgl.LwjglOffscreenBuffer.runLoop(LwjglOffscreenBuffer.java:125)
	at com.jme3.system.lwjgl.LwjglOffscreenBuffer.run(LwjglOffscreenBuffer.java:156)
	at java.base/java.lang.Thread.run(Thread.java:834)

java.lang.NullPointerException
	at com.jme3.system.awt.AwtPanel.drawFrameInThread(AwtPanel.java:169)
	at com.jme3.system.awt.AwtPanel.onFrameEnd(AwtPanel.java:308)
	at com.jme3.system.awt.AwtPanelsContext.updateInThread(AwtPanelsContext.java:203)
	at com.jme3.system.awt.AwtPanelsContext.access$100(AwtPanelsContext.java:45)
	at com.jme3.system.awt.AwtPanelsContext$AwtPanelsListener.update(AwtPanelsContext.java:69)
	at com.jme3.system.lwjgl.LwjglOffscreenBuffer.runLoop(LwjglOffscreenBuffer.java:125)
	at com.jme3.system.lwjgl.LwjglOffscreenBuffer.run(LwjglOffscreenBuffer.java:156)
	at java.base/java.lang.Thread.run(Thread.java:834)
```

The other problem you ran into is fixed in a more recent jME version, so you might try the 3.3 beta SDK release. It happens because you are probably running Mesa, which is strict about OpenGL 2.1 instead of allowing more (drivers typically allow even strange mixtures from 2.1 up to 4.4, or whatever your card supports).

You may want to look into that. I don't know if you can change it, because otherwise you need to enforce a core profile for a specific version.

This was the commit that solved it, btw.
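For context on the two compiler errors: line 201 of the dumped shader builds a `mat3` from the `mat4` `lightProbeData` (a matrix-from-matrix constructor, which GLSL 1.10 does not have, as the error says), and line 202 calls `inverse()`, which as far as I know only exists from GLSL 1.40 on. The shader's own comment describes the upper 3x3 block as a rotation matrix, and for a pure rotation the inverse is simply the transpose. The Java sketch below mirrors that arithmetic on a column-major `float[16]` (GLSL's layout), assuming the 3x3 block really is orthonormal; the class is hypothetical and this is not necessarily what the commit mentioned above does.

```java
import java.util.Arrays;

/** Mirrors GLSL's mat3(mat4) and shows inverse(R) == transpose(R) for a pure rotation. */
final class RotationInverse {

    /** Like GLSL mat3(m4): upper-left 3x3 of a column-major 4x4, stored as m4[col * 4 + row]. */
    static float[] upperLeft3x3(float[] m4) {
        float[] m3 = new float[9];
        for (int col = 0; col < 3; col++) {
            for (int row = 0; row < 3; row++) {
                m3[col * 3 + row] = m4[col * 4 + row];
            }
        }
        return m3;
    }

    /** Transpose of a column-major 3x3; equals the inverse only when the matrix is orthonormal. */
    static float[] transpose3(float[] m) {
        float[] t = new float[9];
        for (int col = 0; col < 3; col++) {
            for (int row = 0; row < 3; row++) {
                t[row * 3 + col] = m[col * 3 + row];
            }
        }
        return t;
    }

    /** Column-major 3x3 product a * b. */
    static float[] multiply3(float[] a, float[] b) {
        float[] r = new float[9];
        for (int col = 0; col < 3; col++) {
            for (int row = 0; row < 3; row++) {
                float sum = 0f;
                for (int k = 0; k < 3; k++) {
                    sum += a[k * 3 + row] * b[col * 3 + k];
                }
                r[col * 3 + row] = sum;
            }
        }
        return r;
    }

    public static void main(String[] args) {
        // 90-degree rotation about Z in the upper-left block; column 3 holds a position.
        float[] probe = {
             0, 1, 0, 0,  // column 0
            -1, 0, 0, 0,  // column 1
             0, 0, 1, 0,  // column 2
             5, 6, 7, 1   // column 3 (dropped by mat3())
        };
        float[] rot = upperLeft3x3(probe);
        // transpose(R) * R gives the 3x3 identity, so transpose(R) acts as inverse(R).
        System.out.println(Arrays.toString(multiply3(transpose3(rot), rot)));
    }
}
```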

I noticed this part in the issue title, but I can't understand why that happens; I also tried copying the file, and creating a new project shows the same error too.

I am using SDK 3.3.0 beta1 SNAPSHOT; maybe that was the problem!?

Because the glTF file was in your Blender projects folder at the time of the conversion, but I don't think this is a problem. It just copied it.

And yes, unfortunately only v3.3.2-stable-sdkpreview1 has the fix so far. But then jME/the SDK is still running in OpenGL 2.1-only mode.
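To line "OpenGL 2.1-only mode" up with the error message: each desktop OpenGL version comes with a matching GLSL version (2.0 → 1.10, 2.1 → 1.20, 3.0 → 1.30, 3.1 → 1.40, 3.2 → 1.50, and from 3.3 on the numbers match). A small lookup sketch with hypothetical names, which also records the two constructs from the error, assuming my reading of the GLSL specs is right (matrix-from-matrix constructors since GLSL 1.20, the `inverse()` built-in since GLSL 1.40):

```java
import java.util.Map;

/** Maps a desktop OpenGL version to the GLSL version it introduced (hypothetical helper). */
final class GlslVersions {

    private static final Map<String, Integer> GL_TO_GLSL = Map.ofEntries(
            Map.entry("2.0", 110), Map.entry("2.1", 120),
            Map.entry("3.0", 130), Map.entry("3.1", 140), Map.entry("3.2", 150),
            Map.entry("3.3", 330), Map.entry("4.0", 400), Map.entry("4.1", 410),
            Map.entry("4.2", 420), Map.entry("4.3", 430), Map.entry("4.4", 440),
            Map.entry("4.5", 450), Map.entry("4.6", 460));

    /** Minimum GLSL versions for the two constructs the compiler rejected. */
    static final int MAT3_FROM_MAT4 = 120;  // matrix-from-matrix constructor
    static final int INVERSE_BUILTIN = 140; // inverse() built-in

    static int glslFor(String glVersion) {
        Integer glsl = GL_TO_GLSL.get(glVersion);
        if (glsl == null) {
            throw new IllegalArgumentException("unknown OpenGL version: " + glVersion);
        }
        return glsl;
    }

    static boolean supports(String glVersion, int requiredGlsl) {
        return glslFor(glVersion) >= requiredGlsl;
    }

    public static void main(String[] args) {
        // Under OpenGL 2.1, mat3(mat4) becomes legal, but inverse() is still missing.
        System.out.println(supports("2.1", MAT3_FROM_MAT4));  // true
        System.out.println(supports("2.1", INVERSE_BUILTIN)); // false
    }
}
```

Note that the exception in the log shows jME compiled this shader as `GLSL110` (`#version 110`), which is why even the 1.20 feature was rejected here.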