That’s true!

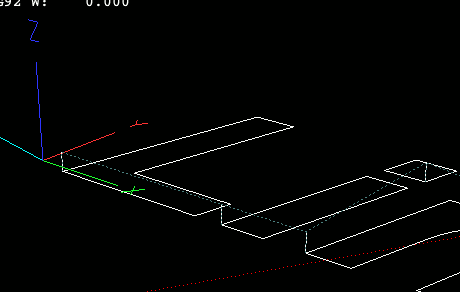

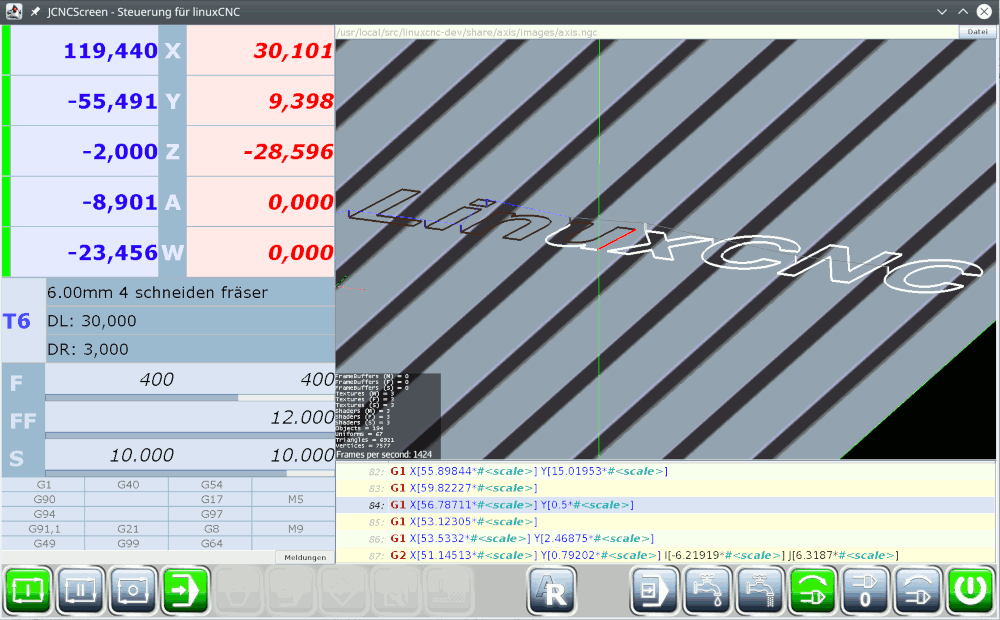

The situation is like this: you stand in front of a machine and want to look at a workpiece. Smaller workpieces need a closer look, bigger workpieces need more distance.

The camera control should work for both extremes.

Well, that’s what I thought ChaseCamera does, so it seemed like the best place to start.

But too many things in it are protected, hidden, or otherwise not accessible …

What drove me crazy is not the frustum handling. I don’t like the word, but I understand what it is doing.

The real drawback is the huge difference in scale. With the “normal” (perspective) camera you shift or rotate by values like 4-10.

Doing the same with parallel projection, you need values around 2000.

Both for the same model size!

That’s hard to figure out if you have never seen anything similar.
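One possible explanation for the scale gap, as far as I understand it: in perspective mode the frustum extents are measured on the near plane, so they stay small when the near plane is close to the camera, while in parallel projection the extents are plain world units and have to be about as large as the model itself. The sketch below is plain Java without any jME classes; the class and method names are hypothetical, and the 45 degree field of view and the margin factor are assumptions for illustration only.

```java
// Plain-Java sketch, no jME classes; all names here are hypothetical.
class FrustumScale {

    /** Half-height of the visible area at a given distance for a perspective camera. */
    static double perspectiveHalfHeight(double fovYDegrees, double distance) {
        return distance * Math.tan(Math.toRadians(fovYDegrees) / 2.0);
    }

    /** Half-height of the view volume in parallel projection; it does not depend on distance. */
    static double parallelHalfHeight(double modelHeight, double marginFactor) {
        return 0.5 * modelHeight * marginFactor;
    }

    public static void main(String[] args) {
        // Perspective: extent on a near plane at distance 1 (assumed 45 degree field of view).
        System.out.println("perspective, near plane: " + perspectiveHalfHeight(45.0, 1.0));
        // Parallel: extent in world units for a model 1800 units tall, with some margin.
        System.out.println("parallel, world units:   " + parallelHalfHeight(1800.0, 2.0));
    }
}
```

Read this way, the two numbers describe different things: one is a size on the near plane, the other is the size of the visible slice of the world.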

I have to confess, I have no idea about gaming. I mean, I have never played a game, ever! So I’m even more of an outsider than a noob.

You see the difference?

For me, thinking in 3D orthogonal projection is daily work.

Everything is easier if you understand math. That’s one of my biggest weaknesses: I didn’t learn enough when I was younger, and now it’s hard to understand even fairly easy things …

In the meantime I have read nearly everything in the wiki, but the gap to my understanding is still so HUGE …

… anyway: I started with parallel projection from scratch and think I’m on the way to understanding a bit. First I tried to move the point the camera looks at, but that resulted in a rotation and not in a translation. So I had to learn to distinguish when to move the camera and when to modify the orientation of the model.
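The rotation-versus-translation effect can be shown with a few lines of vector math. This is only a sketch in plain Java (no jME classes, hypothetical names, made-up coordinates): moving just the look-at point changes the view direction, which is a rotation, while shifting the camera location and the look-at point by the same offset keeps the direction, which is a pan.

```java
import java.util.Arrays;

// Plain-Java sketch, no jME classes; all names and coordinates are hypothetical.
class PanVersusLookAt {

    /** Normalized view direction from the camera location to the look-at point. */
    static double[] direction(double[] from, double[] to) {
        double dx = to[0] - from[0];
        double dy = to[1] - from[1];
        double dz = to[2] - from[2];
        double len = Math.sqrt(dx * dx + dy * dy + dz * dz);
        return new double[] {dx / len, dy / len, dz / len};
    }

    /** Component-wise sum of two 3D vectors. */
    static double[] add(double[] a, double[] b) {
        return new double[] {a[0] + b[0], a[1] + b[1], a[2] + b[2]};
    }

    public static void main(String[] args) {
        double[] camera = {500, 1000, 950};
        double[] target = {0, 0, 0};
        double[] offset = {100, 0, 0};

        // Original view direction.
        System.out.println(Arrays.toString(direction(camera, target)));
        // Only the look-at point moves: the direction changes (rotation).
        System.out.println(Arrays.toString(direction(camera, add(target, offset))));
        // Camera and look-at point move together: the direction stays (translation).
        System.out.println(Arrays.toString(direction(add(camera, offset), add(target, offset))));
    }
}
```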

Here’s my test code (without mouse for better separation of actions):

```java
package jme3test.renderer;

import com.jme3.app.SimpleApplication;
import com.jme3.input.KeyInput;
import com.jme3.input.controls.ActionListener;
import com.jme3.input.controls.AnalogListener;
import com.jme3.input.controls.KeyTrigger;
import com.jme3.material.Material;
import com.jme3.math.ColorRGBA;
import com.jme3.math.FastMath;
import com.jme3.math.Quaternion;
import com.jme3.math.Vector3f;
import com.jme3.scene.Geometry;
import com.jme3.scene.Node;
import com.jme3.scene.debug.WireBox;
import com.jme3.scene.shape.Quad;
import com.jme3.util.JmeFormatter;
import java.util.logging.ConsoleHandler;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.Logger;

/**
 * @author django
 */
public class TestMyCamera extends SimpleApplication implements AnalogListener, ActionListener {

    public TestMyCamera(float[] limits) {
        size = new Vector3f(limits[1] - limits[0], limits[5] - limits[4],
                limits[3] - limits[2]);
        l.log(Level.INFO, "initial size calculation: " + size);
        rotation = new Quaternion();
    }

    @Override
    public void onAction(String name, boolean isPressed, float tpf) {
        if (RotateTrigger.equals(name)) {
            rotate = isPressed;
        }
    }

    @Override
    public void onAnalog(String name, float value, float tpf) {
        if (ZoomIN.equals(name)) {
            zoomFactor -= 300f * tpf;
            resizeView();
        }
        else if (ZoomOUT.equals(name)) {
            zoomFactor += 300f * tpf;
            resizeView();
        }
        if (rotate) {
            if (KeyLeft.equals(name)) {
                rotation.fromAngleAxis(tpf, Vector3f.UNIT_Y);
                rotateMachine();
            }
            else if (KeyRight.equals(name)) {
                rotation.fromAngleAxis(-tpf, Vector3f.UNIT_Y);
                rotateMachine();
            }
            else if (KeyUp.equals(name)) {
                rotation.fromAngleAxis(tpf, Vector3f.UNIT_X);
                rotateMachine();
            }
            else if (KeyDown.equals(name)) {
                rotation.fromAngleAxis(-tpf, Vector3f.UNIT_X);
                rotateMachine();
            }
        }
        else {
            if (KeyLeft.equals(name)) {
                camLoc.x += 150f * tpf;
                moveCamera();
            }
            else if (KeyRight.equals(name)) {
                camLoc.x -= 150f * tpf;
                moveCamera();
            }
            else if (KeyUp.equals(name)) {
                camLoc.z += 150f * tpf;
                moveCamera();
            }
            else if (KeyDown.equals(name)) {
                camLoc.z -= 150f * tpf;
                moveCamera();
            }
        }
    }

    @Override
    public void simpleInitApp() {
        flyCam.setEnabled(false);
        cam.setParallelProjection(true);
        camLoc = new Vector3f(size.x * 0.5f + 50f, size.y * 2f, size.z * 0.5f + 50f);
        // INFORMATION RootLogger 09:06:12 camera location: (-150.48007, 1000.0, -426.1831)
        // INFORMATION RootLogger 09:06:12 camera direction: (-0.3407991, -0.6815982, -0.64751816)
        // INFORMATION RootLogger 09:05:26 resizeView - aspect: 1.6 - zoomFactor: 1780.575
        cam.setLocation(camLoc);
        cam.lookAt(camLoc.negate(), camDir);
        camLoc.x = -150f;
        camLoc.z = -430f;
        moveCamera();
        registerInputs();
        resizeView();
        createMachine();
    }

    protected void createMachine() {
        Geometry box = new Geometry("Box", new WireBox(size.x, size.y, size.z));
        Geometry ground = new Geometry("Ground", new Quad(size.x * 2, size.z * 2));
        Material m = new Material(assetManager, Unshaded);

        l.log(Level.INFO, "workspace: " + new Vector3f(size.x, size.y, size.z));
        m.getAdditionalRenderState().setWireframe(true);
        m.setColor("Color", ColorRGBA.Green);
        box.setMaterial(m);
        box.setLocalTranslation(-(size.x * 0.5f), size.y, -(size.z * 0.5f));

        m = new Material(assetManager, Unshaded);
        m.setTexture("ColorMap", assetManager.loadTexture(
                "Interface/Logo/Monkey.jpg"));
        ground.setMaterial(m);
        ground.setLocalRotation(new Quaternion().fromAngleAxis(-FastMath.HALF_PI,
                Vector3f.UNIT_X));
        ground.setLocalTranslation(-1.5f * size.x, 0, size.z * 0.5f);

        machine = new Node("Machine");
        machine.attachChild(box);
        machine.attachChild(ground);
        rootNode.attachChild(machine);
    }

    protected void moveCamera() {
        l.log(Level.INFO, "camera location: " + camLoc);
        l.log(Level.INFO, "camera direction: " + cam.getDirection());
        cam.setLocation(camLoc);
    }

    protected void registerInputs() {
        inputManager.addMapping(ZoomIN, new KeyTrigger(KeyInput.KEY_ADD));
        inputManager.addMapping(ZoomOUT, new KeyTrigger(KeyInput.KEY_SUBTRACT));
        inputManager.addMapping(KeyLeft, new KeyTrigger(KeyInput.KEY_LEFT));
        inputManager.addMapping(KeyRight, new KeyTrigger(KeyInput.KEY_RIGHT));
        inputManager.addMapping(KeyUp, new KeyTrigger(KeyInput.KEY_UP));
        inputManager.addMapping(KeyDown, new KeyTrigger(KeyInput.KEY_DOWN));
        inputManager
                .addMapping(RotateTrigger, new KeyTrigger(KeyInput.KEY_LSHIFT),
                        new KeyTrigger(KeyInput.KEY_RSHIFT));
        inputManager.addListener(this, ZoomIN, ZoomOUT, KeyLeft, KeyRight, KeyUp,
                KeyDown, RotateTrigger);
    }

    protected void resizeView() {
        float aspect = (float) cam.getWidth() / (float) cam.getHeight();

        l.log(Level.INFO, "resizeView - aspect: " + aspect + " - zoomFactor: " +
                zoomFactor);
        // calculate Viewport
        cam.setFrustum(-2000, 6000, -aspect * zoomFactor, aspect * zoomFactor,
                zoomFactor, -zoomFactor);
    }

    protected void rotateMachine() {
        l.log(Level.INFO, "machine orientation is: " + machine.getLocalRotation());
        l.log(Level.INFO, ">>> rotate machine by: " + rotation);
        l.log(Level.INFO, " machine's location: " + machine
                .getLocalTranslation());
        machine.rotate(rotation);
    }

    public static void main(String[] args) {
        JmeFormatter formatter = new JmeFormatter();
        Handler consoleHandler = new ConsoleHandler();

        consoleHandler.setFormatter(formatter);
        Logger.getLogger("").removeHandler(Logger.getLogger("").getHandlers()[0]);
        Logger.getLogger("").addHandler(consoleHandler);
        TestMyCamera app = new TestMyCamera(
                // machine limits given from outside
                new float[]{0, 900, 0, 1800, -500, 0});

        app.start();
    }

    private Node machine;
    private float zoomFactor = 1800f;
    private boolean rotate;
    private Quaternion rotation;
    private Vector3f camLoc;
    private final Vector3f size;
    private static final Logger l;
    private static final Vector3f camDir = new Vector3f(0, 1, 0);
    private static final String Unshaded = "Common/MatDefs/Misc/Unshaded.j3md";
    private static final String ZoomIN = "zoomIN";
    private static final String ZoomOUT = "zoomOUT";
    private static final String RotateTrigger = "trigRotation";
    private static final String KeyLeft = "kLeft";
    private static final String KeyRight = "kRight";
    private static final String KeyUp = "kUp";
    private static final String KeyDown = "kDown";

    static {
        l = Logger.getLogger("");
    }
}
```

You can’t imagine how long it took to get the monkey to fit the bottom face of the WireBox.

It’s that kind of inconsistency that drives me crazy.

But … well, everything is a challenge.
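For anyone fighting the same placement, here is how I read the two conventions (an assumption on my side, not checked against the engine sources): WireBox takes half-extents around its center, while Quad takes the full width and height starting at one corner, and after the rotation by -HALF_PI around X its height runs along negative Z. The sketch below is plain Java with hypothetical names and only redoes the arithmetic of the translations in `createMachine()` for the X and Z axes.

```java
import java.util.Arrays;

// Plain-Java sketch, no jME classes; class and method names are hypothetical.
class QuadUnderWireBox {

    /** Range covered along one axis by a shape given as center plus half-extent. */
    static double[] centeredRange(double center, double halfExtent) {
        return new double[] {center - halfExtent, center + halfExtent};
    }

    /** Range covered along one axis by an edge that starts at a corner. */
    static double[] cornerRange(double corner, double length, boolean towardsNegative) {
        return towardsNegative
                ? new double[] {corner - length, corner}
                : new double[] {corner, corner + length};
    }

    public static void main(String[] args) {
        double sizeX = 900, sizeZ = 1800; // taken from the machine limits in the test code

        // Box: center at (-size.x / 2, -size.z / 2), half-extents (size.x, size.z).
        double[] boxX = centeredRange(-0.5 * sizeX, sizeX);
        double[] boxZ = centeredRange(-0.5 * sizeZ, sizeZ);

        // Quad: corner at (-1.5 * size.x, 0.5 * size.z), full size (2 * size.x, 2 * size.z),
        // with the rotated height pointing towards negative Z.
        double[] quadX = cornerRange(-1.5 * sizeX, 2 * sizeX, false);
        double[] quadZ = cornerRange(0.5 * sizeZ, 2 * sizeZ, true);

        System.out.println("box  x " + Arrays.toString(boxX) + "  z " + Arrays.toString(boxZ));
        System.out.println("quad x " + Arrays.toString(quadX) + "  z " + Arrays.toString(quadZ));
    }
}
```

If both lines print the same ranges, the quad covers exactly the footprint of the box.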