I’m not sure whether this belongs in a separate topic, so I’m creating this thread on its own. Since there is some contention around that PR (see the discussion at the end of the PR), I’m temporarily moving it to “Draft” status until we finalize the public API for the FG (FrameGraph) system in JME3. Once that is settled, I’ll add the relevant code back and return the PR to “Ready for Review”.

(FG usually matters more to the engine than to gameplay, since not everyone needs to write a “custom pipeline”. However, I believe everyone should be made aware when a new technology enters JME3, so I created this post here.)

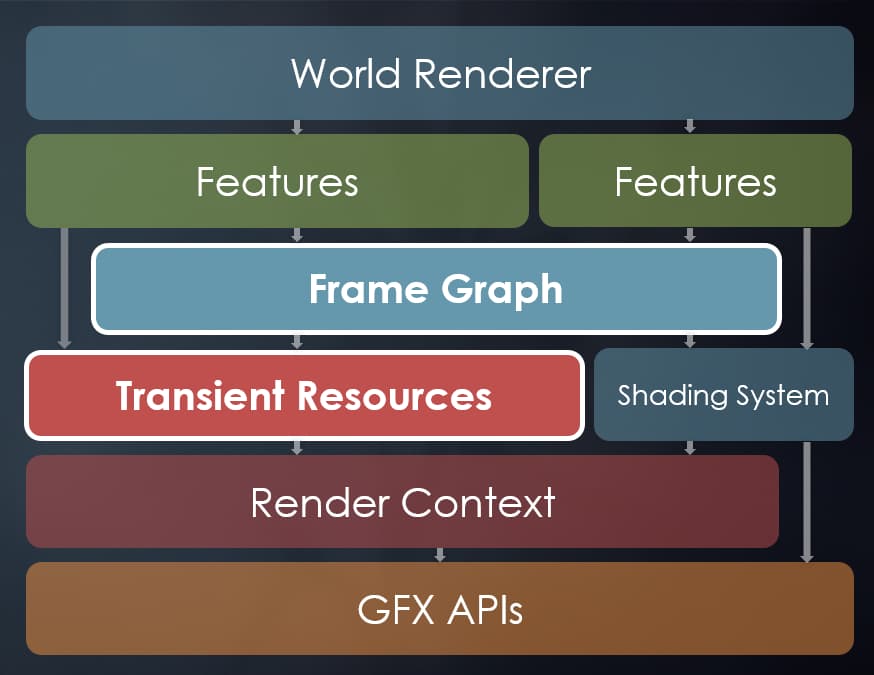

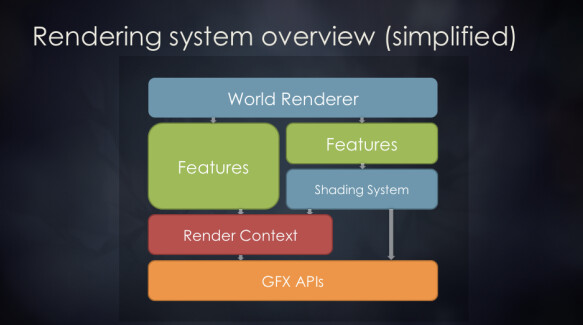

FrameGraph is used to optimize the entire rendering pipeline per-frame. FrameGraph aims to decouple the various rendering features of the engine from the upper layer rendering logic and lower level resources (shaders, render contexts, graphics APIs, etc.) to enable further decoupling and optimizations, with multi-threaded and parallel rendering being the most important.

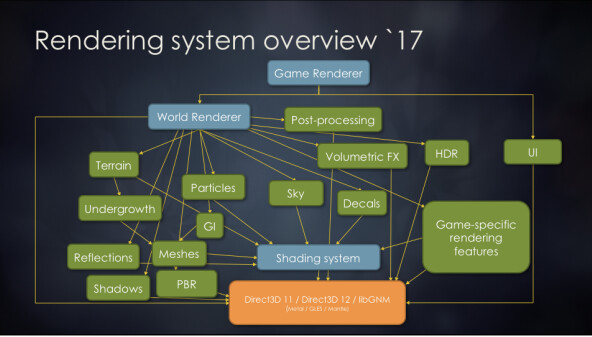

Below is the Frostbite engine architecture diagram from GDC 2017 showing this:

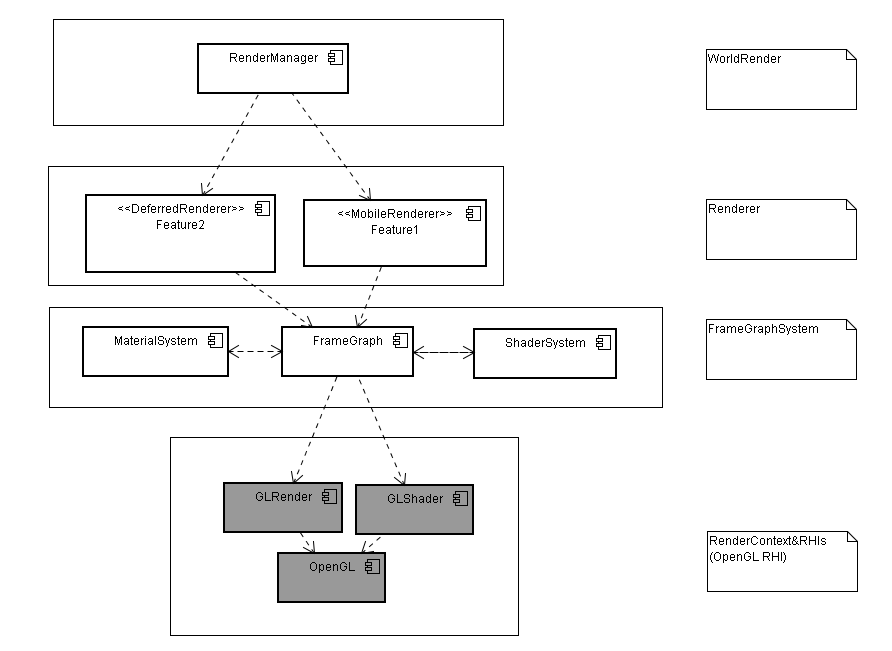

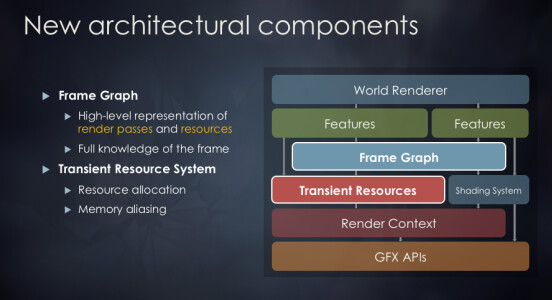

Since JME3 only has OpenGL, there is no actual graphics API abstraction layer (RHI), so the conceptual mapping to a future JME3, with some adjustments, is as follows:

You may wonder why MaterialSystem and ShaderSystem have mutual dependencies with FG. Actually they don’t need to depend on each other, but consider the following scenario:

We want to develop a Feature that runs compute shaders and generates result data (a ShaderResourceView, SRV), which is then passed to Material->SetSRV(). Initially, GameObj->Material neither knows about nor owns that SRV. So GameObj->Material can first register the SRV it depends on with FG; later, when FG execution reaches the relevant stage, FG can dispatch the result data (SRV) to the corresponding GameObj->Material.

So there can be mutual dependencies between MaterialSystem, ShaderSystem, and FG to enable flexible resource management in scenarios like this.

The traditional approach is to wait until FG finishes a frame, call findSRV(TargetSRVName) on FG in the next frame, and manually iterate Scene->Node->Material->setSRV(). But this means writing ugly loops that have nothing to do with business logic. More often, we would rather declare the SRV in the MaterialDef, for example:

MaterialDef{

SRV0 = TargetSRV;

…

}

So with the mutual dependencies, FG can directly dispatch resources like SRV to the right Material instance that depends on it based on the MaterialDef, without requiring manual dispatching code.
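To make the register-then-dispatch idea more concrete, here is a minimal Java sketch. It is not JME3 API: `ResourceDispatcher`, `registerDependency`, `publish` and the stand-in `Material` class are hypothetical names I made up for illustration, and resources are plain `Object`s.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch (not JME3 API): materials register the named resources
// they depend on, and the FG dispatches each resource once a pass produces it.
final class SrvDispatchSketch {

    /** Stand-in for a material; it only stores named shader resources. */
    static final class Material {
        final Map<String, Object> boundResources = new HashMap<>();

        void setSRV(String slot, Object resource) {
            boundResources.put(slot, resource);
        }
    }

    /** Records that a material wants a resource bound to one of its slots. */
    private static final class Dependency {
        final Material material;
        final String slot;

        Dependency(Material material, String slot) {
            this.material = material;
            this.slot = slot;
        }
    }

    /** Stand-in for the part of the FG that tracks resource consumers. */
    static final class ResourceDispatcher {
        private final Map<String, List<Dependency>> waiting = new HashMap<>();

        /** Called once, e.g. when a MaterialDef declaring "SRV0 = TargetSRV" is loaded. */
        void registerDependency(String resourceName, Material material, String slot) {
            waiting.computeIfAbsent(resourceName, k -> new ArrayList<>())
                   .add(new Dependency(material, slot));
        }

        /** Called when a pass has produced the resource; returns how many materials were updated. */
        int publish(String resourceName, Object resource) {
            List<Dependency> deps = waiting.getOrDefault(resourceName, Collections.emptyList());
            for (Dependency d : deps) {
                d.material.setSRV(d.slot, resource);
            }
            return deps.size();
        }
    }

    public static void main(String[] args) {
        ResourceDispatcher fg = new ResourceDispatcher();
        Material water = new Material();
        fg.registerDependency("TargetSRV", water, "SRV0");

        Object computeResult = "compute-output"; // placeholder for a real GPU resource
        int updated = fg.publish("TargetSRV", computeResult);
        System.out.println(updated + " material(s) updated");
    }
}
```

With something like this, application code never loops over the scene to call setSRV(); the loop lives in one place inside the FG.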

Since FG is implemented slightly differently in different engines, I will briefly describe the FG in commercial engines I have used before (Unreal Engine and Unity).

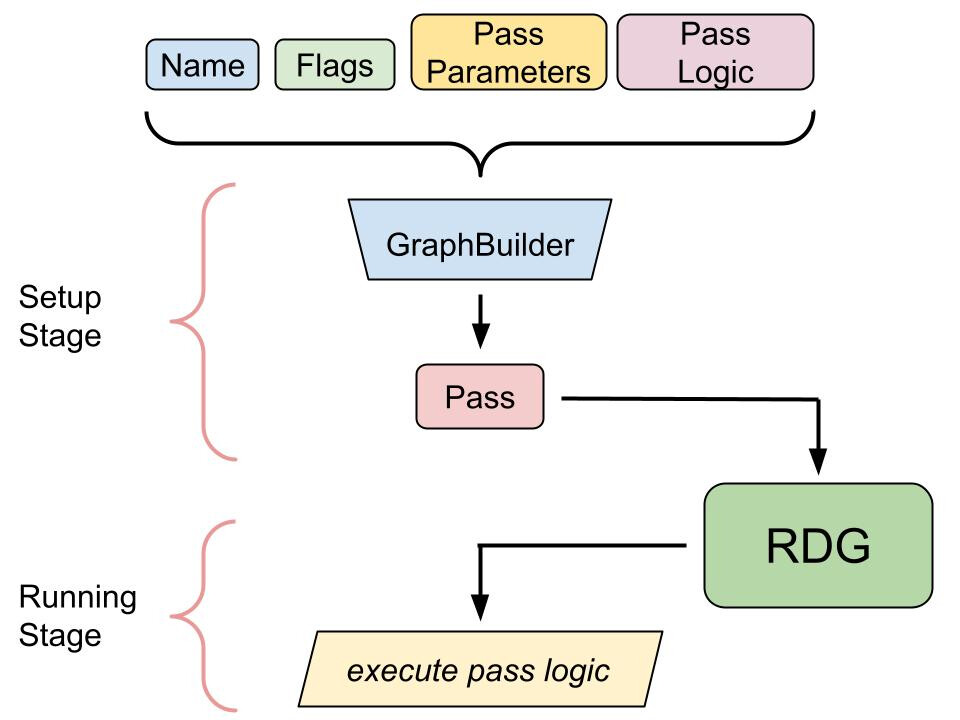

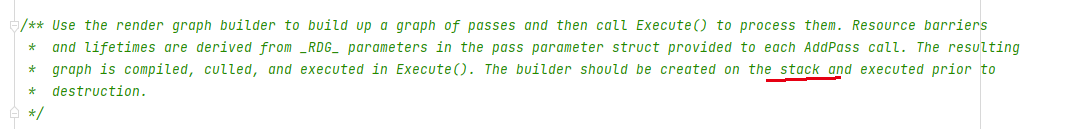

UnrealEngine RDG

In UE, FG is called RDG (Render Dependency Graph), and below is its basic structure:

// Instantiate the resources we need for our pass

FShaderParameterStruct* PassParameters = GraphBuilder.AllocParameters<FShaderParameterStruct>();

// Fill in the pass parameters

PassParameters->MyParameter = GraphBuilder.CreateSomeResource(MyResourceDescription, TEXT("MyResourceName"));

// Define pass and add it to the RDG builder

GraphBuilder.AddPass(

RDG_EVENT_NAME("MyRDGPassName"),

PassParameters,

ERDGPassFlags::Raster,

[PassParameters, OtherDataToCapture](FRHICommandList& RHICmdList)

{

// … pass logic here, render something! ...

});

As you can see, the standard UE RDG is created, used and destroyed on the stack every frame. Below is the logic of the main frame in the MobileRenderer in UE:

void FMobileSceneRenderer::Render(FRHICommandListImmediate& RHICmdList){

...

if (bDeferredShading)

{

...

FRDGBuilder GraphBuilder(RHICmdList);

ComputeLightGrid(GraphBuilder, bCullLightsToGrid, SortedLightSet);

GraphBuilder.Execute();

}

...

if (bShouldRenderVelocities)

{

FRDGBuilder GraphBuilder(RHICmdList);

FRDGTextureMSAA SceneDepthTexture = RegisterExternalTextureMSAA(GraphBuilder, SceneContext.SceneDepthZ);

FRDGTextureRef VelocityTexture = TryRegisterExternalTexture(GraphBuilder, SceneContext.SceneVelocity);

if (VelocityTexture != nullptr)

{

AddClearRenderTargetPass(GraphBuilder, VelocityTexture);

}

// Render the velocities of movable objects

AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_Velocity));

RenderVelocities(GraphBuilder, SceneDepthTexture.Resolve, VelocityTexture, FSceneTextureShaderParameters(), EVelocityPass::Opaque, false);

AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_AfterVelocity));

AddSetCurrentStatPass(GraphBuilder, GET_STATID(STAT_CLMM_TranslucentVelocity));

RenderVelocities(GraphBuilder, SceneDepthTexture.Resolve, VelocityTexture, GetSceneTextureShaderParameters(CreateMobileSceneTextureUniformBuffer(GraphBuilder, EMobileSceneTextureSetupMode::SceneColor)), EVelocityPass::Translucent, false);

GraphBuilder.Execute();

}

....

FRDGBuilder GraphBuilder(RHICmdList);

{

for (int32 ViewIndex = 0; ViewIndex < Views.Num(); ViewIndex++)

{

RDG_EVENT_SCOPE_CONDITIONAL(GraphBuilder, Views.Num() > 1, "View%d", ViewIndex);

PostProcessingInputs.SceneTextures = MobileSceneTexturesPerView[ViewIndex];

AddMobilePostProcessingPasses(GraphBuilder, Views[ViewIndex], PostProcessingInputs, NumMSAASamples > 1);

}

}

GraphBuilder.Execute();

...

...

}

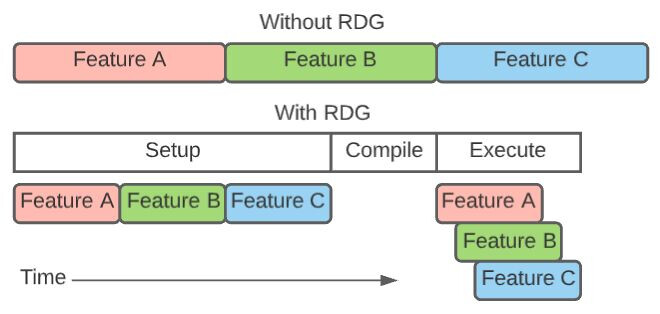

As you can see, UE lets you create Passes through several different FG builders on the stack. You may wonder when execution actually happens. In UE, one purpose of RDG is to make use of multi-threading, as follows:

In the diagram without RDG, rendering features are written on a single timeline where both setup and execution are done in place. RHI commands are recorded and submitted directly in line with pipeline branching and resource allocation.

With RDG, the setup code is separated from execution through user-supplied pass execution lambdas. RDG performs an additional compilation step prior to invoking the pass execution lambdas, and execution is performed across several threads, calling into the lambdas to record render commands into RHI command lists.

As you can see, FG utilizes multi-threading to compile resources (SRVs) in parallel. One advantage of modern graphics APIs is parallel resource handling. For the OpenGL RHI, UE does SRV compilation serially internally but can parallelize it by launching multiple GL contexts.

After that, FG executes the user’s lambda expressions, submitting commands to the RHIThread (note FG runs on the RenderThread). The RHIThread will try to execute more graphics commands in the next frame or when idle.
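The separation of setup, compilation and execution can be illustrated with a small single-threaded Java sketch. This is not how UE implements RDG; `Graph`, `Pass`, `read`, `write` and `compile` are hypothetical names, and I assume passes are added in producer-before-consumer order. The point is only that pass bodies are recorded as lambdas, a compile step drops passes that do not contribute to the final output, and nothing runs until execute is called.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical sketch: deferred pass execution with a culling "compile" step.
final class DeferredPassSketch {

    static final class Pass {
        final String name;
        final Set<String> reads = new HashSet<>();
        final Set<String> writes = new HashSet<>();
        final Runnable body; // recorded during setup, invoked only during execute

        Pass(String name, Runnable body) {
            this.name = name;
            this.body = body;
        }

        Pass read(String resource) { reads.add(resource); return this; }
        Pass write(String resource) { writes.add(resource); return this; }
    }

    static final class Graph {
        private final List<Pass> passes = new ArrayList<>();

        /** Setup phase: only records the pass, nothing is executed here. */
        Pass addPass(String name, Runnable body) {
            Pass p = new Pass(name, body);
            passes.add(p);
            return p;
        }

        /** Compile phase: walk backwards from the final output and keep contributing passes. */
        List<Pass> compile(String finalOutput) {
            Set<String> needed = new HashSet<>();
            needed.add(finalOutput);
            List<Pass> kept = new ArrayList<>();
            for (int i = passes.size() - 1; i >= 0; i--) {
                Pass p = passes.get(i);
                boolean contributes = false;
                for (String w : p.writes) {
                    if (needed.contains(w)) contributes = true;
                }
                if (contributes) {
                    needed.addAll(p.reads); // its inputs are now needed too
                    kept.add(0, p);         // preserve the original order
                }
            }
            return kept;
        }

        /** Execute phase: run the surviving pass bodies and return their names. */
        List<String> execute(String finalOutput) {
            List<String> executed = new ArrayList<>();
            for (Pass p : compile(finalOutput)) {
                p.body.run();
                executed.add(p.name);
            }
            return executed;
        }
    }

    public static void main(String[] args) {
        Graph g = new Graph();
        g.addPass("depth", () -> System.out.println("draw depth")).write("sceneDepth");
        g.addPass("debug", () -> System.out.println("draw debug")).read("sceneDepth").write("debugTex");
        g.addPass("lighting", () -> System.out.println("draw lighting")).read("sceneDepth").write("sceneColor");
        System.out.println("executed: " + g.execute("sceneColor"));
    }
}
```

In this sketch the "debug" pass is culled because nothing that leads to "sceneColor" reads its output. A multi-threaded version would hand the compiled list to worker threads instead of running it inline.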

UE’s RDG system is deeply integrated into many modules, not just rendering. Below is one common usage:

class Actor : UActorComponent{

public:

void Tick(){

{

// compute task

FRDGBuilder GraphBuilder(RHICmdList);

FMyCustomSimpleComputeShaderTask* PassParameters = GraphBuilder.AllocParameters<FMyCustomSimpleComputeShaderTask>();

PassParameters->RectMinMaxBuffer = RectMinMaxBuffer;

....

GraphBuilder.AddPass(

RDG_EVENT_NAME("MyCustomSimpleCustomShaderTask"),

PassParameters,

ERDGPassFlags::Copy,

[PassParameters, RectMinMaxToRenderSizeInBytes, RectMinMaxToRenderDataPtr](FRHICommandListImmediate& RHICmdList)

{

void* DestBVHQueryInfoPtr = RHILockVertexBuffer(PassParameters->RectMinMaxBuffer->GetRHIVertexBuffer(), 0, RectMinMaxToRenderSizeInBytes, RLM_WriteOnly);

FPlatformMemory::Memcpy(DestBVHQueryInfoPtr, RectMinMaxToRenderDataPtr, RectMinMaxToRenderSizeInBytes);

RHIUnlockVertexBuffer(PassParameters->RectMinMaxBuffer->GetRHIVertexBuffer());

});

GraphBuilder.Execute();

}

{

FRDGBuilder GraphBuilder(RHICmdList);

// ... other FG logic

GraphBuilder.Execute();

}

}

}

Two RDGs are built, executed, and destroyed on the stack, while their actual content is submitted to the RenderThread for parallel setup/compilation/optimization/culling. The RenderThread then calls back into the user lambdas to submit commands to the RHIThread for execution.

So RDGs provide a way to parallelize and optimize work across threads before final submission to the GPU via the RHIThread. Because they are executed and destroyed on the stack each frame, no graph state persists across frames.

Resources, however, are not created and compiled every frame; RDG manages and releases them automatically. To reuse a resource across multiple frames (a GBuffer, for example), you can look up previously registered or created SRV resources via RegisterExternalXXX() and TryRegisterExternalXXX().
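As a rough illustration of "the graph is rebuilt every frame, but resources are not", here is a small Java sketch of a name-keyed resource pool. It is an assumption-laden toy and not UE's implementation: `findOrCreate`, `endFrame` and the idle-frame policy are hypothetical, and resources are plain `Object`s.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical sketch: resources outlive the per-frame graph and are released
// only after they have gone unused for a number of frames.
final class ResourcePoolSketch {

    private static final class Entry {
        final Object resource;
        int lastUsedFrame;

        Entry(Object resource, int frame) {
            this.resource = resource;
            this.lastUsedFrame = frame;
        }
    }

    private final Map<String, Entry> entries = new HashMap<>();
    private int frame = 0;
    int creations = 0; // how many times the factory actually ran

    /** Returns the pooled resource for this name, creating it only on first use. */
    Object findOrCreate(String name, Supplier<Object> factory) {
        Entry e = entries.get(name);
        if (e == null) {
            e = new Entry(factory.get(), frame);
            entries.put(name, e);
            creations++;
        }
        e.lastUsedFrame = frame;
        return e.resource;
    }

    /** Ends the frame and releases resources idle for at least maxIdleFrames frames. */
    int endFrame(int maxIdleFrames) {
        int released = 0;
        Iterator<Entry> it = entries.values().iterator();
        while (it.hasNext()) {
            if (frame - it.next().lastUsedFrame >= maxIdleFrames) {
                it.remove();
                released++;
            }
        }
        frame++;
        return released;
    }

    public static void main(String[] args) {
        ResourcePoolSketch pool = new ResourcePoolSketch();
        for (int i = 0; i < 3; i++) {
            pool.findOrCreate("GBufferA", Object::new); // requested every frame
            if (i == 0) pool.findOrCreate("Temp", Object::new); // requested once
            System.out.println("frame " + i + " released " + pool.endFrame(1));
        }
        System.out.println("factory calls: " + pool.creations);
    }
}
```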

Unity3d RenderGraph

Next I will explain the RenderGraph (FG) in Unity3d. Unity3d uses C#, where class instances, as in Java, live on the heap by default (C# does offer structs and stackalloc for stack allocation, but not for ordinary class objects). So Unity3d’s FG interface does not create and destroy a stack-allocated graph every frame the way UE does. Below is a sample custom pipeline in Unity3d:

public class MyRenderPipeline : RenderPipeline

{

RenderGraph m_RenderGraph;

void InitializeRenderGraph()

{

m_RenderGraph = new RenderGraph("MyRenderGraph");

}

void CleanupRenderGraph()

{

m_RenderGraph.Cleanup();

m_RenderGraph = null;

}

}

The RenderGraph in Unity3d is relatively simple. It divides the resources for Passes into external and internal resources. External resources can be passed to the current Pass from other places (like another Pass or global resources), while internal resources can only be accessed by the current Pass.

After that, you can create a Pass by adding a lambda expression, or you can create a custom Pass by adding a static function, like below (simplified pseudocode rather than the exact Unity API):

RenderGraph.AddRenderPass("Custom Pass", () => {

// Pass logic

});

// Or

static void CustomPass(RenderGraphContext context) {

// Pass logic

}

RenderGraph.AddRenderPass<CustomPass>();

Consistent with Unreal Engine, most SRVs in Unity3d are created by the RenderGraph, as shown in the following functions:

RenderGraph.CreateTexture(desc);

RenderGraph.CreateSharedTexture(desc);

RenderGraph.CreateUniformBuffer(xxx);

RenderGraph.CreateComputeBuffer(xxx);

Since Unity3d’s source code is not open source and is only available under a commercial license, I cannot provide internal details here. It should be much simpler than Unreal Engine’s implementation, which has pros and cons: it may not be as thoroughly optimized or as powerful.

For a short description of the RenderGraph in Unity3d, please refer to “Benefits of the render graph system” (Core RP Library 10.2.2).

In Unity3D, the RenderGraph is part of the Scriptable Render Pipeline (SRP).

The RenderGraph allows defining rendering passes and representing resource dependencies between passes within the SRP pipeline.

Some key points:

- The RenderGraph is built on top of the SRP pipeline to define passes and resources.

- Passes are added via RenderGraph.AddRenderPass.

- Texture resources are created via APIs like RenderGraph.CreateTexture.

- The RenderGraph handles scheduling and execution of passes.

- The RenderGraph object itself persists across frames as a member variable, unlike UE’s FRDGBuilder, which is created and destroyed on the stack each frame.

So the Unity3D RenderGraph is built internally within the SRP pipeline to define pass workflow and resources, simplifying custom rendering pipeline development. It has some different design tradeoffs compared to UE’s RDG but solves the same core problems.
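The core problem both systems solve, ordering passes from their declared resource dependencies, can be sketched in a few lines of Java. This is a generic topological sort (Kahn's algorithm) and not code from Unity or UE; `Pass`, `schedule` and the resource names are hypothetical, and I assume each resource is written by at most one pass.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: derive a valid execution order from read/write declarations.
final class PassOrderSketch {

    static final class Pass {
        final String name;
        final List<String> reads;
        final List<String> writes;

        Pass(String name, List<String> reads, List<String> writes) {
            this.name = name;
            this.reads = reads;
            this.writes = writes;
        }
    }

    /** Returns pass names so that every producer runs before its consumers. */
    static List<String> schedule(List<Pass> passes) {
        // Map each resource to the pass that writes it.
        Map<String, Pass> producer = new HashMap<>();
        for (Pass p : passes) {
            for (String w : p.writes) producer.put(w, p);
        }

        // Count unmet inputs per pass and remember who consumes whose output.
        Map<Pass, Integer> pending = new LinkedHashMap<>();
        Map<Pass, List<Pass>> consumers = new HashMap<>();
        for (Pass p : passes) {
            int count = 0;
            for (String r : p.reads) {
                Pass src = producer.get(r);
                if (src != null && src != p) {
                    consumers.computeIfAbsent(src, k -> new ArrayList<>()).add(p);
                    count++;
                }
            }
            pending.put(p, count);
        }

        // Repeatedly emit passes whose inputs are all available.
        Deque<Pass> ready = new ArrayDeque<>();
        for (Map.Entry<Pass, Integer> e : pending.entrySet()) {
            if (e.getValue() == 0) ready.add(e.getKey());
        }
        List<String> order = new ArrayList<>();
        while (!ready.isEmpty()) {
            Pass p = ready.poll();
            order.add(p.name);
            for (Pass c : consumers.getOrDefault(p, Collections.emptyList())) {
                if (pending.merge(c, -1, Integer::sum) == 0) ready.add(c);
            }
        }
        if (order.size() != passes.size()) {
            throw new IllegalStateException("cyclic dependency between passes");
        }
        return order;
    }

    public static void main(String[] args) {
        List<Pass> passes = new ArrayList<>();
        passes.add(new Pass("post", Collections.singletonList("color"), Collections.singletonList("final")));
        passes.add(new Pass("lighting", Collections.singletonList("gbuffer"), Collections.singletonList("color")));
        passes.add(new Pass("gbuffer", Collections.<String>emptyList(), Collections.singletonList("gbuffer")));
        System.out.println(schedule(passes));
    }
}
```

The passes are deliberately registered in the wrong order in `main`; the schedule is derived purely from what each pass reads and writes.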

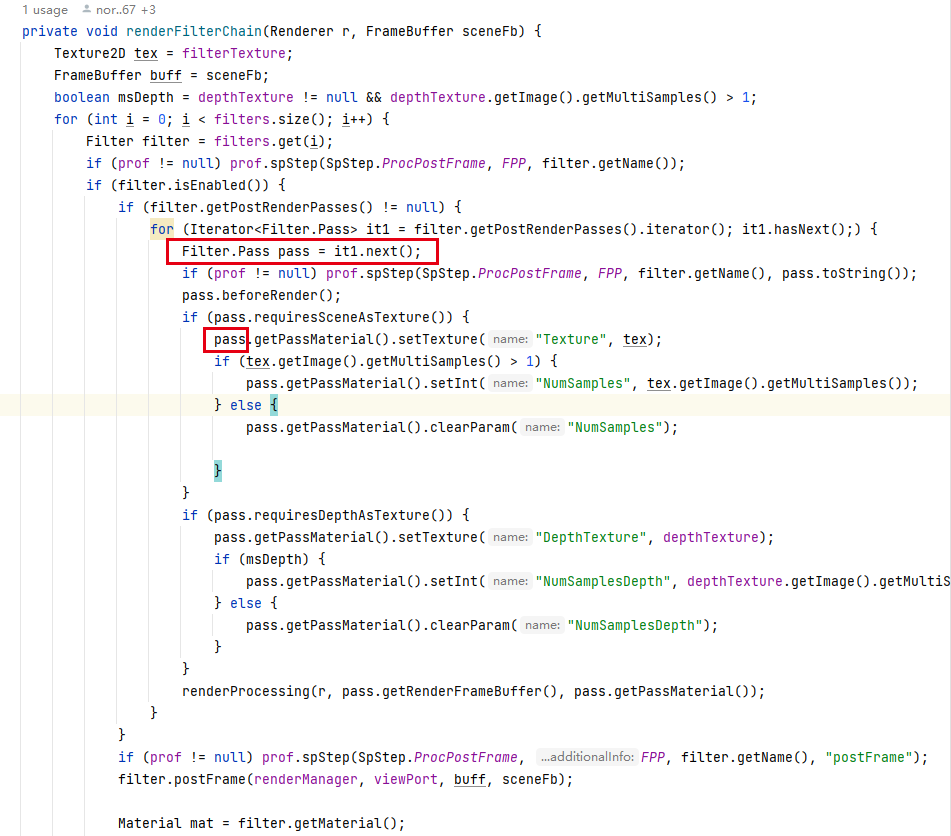

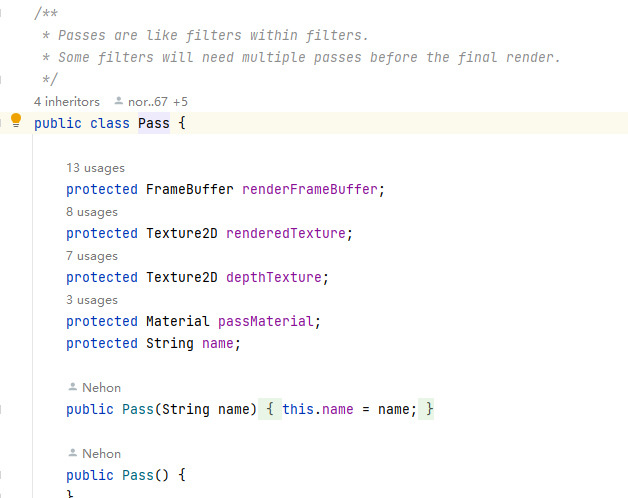

JME3 FG

JME3 does not have a complete FG yet. I only added a basic framework in this PR and have not exposed public APIs to the application layer. So here I will describe my views on the design of the JME3 FG system.

Since Java cannot allocate objects on the stack, although I can provide interfaces similar to lambda expressions for adding Passes, the actual efficiency is uncertain.

Additionally, because Java lacks fine-grained memory control, I lean towards a public API in which the FrameGraph is a member variable. Required CustomPasses and Parameters are registered and created once at the beginning; each frame, the registered Passes and Parameters are looked up, and the FrameGraph is reorganized and executed.

Below is the basic intended usage in the current design:

public class MyCustomPass extends FGRenderQueuePass{

// todo: use FG SRVs (the best approach is for the FG (FrameGraph) to create and manage resources directly, but for now we can only create them through native GL calls)

private FGFrameBuffer prePassBuffer;

private FGUAV prePassColor = null;

private FGUAV prePassDepth = null;

// use native SRVs (alternative to the FG-managed fields above; keep only one of the two sets)

private FrameBuffer prePassBuffer;

private Texture2D prePassColor = null;

private Texture2D prePassDepth = null;

MyCustomPass(){

// todo:Creating and registering resources through FG:

prePassColor = FGBuilderTools.<FGUAV>findOrAddSRV("prePassColor", Desc);

// Creating directly using native methods (not recommended)

prePassColor = new Texture2D(w, h, Image.Format.RGBA16F);

...

// register SRVs(Indicates that this SRV can be exposed externally through this Pass)

registerSource(new FGRenderTargetSource(srvName, prePassColor));

...

// register Sinks(Similar to passParameters in UE, but UE allocates objects on the stack every frame, Java can't do this (not memory friendly), so passParameters are added this way instead.)

registerSink(new FGTextureBindableSink<FGRenderTargetSource.RenderTargetSourceProxy>(sinkName, sinkData));

}

@Override

public void prepare(FGRenderContext renderContext){

//todo:At this stage, if using APIs like Vulkan or DX12, we could compile the SRVs (shader resource views) or other resources required by the passes in parallel.

}

@Override

public void dispatchPassSetup(RenderQueue renderQueue) {

// todo:At this stage, determine which objects from the RenderQueue can enter this pass and generate MeshDrawCommands for them.

}

@Override

public void execute(FGRenderContext renderContext) {

// todo:At this stage, the commands could be submitted to another thread (e.g. the RHIThread), then execute multi-threaded command drawing. However, currently JME3 only has single-threaded OpenGL rendering.

}

}

public class TestMyRenderPipeline extends SimpleApplication{

FrameGraph fg;

MyCustomPass myCustomPass;

public TestMyRenderPipeline(){

fg = new FrameGraph(FGRenderContext);

myCustomPass = new MyCustomPass("MyFirstCustomPass");

// or ↓

myCustomPass = FGBuilderTools.<MyCustomPass>findOrAdd("MyFirstCustomPass");

}

public void simpleUpdate(float tpf){

// Following UE and Unity3d, the FG is cleared and rebuilt every frame. Java cannot allocate objects on the stack, so the graph is kept in member variables and custom passes extend FGRenderQueuePass, instead of creating lambda expression objects every frame (I'm not sure what the actual overhead of that approach would be in Java).

fg.reset();

if(usePrePass){

// sinkData is an SRV that can be obtained from other Passes, or from global resources (FGBuilderTools.FindOrAdd)

myCustomPass.setSinkLinkage(sinkName, sinkData);

fg.addPass(myCustomPass);

}

// ... add other passes

fg.finalize();

fg.execute();

}

}

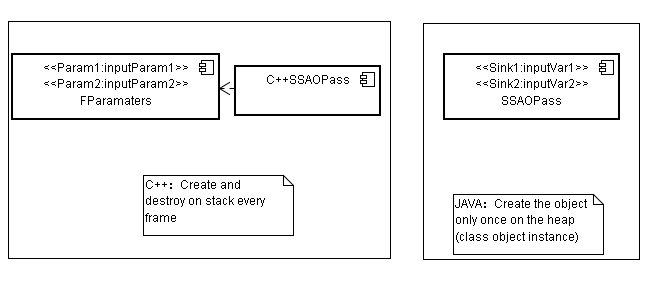

You can see that FG in JME3 contains FGSink and FGSource; essentially they correspond to PassParameters and PassOutputs in UE and Unity3d. C++ allows stack allocation, so in UE you can create PassParameters and PassOutputs directly in local scope each frame, but in Java this may cause performance issues. So I split them into classes (FGSink and FGSource), which you can extend to implement custom PassParameters and PassOutputs. I will not elaborate on how to use them here for now.
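To give a feel for the sink/source idea without committing to the final API, here is a minimal Java sketch. The classes `Source`, `Sink` and `Pass` below are hypothetical stand-ins and not the FGSource/FGSink classes from the PR: a source exposes a pass output under a name, a sink is a named input slot, and linking the two lets the consumer read whatever the producer currently holds without per-frame allocations.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of named pass outputs (sources) and inputs (sinks).
final class SinkSourceSketch {

    /** A named output slot owned by the producing pass. */
    static final class Source<T> {
        final String name;
        private T value;

        Source(String name, T value) {
            this.name = name;
            this.value = value;
        }

        T get() { return value; }
        void set(T value) { this.value = value; }
    }

    /** A named input slot that reads through to the source it is linked to. */
    static final class Sink<T> {
        final String name;
        private Source<T> linked;

        Sink(String name) { this.name = name; }

        void link(Source<T> source) { this.linked = source; }
        boolean isLinked() { return linked != null; }

        T get() {
            if (linked == null) {
                throw new IllegalStateException("sink '" + name + "' is not linked");
            }
            return linked.get();
        }
    }

    /** Stand-in for a pass that keeps its sources and sinks by name. */
    static class Pass {
        private final Map<String, Source<?>> sources = new HashMap<>();
        private final Map<String, Sink<?>> sinks = new HashMap<>();

        void registerSource(Source<?> source) { sources.put(source.name, source); }
        void registerSink(Sink<?> sink) { sinks.put(sink.name, sink); }

        // The caller states the expected type; this sketch does not verify it.
        @SuppressWarnings("unchecked")
        <T> Source<T> source(String name) { return (Source<T>) sources.get(name); }

        @SuppressWarnings("unchecked")
        <T> Sink<T> sink(String name) { return (Sink<T>) sinks.get(name); }
    }

    public static void main(String[] args) {
        Pass prePass = new Pass();
        prePass.registerSource(new Source<>("prePassColor", "color-texture"));

        Pass lighting = new Pass();
        lighting.registerSink(new Sink<String>("inputColor"));

        // Objects are created once; only the linkage is (re)established per frame.
        lighting.<String>sink("inputColor").link(prePass.<String>source("prePassColor"));
        System.out.println(lighting.<String>sink("inputColor").get());
    }
}
```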

It’s worth noting that here we are only discussing the public API of FG in JME3. For the internal implementation I may further reference Unreal Engine’s encapsulation. To do that, we need some understanding of this new system, so we know which JME3 modules will need adjusting for custom pipelines and the FG system: adjust the MaterialSystem to define SRVs in the MaterialDef; adjust the ShaderSystem to associate it with FG; initiate SRV compilation and shader compilation through FG; and provide a unified interface for later parallel compilation. OpenGL does not support parallel compilation directly and may require creating multiple GL contexts, but from a design perspective FG should be oriented towards a multi-threaded architecture.
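As a sketch of what "a unified interface for later parallel compilation" could look like, the Java example below runs a prepare step for every pass on a thread pool and then executes the passes serially in order, which matches a single-threaded GL submission model. Everything here is hypothetical (`Pass`, `NamedPass`, `runFrame`), and the "prepared" result is just a string standing in for compiled resources; real GL work would additionally need a context per worker thread.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical sketch: prepare passes in parallel, execute them serially.
final class ParallelPrepareSketch {

    interface Pass {
        String prepare();                               // may run on any worker thread
        void execute(String prepared, List<String> log); // runs on the calling thread
    }

    /** Trivial pass used for the demo. */
    static final class NamedPass implements Pass {
        private final String name;

        NamedPass(String name) { this.name = name; }

        @Override
        public String prepare() { return name + ":prepared"; }

        @Override
        public void execute(String prepared, List<String> log) {
            log.add("execute " + prepared);
        }
    }

    static List<String> runFrame(List<Pass> passes, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Callable<String>> jobs = new ArrayList<>();
            for (Pass p : passes) {
                jobs.add(p::prepare);
            }
            // invokeAll blocks until every job is done and keeps the job order.
            List<Future<String>> prepared = pool.invokeAll(jobs);

            List<String> log = new ArrayList<>();
            for (int i = 0; i < passes.size(); i++) {
                passes.get(i).execute(prepared.get(i).get(), log);
            }
            return log;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while preparing passes", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a pass failed to prepare", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        List<Pass> passes = Arrays.asList(new NamedPass("gbuffer"), new NamedPass("lighting"));
        for (String line : runFrame(passes, 2)) {
            System.out.println(line);
        }
    }
}
```

A real implementation would keep the thread pool alive across frames instead of creating one per call; it is created inside `runFrame` here only to keep the sketch self-contained.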

I will finalize the FG public API in this post. Once the public API is finalized, I may implement these public APIs later and submit them to this PR for review again. If you have any better suggestions, feel free to provide feedback.