I think some people know better than I do that the JME renderer has not received a major update for a long time. So the first items on my to-do list are:

I plan to add RenderPipeline to JME, which includes:

ForwardPipeline: BasicForward, Forward++.

DeferredPipeline: Deferred, TiledBasedDeferred.

The bigger goal is to add mainstream global illumination (GI) support on this basis, which may include:

LPV (Light Propagation Volumes), LightFieldProbes, SDF.

I have consulted a small group of people privately to see if it is possible. I originally planned to discuss this topic after completing a preliminary previewable version by myself, so that everyone can know what is going to be discussed. But I don’t know if anyone else is doing similar things, so I decided to start an open topic.

If you have ideas or suggestions, you can post them here, thank you!

(Note: I don’t know the final result of this topic, but in any case, I will try to push the update of the JME renderer part by myself)

There’s a feature request: Use Google Angle Backend. I don’t know whether it would help or not, but they may have preconfigured setups for these rendering techniques. (By the way, WebGL in Chrome uses Angle too, and it provides both OpenGL and Vulkan contexts.)

Thanks for your advice. I have been following this project and it looks great. I am not sure whether it can be used in the JME engine through JNI.

In addition, my main to-do item right now is to add some new features to the existing JME architecture in a way that stays compatible with current JME projects. Maybe others will be interested in the suggestions you mentioned.

There was a scriptable rendering pipeline in progress a while ago by Riccardo, but since it’s been a while since I’ve heard any updates on that project, I’m not sure whether it’s still being worked on or not. @RiccardoBlb got any updates on the status of the project?

Hi, Grizeldi. Yes, I know about this. I talked briefly with Ricc in private and had a rough look at his system. Although it is still at the prototype stage, the overall idea is very good.

But considering that it takes a long time to rebuild the entire renderer, this should be a bigger goal (such as JME4.0 or JME5.0).

Currently, I am considering adding new renderer features to the existing JME architecture so that it can be compatible with existing JME3 projects.

As for the larger goal (JME4.0), I personally feel that the time has not yet arrived.

Honestly I have no idea what it is all about.

I’ll take a guess.

The techniques in use are old and maybe outdated?

They don’t support modern (new) hardware and the features that came with it?

Or is it that some things, like the GI you mentioned, are not available yet?

Even if I don’t really understand what is going on, I support the idea of updating and upgrading the existing way of rendering.

@JhonKkk, in case it helps you, there were some earlier attempts to implement deferred rendering in JME. Maybe you can get some ideas by looking at those projects.

Hi Aufrier, nothing big happened. As most people know, the graphics rendering part of JME3 has not really been updated for a long time. It is far behind current mainstream graphics technology, such as RenderPipeline, GI, and (some time ago) the VXGI that NV’s RTX interface brought. I just want to bring JME in sync with this era. I also know that most people are very busy, and I can’t force others to do this, because this is an open source engine. So I will try my best to do what I can (for the development of JME).

Thank you, Ali_RS. I have read what several of them shared, and others in the community tried these things a long time ago, but in the end none of it was really integrated into the JME3 core.

I want to wait until I finish the version at this stage before discussing more in-depth topics.

If you have any questions regarding yadef, feel free to drop me a message. I spent quite a lot of time on optimization.

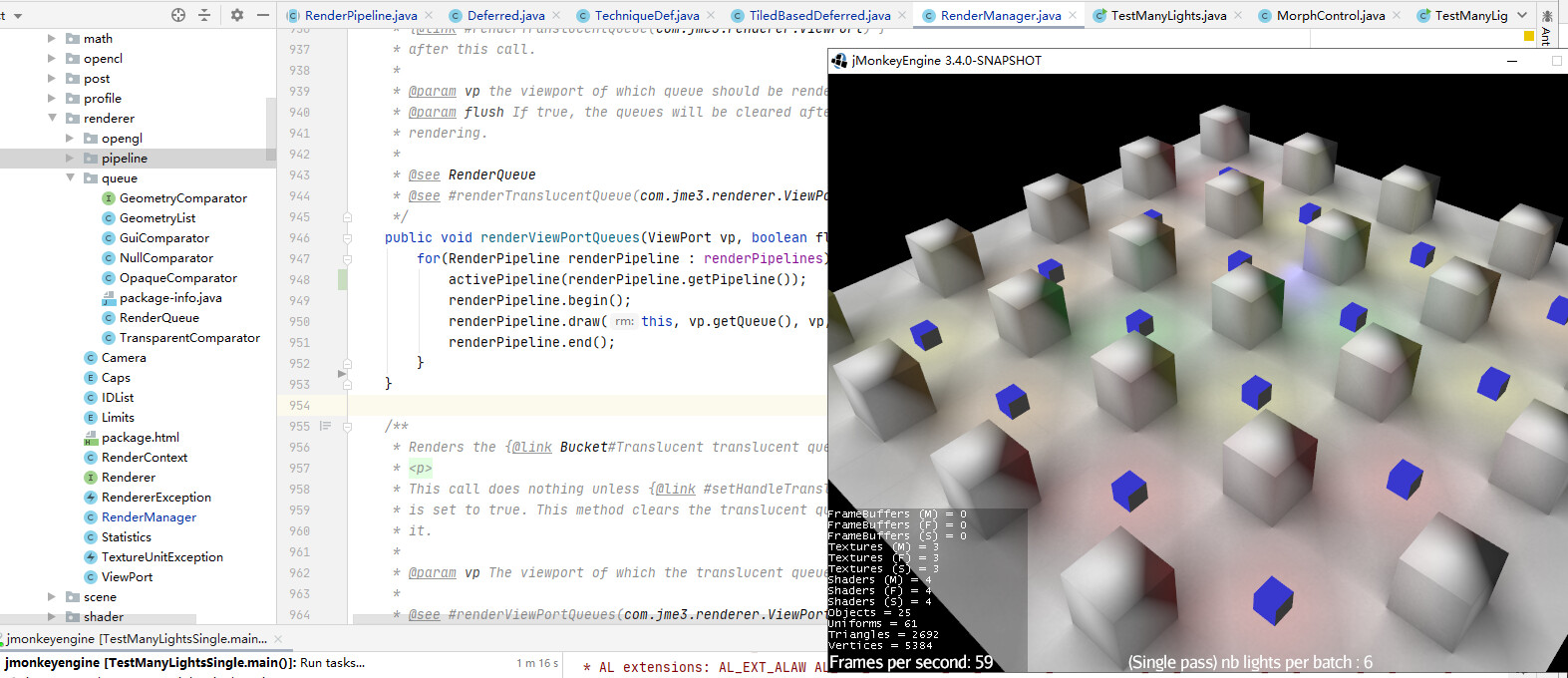

The technique used to draw lots of spot/point lights in one draw call worked wonders.

Using the stencil buffer saved a lot of memory bandwidth/fill rate on scenes with an unlit background.

Please post questions here so we can all learn from the answers.

FWIW I also played with the idea to implement clustered rendering in hybrid mode for forward rendering as well.

I stopped working on that for now, because there were a few changes from @Samwise and/or the SSBO PR from Ricc to implement: SSBO/UBO, Compute Shaders, …

That means the first pass should be determining the requirements and getting the concepts into the engine first.

Another thing: one article I read called our approach to forward rendering old, and there seem to be improvements to standard forward rendering as well. Those are probably a faster and more important goal for us than deferred (because deferred has a few downsides, and especially because PBR/IBL obsoletes “many light” scenarios).

Also, if we really build a one-size-fits-all hybrid renderer, it must not be slowed down by the forward passes.

Hi, Darkchaos. The direct lighting part of JME’s PBR material will not be affected by DeferredShading, but the current indirect lighting (IBL) part of JME is designed for the ForwardPipeline, because there are three LightProbes for shading objects. So my idea is to use better global illumination techniques, such as Light Field Probes (used by Unity3D) and SDF (used by Godot4.0; UE4 also uses this), in an independent pass of DeferredShading. My plan is to include both ForwardPipeline (BasicForward and Forward++) and DeferredPipeline (StandardDeferred and TiledBasedDeferred). I am implementing it now, and it may be usable for testing soon.

In addition, global illumination is very important: even Phong lighting combined with global illumination can give a good lighting result.

You are worried that DeferredPipeline will be affected by ForwardPipeline, right? My idea is to transition the current JME3 version first, because developing an independent JME4.0 (a larger target) requires more discussion.

I have made a first adjustment to the rendering logic code, added a pipeline to control rendering, and tested some JME demo cases, which seem to be compatible. The pipeline is set up as follows. Existing JME materials are initialized as forward by default:

Hey. I’ve heard you like walls of text, so here is your wall of text

This is my updated code for the pipeline i was working on:

It was based on the master branch from at least a year ago, so I rebased it on the current master and tried to fix the merge issues as quickly as possible. I removed some parts of the engine that were not related to the pipeline; I will do a better merge later, when I have time to do it properly.

However, the code is runnable and it contains an example here: SimpleRender.java, which shows how to implement as pipeline passes what we now have hardcoded in Simple/LegacyApplication.

Obviously this is a lot of complex code that the average developer won’t need to write, since we can create a generic pipeline and let developers add their own custom passes at certain stages, eg. postprocessing. The possibility to replace the entire pipeline will be there only for devs that really need it.

So, let me first introduce the general idea behind this approach. We currently have some sort of separation between the various execution stages in the engine; however, there are problems and limitations that sometimes require workarounds or weird approaches, or that cannot be solved without resorting to hacks:

- Fixed render/execution order,

- The engine is tied too tightly to OpenGL-specific logic: there is no issue in implementing different types of OpenGL backends, but adapting the engine to something totally different is pretty hard

- The Renderer logic leaks into the engine logic: the NativeObjects map 1:1 with something in the renderer, however this might not always be the case with non-OpenGL renderers

- Unclear way to define game logic, unclear distinction between gamelogic and render logic. Controls? appstates? why do controls have a render() method??

- No easy way to extend the render pipeline or have things that use previous frame data (eg the temporal stuff, reprojection etc)

- Fixed set of geometry’s buckets, what if i need to render something over something else?

- And more that i don’t remember now…

So the idea of this pipeline work was to fix all of the above and more, by removing all the hardcoded fixed stuff and providing an alternative (that could still be used to implement the hardcoded fixed stuff, to provide backward compatibility). A pipeline is just a queue of passes that are executed in order, and each pass may use the output of any of the previous passes. The attempt is to somewhat standardize the way passes are created, while leaving the developer free to do whatever they want inside a pass, as long as it provides some common methods to interface with the pipeline. This means we can also have passes that contain a local pipeline or use multithreading.

We can also have multiple pipelines in the same app, and they can be run by the same runner in sequence or by multiple runners in different threads.
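To make the "queue of passes" idea concrete, here is a minimal sketch in plain Java. It is not the code from the pipeline branch: `PipelinePass`, `FunctionPass` and `SketchPipeline` are made-up names, and passing outputs around in a map keyed by pass name is just an assumption chosen to keep the example short.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical pass contract: a name plus one method the pipeline calls each frame. */
interface PipelinePass {
    String name();

    /** Receives the outputs of all earlier passes (by pass name) and returns its own output. */
    Object run(Map<String, Object> earlierOutputs);
}

/** Convenience pass that wraps a function, so short passes can be written as lambdas. */
final class FunctionPass implements PipelinePass {
    private final String name;
    private final Function<Map<String, Object>, Object> body;

    FunctionPass(String name, Function<Map<String, Object>, Object> body) {
        this.name = name;
        this.body = body;
    }

    @Override
    public String name() {
        return name;
    }

    @Override
    public Object run(Map<String, Object> earlierOutputs) {
        return body.apply(earlierOutputs);
    }
}

/** Hypothetical pipeline: an ordered queue of passes executed front to back. */
final class SketchPipeline {
    private final List<PipelinePass> passes = new ArrayList<>();

    SketchPipeline add(PipelinePass pass) {
        passes.add(pass);
        return this;
    }

    /** Runs every pass once, in insertion order, and returns all outputs keyed by pass name. */
    Map<String, Object> runFrame() {
        Map<String, Object> outputs = new LinkedHashMap<>();
        for (PipelinePass pass : passes) {
            // A pass may read earlier outputs but cannot modify them.
            Object result = pass.run(Collections.unmodifiableMap(outputs));
            outputs.put(pass.name(), result);
        }
        return outputs;
    }
}
```

With this shape, an "extract" pass could return a list of geometries and a later "render" pass could look it up with `earlierOutputs.get("extract")`. A pass that owns a nested `SketchPipeline` would be one way to get the local pipelines mentioned above.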

So, some of the approaches here are:

- Introduction of StatefulObject: objects that store a state associated with something (object, class, key… whatever, just think of it as a fast spatial.set/getUserData). A renderer, or any other module in the engine (the audio renderer too), can store a state on what we now call NativeObjects (eg. an Image object) that contains all the context of said object in the renderer/module. For example, an Image will have a GLImage state that contains its ID in OpenGL.

- SmartObject: created by the PipelinePointerFactory, these are kinda like “pointers”, but actually empty objects that represent a real object that is somewhere else. The pipeline can resolve them every frame and obtain the real object they point to. They can point to an absolute object or to an object relative to the pipeline (eg. previous frame). They can also change the object they point to when they are resolved, eg. textures can be resized or their filtering can change.

- No more buckets or viewports: just send a parent Node, camera, filter and sorter to a GeometriesExtractorPass. It will extract the geometry queues, sort them and return them. Then connect the queues to a RenderPass and they will be rendered to a framebuffer.

- No more techniques: the techniques are implemented in the render pass. There might be a ForwardGeometriesRenderPass or a DeferredLightingGeometriesRenderPass, and the developer can attach and detach them and use them together with different geometries (eg. opaque objects can use deferred lighting, while transparent objects must use forward; remember the filter from before, you can use it to separate them).

- Use of WorldParams BufferObjects: instead of having several confusing params in shaders, this class can convert some objects into corresponding BufferObjects that are bound to structs in the shaders. This translates to things like Geometry.worldMatrix instead of m_WorldMatrix and is generally faster, since only the data that changes is uploaded to the GPU each time.

- Controls for game logic: I know many people here like ECS, and that’s fine. However, I think controls can provide a valid approach for implementing game logic. In the pipeline we have a pass that extracts the controls from the scene and a pass that executes them. I was also thinking about multispatial controls, where a single control can have multiple instances attached to many spatials; the extractor will figure that out and run it only once for an array of spatials (ie. something like ECS systems). Spatial.setUserData can also be optimized to store integer keys up to a certain number in an int[], which gives multispatial controls a fast way to store data on each spatial they are attached to.
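As an illustration of the StatefulObject item in the list above, here is a small sketch of how per-module state could hang off an engine object. All class names (`StatefulObjectSketch`, `ImageSketch`, `GLImageState`, `FakeGLRenderer`) are hypothetical and the real classes in the branch may look quite different; the fake renderer hands out counter values instead of calling OpenGL.

```java
import java.util.IdentityHashMap;
import java.util.Map;
import java.util.function.Supplier;

/** Hypothetical base class: lets any module attach its own private state to an object. */
class StatefulObjectSketch {
    // Keyed by owner identity (for example one renderer instance), not by equals().
    private final Map<Object, Object> states = new IdentityHashMap<>();

    /** Returns the state stored for this owner, creating it on first access. */
    @SuppressWarnings("unchecked")
    <T> T getState(Object owner, Supplier<T> factory) {
        return (T) states.computeIfAbsent(owner, key -> factory.get());
    }

    boolean hasState(Object owner) {
        return states.containsKey(owner);
    }
}

/** Engine-side image: it knows nothing about OpenGL. */
final class ImageSketch extends StatefulObjectSketch {
    final int width;
    final int height;

    ImageSketch(int width, int height) {
        this.width = width;
        this.height = height;
    }
}

/** Renderer-private state for an image, for example its texture id. */
final class GLImageState {
    int textureId = -1; // -1 means "not uploaded yet"
}

/** Stand-in renderer that hands out fake ids instead of calling into OpenGL. */
final class FakeGLRenderer {
    private int nextId = 1;

    /** Uploads the image on first use and returns the id stored in its state afterwards. */
    int upload(ImageSketch image) {
        GLImageState state = image.getState(this, GLImageState::new);
        if (state.textureId == -1) {
            state.textureId = nextId++;
        }
        return state.textureId;
    }
}
```

The point of keying the state by owner is that two renderers (or a renderer and an audio module) can each keep their own context on the same object without the object knowing about either of them.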

The pipeline package also contains a version of bullet that supports thread-safe, non-blocking asynchronous execution.

You can see here how much better the performance of asynchronous execution (I call it detached in the video) is compared with parallel (which is synced at the end of each frame). This doesn’t come for free, as the developer has to know how to keep physics-related code separate from the game logic, or handle interaction in a thread-safe manner. I’ve been using this approach in my project and it is actually quite easy to do, once you know the problem exists.

The bullet pass is implemented as a pipeline pass, so the thread where it is executed depends on the pipeline or runner to which it is attached, in the example i use the same runner to execute rendering, logic and physics, so it will work as the SEQUENTIAL physics space in jme3.
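The difference between parallel and detached execution can be sketched with plain Java threads. This is only an illustration and has nothing to do with the actual bullet integration: `ToyPhysics`, `PhysicsSnapshot` and `PhysicsModes` are invented names, the "physics" is a single falling ball, and publishing immutable snapshots through an `AtomicReference` is just one assumed way to keep the interaction thread safe.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Future;
import java.util.concurrent.atomic.AtomicReference;
import java.util.concurrent.locks.LockSupport;

/** Immutable result of one physics step, safe to hand to another thread. */
final class PhysicsSnapshot {
    final long step;
    final double ballHeight;

    PhysicsSnapshot(long step, double ballHeight) {
        this.step = step;
        this.ballHeight = ballHeight;
    }
}

/** Toy stand-in for a physics space: one ball falling under gravity. */
final class ToyPhysics {
    private long step;
    private double height = 100.0;
    private double velocity;

    PhysicsSnapshot step(double dt) {
        velocity -= 9.81 * dt;
        height = Math.max(0.0, height + velocity * dt);
        return new PhysicsSnapshot(++step, height);
    }
}

final class PhysicsModes {
    private static final double DT = 1.0 / 60.0;

    /** Parallel: physics overlaps with the frame work, but the frame waits for it at the end. */
    static PhysicsSnapshot parallelFrame(ExecutorService pool, ToyPhysics physics, Runnable frameWork) {
        Callable<PhysicsSnapshot> task = () -> physics.step(DT);
        Future<PhysicsSnapshot> pending = pool.submit(task);
        frameWork.run();
        try {
            return pending.get(); // the per-frame sync point
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for physics", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("physics step failed", e.getCause());
        }
    }

    /** Detached: physics loops on its own thread and publishes snapshots; frames never wait. */
    static Thread startDetached(ToyPhysics physics, AtomicReference<PhysicsSnapshot> latest) {
        Thread thread = new Thread(() -> {
            while (!Thread.currentThread().isInterrupted()) {
                latest.set(physics.step(DT));
                LockSupport.parkNanos(1_000_000L); // crude pacing, roughly 1 ms per step
            }
        }, "physics-detached");
        thread.setDaemon(true);
        thread.start();
        return thread;
    }
}
```

In the detached case the render/logic thread only ever calls `latest.get()` and works with whatever snapshot is current, which is why game code must not touch the physics objects directly. Interrupting the returned thread stops the loop.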

There is also the shell of a DeferredPBR pass that i was working on when i had to move to something else.

I know this is a lot of stuff, and some of it is weird, hard to understand or unconventional, and probably might need a rework, and sadly i can’t do it in the short term. However the code is there, it works™, if you think some ideas are worth looking into, or you want to change, continue it or port some part of it to a different system, feel free to do as you wish, also if you want to ask something i can reply in public or private, it’s not like i don’t have time, it’s just that i don’t have time to work on this consistently.

BONUS:

I rewrote some of the post-processing effects, but I wanted to make them applicable to multiple textures/render outputs in the same pass, so I added a #for preprocessing directive to the GLSL loader:

    #for i=0..6 ( #ifdef SCENE_$i $0 #endif )
    uniform sampler2D m_Scene$i;
    #endfor

This will be compiled into:

    #ifdef SCENE_0
    uniform sampler2D m_Scene0;
    #endif
    #ifdef SCENE_1
    uniform sampler2D m_Scene1;
    #endif
    #ifdef SCENE_2
    uniform sampler2D m_Scene2;
    #endif

.... etc from 0 to 6

Obviously this is optional and can be implemented in the shader, it just saves some code and makes the shader look nicer
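For anyone curious how such a directive could be expanded, here is a rough sketch of a text preprocessor in Java. It is not the loader code from the branch. `ForDirectiveSketch` is a made-up name, and the sketch assumes that the range end is inclusive, that `$0` in the parenthesised template stands for the body between `#for` and `#endfor`, that whitespace around `$0` becomes line breaks, and that loops are not nested.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

final class ForDirectiveSketch {
    // Matches: #for <var>=<from>..<to> ( <template containing $0> )
    private static final Pattern HEADER = Pattern.compile(
            "^\\s*#for\\s+(\\w+)\\s*=\\s*(\\d+)\\s*\\.\\.\\s*(\\d+)\\s*\\((.*)\\)\\s*$");

    /** Expands every #for ... #endfor block in the source and returns the resulting text. */
    static String expand(String source) {
        StringBuilder out = new StringBuilder();
        String[] lines = source.split("\\R");
        for (int n = 0; n < lines.length; n++) {
            Matcher header = HEADER.matcher(lines[n]);
            if (!header.matches()) {
                out.append(lines[n]).append('\n');
                continue;
            }
            // Collect the body lines up to the next #endfor (no nesting in this sketch).
            StringBuilder body = new StringBuilder();
            n++;
            while (n < lines.length && !lines[n].trim().equals("#endfor")) {
                if (body.length() > 0) {
                    body.append('\n');
                }
                body.append(lines[n]);
                n++;
            }
            if (n == lines.length) {
                throw new IllegalArgumentException("#for without matching #endfor");
            }
            String variable = "$" + header.group(1);
            int from = Integer.parseInt(header.group(2));
            int to = Integer.parseInt(header.group(3));
            // Split the template at $0: the text before and after it wraps the body.
            String[] wrap = header.group(4).trim().split("\\s*\\$0\\s*", 2);
            String before = wrap[0];
            String after = wrap.length > 1 ? wrap[1] : "";
            for (int value = from; value <= to; value++) { // inclusive end is an assumption
                String index = Integer.toString(value);
                if (!before.isEmpty()) {
                    out.append(before.replace(variable, index)).append('\n');
                }
                out.append(body.toString().replace(variable, index)).append('\n');
                if (!after.isEmpty()) {
                    out.append(after.replace(variable, index)).append('\n');
                }
            }
        }
        return out.toString();
    }
}
```

Calling `ForDirectiveSketch.expand(shaderSource)` before handing the text to the GLSL compiler would be enough for the sampler example above. Lines outside a `#for` block pass through unchanged.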

Hi Rick, I agree with you. Have you considered completing this idea in the next major version of JME (including refactoring the Material system and the Render system)?

But yes, I also considered refactoring, including the ones you mentioned, as well as the material system. For example, developers can not only define a series of Techniques in MaterialDef, but also include multiple Passes in a Technique to implement complex rendering configurations.

However, since this requires a lot of design documents to organize the whole idea, I wonder if you are considering implementing these ideas in the next major version (such as JME4 or JME5)? Maybe I can help.

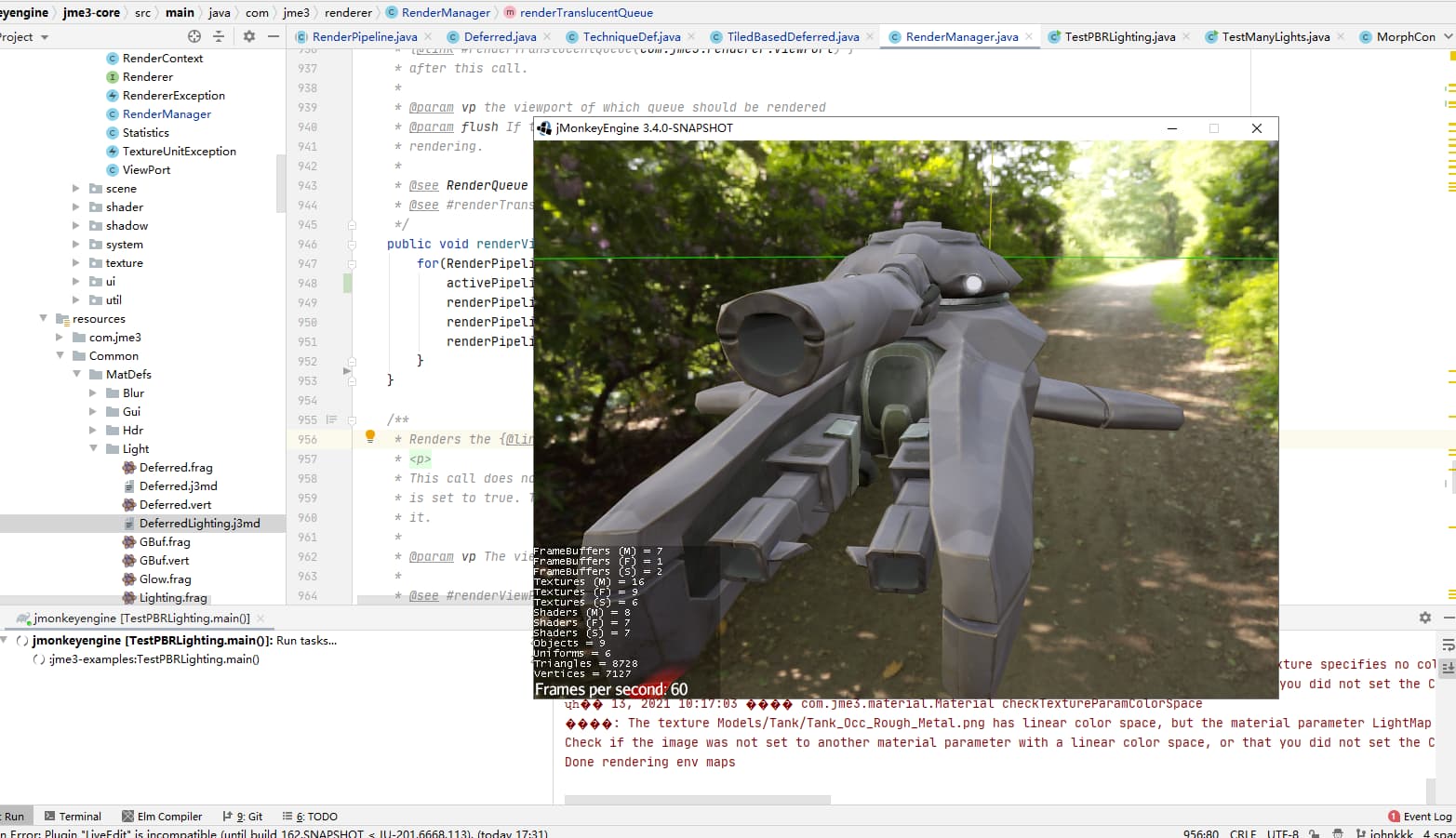

But at the moment I am working on something else. Within the current JME architecture, I have adjusted the rendering pipeline, and I plan to introduce mainstream global illumination techniques into the current JME renderer. The reason for building on the current JME architecture is to stay compatible with most existing JME projects.

Therefore, after completing the current to-do list, I will have time to refactor the next big JME version with you (if you are willing to let me help…).

Of course, it is also possible not to use the working method of MaterialDef at all, that is, there are no more Techniques, but a series of SubPass. Developers can define a Program as a combination of a series of SubPass.

Currently, I have encapsulated part of the API, but I would like to know what people think: should Forward and Deferred be added directly to Lighting.j3md and PBRLighting.j3md, or be separated into DeferredLighting.j3md and DeferredPBRLighting.j3md? If they are added to Lighting.j3md and PBRLighting.j3md, switching the rendering mode in a JME project becomes more convenient, because there is no need to replace the material separately for each Geometry.