Please post any initial feedback for the PBR FAQ thread in here, so we can keep that thread a bit cleaner,

cheers

TT


Can I use this for the wiki?

Normals are needed for lighting. They are not used for face culling. Only winding is used for face culling.

But to know how much light is hitting a surface, you need to know which way it’s facing, i.e., the normal.
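To make the culling/lighting split concrete, here is a minimal sketch in plain Java arrays rather than jME's math classes. The class and method names are hypothetical. It assumes counter-clockwise front faces (OpenGL's default front-face winding) with back-face culling enabled, and a y-up screen space. Culling only looks at the sign of the projected triangle's area, while the Lambert diffuse term needs the normal.

```java
/**
 * Hypothetical helpers: back-face culling needs only the winding of the projected
 * triangle, while diffuse lighting needs the surface normal.
 */
final class CullingVsLighting {

    /** Twice the signed area of a screen-space triangle; positive means counter-clockwise (y up). */
    static double signedArea2(double[] a, double[] b, double[] c) {
        return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1]);
    }

    /**
     * Assumes CCW = front face and back faces are culled.
     * Zero-area triangles are treated as culled here. No normal is involved.
     */
    static boolean isCulled(double[] a, double[] b, double[] c) {
        return signedArea2(a, b, c) <= 0.0;
    }

    /** Lambert's cosine term; both vectors unit length, toLight points from the surface to the light. */
    static double lambert(double[] normal, double[] toLight) {
        double d = normal[0] * toLight[0] + normal[1] * toLight[1] + normal[2] * toLight[2];
        return Math.max(0.0, d); // light arriving from behind contributes nothing
    }

    public static void main(String[] args) {
        double[] p0 = {0, 0}, p1 = {1, 0}, p2 = {0, 1};
        System.out.println(isCulled(p0, p1, p2)); // prints false (CCW, front-facing)
        System.out.println(isCulled(p0, p2, p1)); // prints true (same triangle, CW)

        double[] up = {0, 1, 0};
        double s = Math.sqrt(0.5);
        System.out.println(lambert(up, new double[] {0, 1, 0})); // light straight above: prints 1.0
        System.out.println(lambert(up, new double[] {s, s, 0})); // light at 45 degrees: about 0.707
    }
}
```

Flipping the vertex order changes what gets culled without touching any normal, and the same triangle can be lit completely differently just by changing its normal.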

A common next question might be why this isn’t just calculated in the shader or somewhere else:

Since polygonal surfaces are only approximations of potentially curved surfaces, normals generally cannot be calculated directly from a triangle, because that requires some knowledge of the surface’s curvature. (Even a dumb straight average of the shared triangles’ normals is not enough.)
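For comparison, this is all a single triangle can tell you on its own: one flat face normal from the cross product of two edges, shared by every point on the triangle. A minimal sketch, assuming right-handed coordinates and counter-clockwise winding; the helper names are hypothetical.

```java
/** Hypothetical helper: the only normal a lone triangle can give you is its flat face normal. */
final class FaceNormal {

    static double[] sub(double[] a, double[] b) {
        return new double[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    static double[] normalize(double[] v) {
        double len = Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
        if (len == 0.0) {
            throw new IllegalArgumentException("degenerate triangle");
        }
        return new double[] {v[0] / len, v[1] / len, v[2] / len};
    }

    /** Unit normal of triangle (a, b, c); with CCW winding it points toward the viewer. */
    static double[] faceNormal(double[] a, double[] b, double[] c) {
        return normalize(cross(sub(b, a), sub(c, a)));
    }

    public static void main(String[] args) {
        double[] n = faceNormal(new double[] {0, 0, 0}, new double[] {1, 0, 0}, new double[] {0, 1, 0});
        System.out.println(java.util.Arrays.toString(n)); // prints [0.0, 0.0, 1.0]
    }
}
```

Every pixel of that triangle would get the same normal, so the faceted look can only be smoothed out by per-vertex normals that carry curvature information, usually authored or generated in the modeling tool.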

A tangent helps orient the texture in 3D space. It should essentially point along the texture’s ‘x axis’ in 3D space. Again, this is necessary because surfaces curve and/or triangles may be oriented in any direction relative to the square texture. The binormal is orthogonal to both the normal and the tangent and can generally be calculated from them.
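One common way to get a per-triangle tangent is to solve for the direction in which the texture’s u coordinate increases across the triangle, then rebuild the binormal as cross(normal, tangent). The sketch below assumes the glTF-style convention of storing a handedness sign w alongside the tangent (glTF defines bitangent = cross(normal, tangent.xyz) * tangent.w). It is not necessarily what jME’s own tangent generator does, and all names are hypothetical. Real per-vertex tangents would normally be accumulated over all triangles sharing a vertex.

```java
/**
 * Hypothetical sketch: a per-triangle tangent from positions and UVs, with the
 * binormal rebuilt from the normal, the tangent and a handedness sign w.
 */
final class TangentFrame {

    static double[] sub(double[] a, double[] b) {
        return new double[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static double[] scale(double[] v, double s) {
        return new double[] {v[0] * s, v[1] * s, v[2] * s};
    }

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    static double[] normalize(double[] v) {
        return scale(v, 1.0 / Math.sqrt(dot(v, v)));
    }

    /**
     * Returns {tx, ty, tz, w}: the unit tangent (the texture's +u direction in 3D,
     * made orthogonal to the unit normal n) and w = +1, or -1 for mirrored UVs.
     */
    static double[] tangent(double[] p0, double[] p1, double[] p2,
                            double[] uv0, double[] uv1, double[] uv2, double[] n) {
        double[] e1 = sub(p1, p0);
        double[] e2 = sub(p2, p0);
        double du1 = uv1[0] - uv0[0], dv1 = uv1[1] - uv0[1];
        double du2 = uv2[0] - uv0[0], dv2 = uv2[1] - uv0[1];
        double det = du1 * dv2 - du2 * dv1;
        if (det == 0.0) {
            throw new IllegalArgumentException("degenerate UV mapping");
        }
        double r = 1.0 / det;
        double[] t = scale(sub(scale(e1, dv2), scale(e2, dv1)), r); // direction of +u
        double[] b = scale(sub(scale(e2, du1), scale(e1, du2)), r); // direction of +v
        // Gram-Schmidt: drop the component of t along n so T and N are orthogonal.
        t = normalize(sub(t, scale(n, dot(n, t))));
        // If cross(n, t) points away from +v, the UVs are mirrored on this triangle.
        double w = dot(cross(n, t), b) < 0.0 ? -1.0 : 1.0;
        return new double[] {t[0], t[1], t[2], w};
    }

    /** Binormal (a.k.a. bitangent) = cross(N, T) * w. */
    static double[] binormal(double[] n, double[] tangentW) {
        double[] t = {tangentW[0], tangentW[1], tangentW[2]};
        return scale(cross(n, t), tangentW[3]);
    }

    public static void main(String[] args) {
        double[] n = {0, 0, 1};
        double[] tw = tangent(new double[] {0, 0, 0}, new double[] {1, 0, 0}, new double[] {0, 1, 0},
                new double[] {0, 0}, new double[] {1, 0}, new double[] {0, 1}, n);
        System.out.println(java.util.Arrays.toString(tw));
        System.out.println(java.util.Arrays.toString(binormal(n, tw)));
    }
}
```

With mirrored UVs (u running the other way across the triangle), w comes out as -1, which keeps the rebuilt binormal pointing along +v.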

The normal, tangent, and binormal represent the 3D axes of ‘texture space’… where the tangent is x (or u), the binormal is y (or v), and the normal is depth.
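Putting the three axes to work: a normal map stores a direction in texture space, and the tangent, binormal and normal act as the columns of a matrix that takes it back into the mesh’s space. A minimal sketch, assuming the common encoding where each channel in [0, 1] maps to [-1, 1] and an orthonormal T/B/N frame; the names are hypothetical.

```java
/**
 * Hypothetical sketch: tangent, binormal and normal used as the axes of texture
 * space, turning a normal-map sample into a normal in the mesh's space.
 */
final class TbnExample {

    /** Maps a normal-map channel stored in [0, 1] to a component in [-1, 1]. */
    static double decode(double channel) {
        return channel * 2.0 - 1.0;
    }

    /**
     * Perturbed normal = T * x + B * y + N * z, where (x, y, z) is the decoded
     * texel. T, B and N are assumed unit length and mutually orthogonal.
     */
    static double[] perturbedNormal(double[] t, double[] b, double[] n, double[] rgb) {
        double x = decode(rgb[0]);
        double y = decode(rgb[1]);
        double z = decode(rgb[2]);
        double[] out = new double[3];
        for (int i = 0; i < 3; i++) {
            out[i] = t[i] * x + b[i] * y + n[i] * z;
        }
        // Re-normalize: quantized texels rarely decode to exactly unit length.
        double len = Math.sqrt(out[0] * out[0] + out[1] * out[1] + out[2] * out[2]);
        for (int i = 0; i < 3; i++) {
            out[i] /= len;
        }
        return out;
    }

    public static void main(String[] args) {
        double[] t = {1, 0, 0}, b = {0, 1, 0}, n = {0, 0, 1};
        // A "flat" texel leaves the surface normal unchanged: prints [0.0, 0.0, 1.0]
        System.out.println(java.util.Arrays.toString(perturbedNormal(t, b, n, new double[] {0.5, 0.5, 1.0})));
        // (1.0, 0.5, 0.5) decodes to (1, 0, 0), i.e. the tangent direction: prints [1.0, 0.0, 0.0]
        System.out.println(java.util.Arrays.toString(perturbedNormal(t, b, n, new double[] {1.0, 0.5, 0.5})));
    }
}
```

A flat texel of (0.5, 0.5, 1.0) decodes to (0, 0, 1) and returns the unperturbed normal, which is why flat areas of a normal map look light blue.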

I think even in PBR you only really need them if you have normal maps or bump maps… because these need to know how to orient those bumps/local normals properly and so on. Maybe roughness needs them? (I feel like it doesn’t, since global illumination should be in world space and not texture space. Texture space is only needed to interpret textures, i.e., normal map textures and bump map textures.)

TYVM, added.

Of course.

Right now it’s a living document, so it may be worth holding off a bit.

OK, I have a question… Can I bake the environment camera’s snapshot into the scene to optimize loading? Let me elaborate… I have a space game. I take a snapshot with the environment camera, load it into the light probe, and then save the .j3o file that has the light probe. When I load the .j3o file again, will the light probe’s image have been saved properly and be re-loaded? I don’t care about taking snapshots of the ships in the scene. I just want the env map of the solar system and a light probe so that I can get a nice chrome texture on the ships. It seems like without a light probe, all I get is a shiny plastic look on the ships.

Question #2: Does it matter where the light probe is in the scene? Or can it just be at (0, 0, 0)?

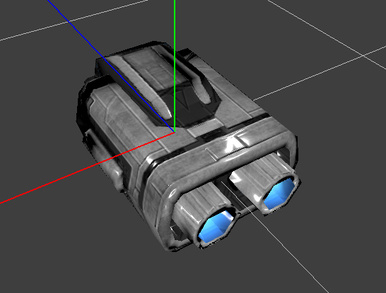

EDIT: Here is an example of what I mean by the shiny plastic look. This happens regardless of how I tweak the roughness and metallic values.

We’ll need more information. That looks pretty glossy to me.

I’ve had no problems getting non-glossy stuff with PBR… to the point of having no specular at all, even.

@thetoucher You might want to add Texture Haven to the list of sites where you can get PBR textures/materials: https://texturehaven.com/

I was going for a realistic chrome / polished aluminum look. I got a glossy plastic look instead.

I believe PBR was added between JME 3.1 and JME 3.2.0, so there was no support in 3.1.

TY, added.

Thanks for the clarification, updated.

Can someone tell me why I’m getting this issue? It’s a freshly added skeleton with just a walk animation. I never had a problem like this before, but now I’m using .gltf and I get this issue. I’m using Blender 2.79b with the standard (KhronosGroup) glTF exporter plugin for that version.

Screenshots below (error when converting .gltf to .j3o):

Not a PBR issue; it’s a glTF issue. Wrong thread.

I’ve noticed that the first link below is broken due to a typo: