Alright, I scrapped what I had from above and tried a new approach, and I think I have it:

First, I took the initContextFirstTime() method from LwjglContext and moved its logic into a new method, initContext(boolean). When the parameter is true, it does pretty much the same thing as before. When it is false, it skips creating the renderer (it just calls initialize() on the existing one) and skips the input initialization.

```java
private void initContext(boolean first) {
    if (!GLContext.getCapabilities().OpenGL20) {
        throw new RendererException("OpenGL 2.0 or higher is "
                + "required for jMonkeyEngine");
    }

    int vers[] = getGLVersion(settings.getRenderer());
    if (vers != null) {
        if (first) {
            GL gl = new LwjglGL();
            GLExt glext = new LwjglGLExt();
            GLFbo glfbo;

            if (GLContext.getCapabilities().OpenGL30) {
                glfbo = new LwjglGLFboGL3();
            } else {
                glfbo = new LwjglGLFboEXT();
            }

            if (settings.getBoolean("GraphicsDebug")) {
                gl = (GL) GLDebug.createProxy(gl, gl, GL.class, GL2.class, GL3.class, GL4.class);
                glext = (GLExt) GLDebug.createProxy(gl, glext, GLExt.class);
                glfbo = (GLFbo) GLDebug.createProxy(gl, glfbo, GLFbo.class);
            }

            if (settings.getBoolean("GraphicsTiming")) {
                GLTimingState timingState = new GLTimingState();
                gl = (GL) GLTiming.createGLTiming(gl, timingState, GL.class, GL2.class, GL3.class, GL4.class);
                glext = (GLExt) GLTiming.createGLTiming(glext, timingState, GLExt.class);
                glfbo = (GLFbo) GLTiming.createGLTiming(glfbo, timingState, GLFbo.class);
            }

            if (settings.getBoolean("GraphicsTrace")) {
                gl = (GL) GLTracer.createDesktopGlTracer(gl, GL.class, GL2.class, GL3.class, GL4.class);
                glext = (GLExt) GLTracer.createDesktopGlTracer(glext, GLExt.class);
                glfbo = (GLFbo) GLTracer.createDesktopGlTracer(glfbo, GLFbo.class);
            }

            renderer = new GLRenderer(gl, glext, glfbo);
        }
        renderer.initialize();
    } else {
        throw new UnsupportedOperationException("Unsupported renderer: " + settings.getRenderer());
    }

    if (GLContext.getCapabilities().GL_ARB_debug_output && settings.getBoolean("GraphicsDebug")) {
        ARBDebugOutput.glDebugMessageCallbackARB(new ARBDebugOutputCallback(new LwjglGLDebugOutputHandler()));
    }

    renderer.setMainFrameBufferSrgb(settings.isGammaCorrection());
    renderer.setLinearizeSrgbImages(settings.isGammaCorrection());

    if (first) {
        // Init input
        if (keyInput != null) {
            keyInput.initialize();
        }
        if (mouseInput != null) {
            mouseInput.initialize();
        }
        if (joyInput != null) {
            joyInput.initialize();
        }
    }
}
```
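For anyone skimming, here is a stripped-down sketch of the delegation pattern, with no LWJGL or jME dependencies. StubRenderer and ContextInitSketch are hypothetical stand-ins I made up for illustration, and the assumption that initContextFirstTime() passes true while reinitContext() passes false is just my reading of the description, not a copy of the actual change:

```java
// Hypothetical stand-in for the real renderer; not a jMonkeyEngine class.
class StubRenderer {
    int initializeCalls = 0;

    void initialize() {
        initializeCalls++;
    }
}

// Hypothetical model of the context class, reduced to the parts that
// differ between first-time initialization and a restart.
class ContextInitSketch {
    StubRenderer renderer;
    int renderersCreated = 0;
    int inputInitCalls = 0;

    // First-time path: create the renderer and initialize input.
    void initContextFirstTime() {
        initContext(true);
    }

    // Restart path: reuse the existing renderer and skip input setup.
    void reinitContext() {
        initContext(false);
    }

    private void initContext(boolean first) {
        if (first) {
            renderer = new StubRenderer();
            renderersCreated++;
        }
        // Runs on every call, so GL state is rebuilt after a restart.
        renderer.initialize();
        if (first) {
            // Stands in for keyInput/mouseInput/joyInput initialization.
            inputInitCalls++;
        }
    }
}
```

The point of the shape is that a restart reuses the same renderer instance and only re-runs initialize() on it, so anything holding a reference to the renderer stays valid across restarts.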

Now, whenever I restart the context, LwjglDisplay does this:

```java
@Override
public void runLoop() {
    // This method is overridden to do restart
    if (needRestart.getAndSet(false)) {
        try {
            createContext(settings);
        } catch (LWJGLException ex) {
            logger.log(Level.SEVERE, "Failed to set display settings!", ex);
        }
        listener.reshape(settings.getWidth(), settings.getHeight());
        if (renderable.get()) {
            reinitContext();
        } else {
            assert getType() == Type.Canvas;
        }
        logger.fine("Display restarted.");
    } else if (Display.wasResized()) {
        int newWidth = Display.getWidth();
        int newHeight = Display.getHeight();
        settings.setResolution(newWidth, newHeight);
        listener.reshape(newWidth, newHeight);
    }

    super.runLoop();
}
```

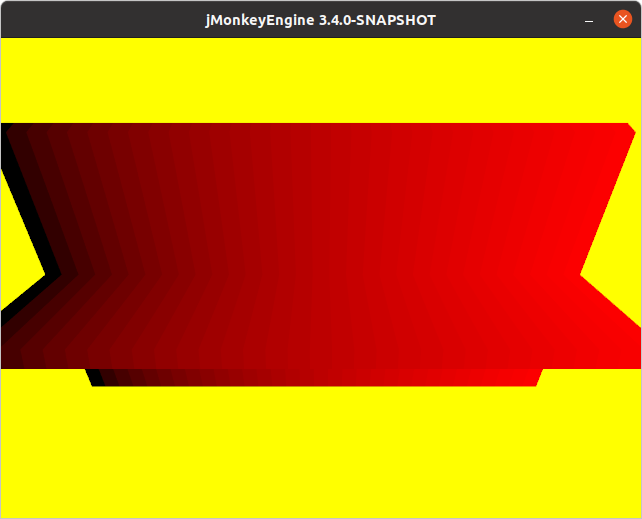

That said, I remembered the duplication issue from before, so I checked the memory usage. It increases every time I do a restart, so something isn’t being cleared. However, the controls I attached to the boxes in my example aren’t triggering more often than they should, so whatever is being duplicated at least isn’t being processed like before.
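If anyone wants to reproduce the measurement, one rough way to do it from inside the app is to sample memory before and after each restart. This is only a sketch using standard JDK calls; HeapProbe is a hypothetical helper name, and it is not necessarily how the numbers above were taken. It reports the Java heap and the JVM's "direct" buffer pool separately, on the assumption that native buffers could be part of the growth; it does not see GPU-side memory at all.

```java
import java.lang.management.BufferPoolMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper for sampling memory around a context restart.
class HeapProbe {

    // Approximate bytes in use on the Java heap.
    static long usedHeapBytes() {
        Runtime rt = Runtime.getRuntime();
        // Only a hint to the JVM; the result is still approximate.
        System.gc();
        return rt.totalMemory() - rt.freeMemory();
    }

    // Approximate bytes held by direct NIO buffers, as reported by the JVM.
    static long directBufferBytes() {
        long total = 0;
        for (BufferPoolMXBean pool
                : ManagementFactory.getPlatformMXBeans(BufferPoolMXBean.class)) {
            if ("direct".equals(pool.getName())) {
                // getMemoryUsed() may report -1 when the value is unknown.
                total += Math.max(0, pool.getMemoryUsed());
            }
        }
        return total;
    }
}
```

Logging both values after each restart should show whether the growth is on the heap or in direct buffers, which would narrow down where to look.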

While trying to find a better stress test, I ran into TestLeakingGL. Apparently, it is supposed to create and destroy 900 spheres every tick (the documentation says 400, but the code generates 900). Ideally, memory usage shouldn’t change. However, when I ran it, memory steadily increased and the FPS continued to drop. It makes me wonder if this means that the engine isn’t properly clearing the objects. This applies to both jme3-lwjgl and jme3-lwjgl3.

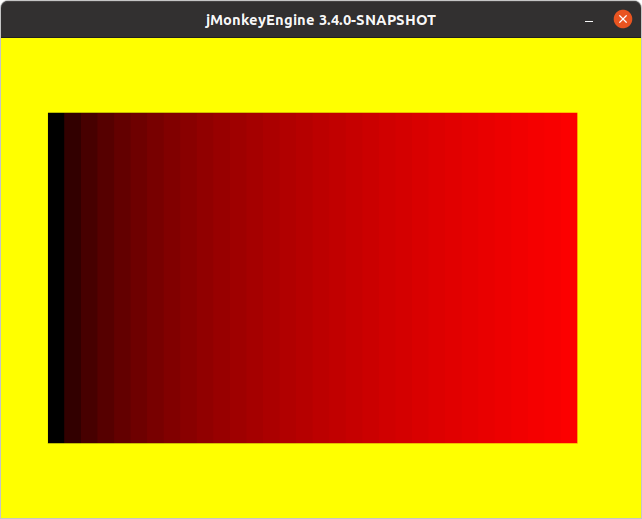

Besides the memory issue, though, context restarting now seems to be working great on jme3-lwjgl, so there’s that!

EDIT: Change seems to work on jme3-lwjgl3 as well.

EDIT 2:

I’ve pushed the changes to GitHub, if anyone is interested in checking them out. However, I’m a little hesitant to create a PR until we can track down this memory issue. I’m pretty sure that it is unrelated to my changes, but it would be good to look into it.

As a side note, a pull request from this branch would supersede #1524.

EDIT 3: I’ve been looking into the memory issue, and TestLeakingGL has been broken since before JME 3.1. Anything earlier than that is hard to test, considering that JME 3.0 was a rather different project.