Hi Monkeys,

It’s been a while since I’ve had anything I felt worthy of contributing. Now, on my 3rd attempt at Volumetric Lighting, I have finally arrived at something I’m happy with. I learned a bunch while working on this project, some of which I would like to share with those who are interested.

First things first:

The Project

The source is on Google Code. I’ve also created a full download of the source and test scene, zipped up, that comes in at a whopping 27.4 KB.

Video of The Test Scene

[video]http://www.youtube.com/watch?v=1fXvX10SJXs[/video]

3 “Volumetric Spot Lights” buzzing around. There is no real lighting in the scene, nor are there any real shadows; it is simply showing off the volumetric light.

An earlier video, testing in a real scene (Village Showcase courtesy of @destroflyer) using shadows and a real spot light:

[video]http://www.youtube.com/watch?v=JnvidKsiFtU[/video]

My Philosophy on Open Source Software

Here is what I made, it's open source, this is how I made it, there is nothing to hide. Now you take it away, learn from it, add to it, improve it, then bring it back and show me what you did, so I can learn too.

Background

I played Alan Wake recently and was blown away by the graphics and storytelling, but I especially loved the flashlight effect, so I wanted to recreate it using real-time Volumetric Lighting.

My first 2 failed attempts:

A few years ago I had a rough ray marching approach in my head and gave it a crap attempt, which failed, so I trashed that and moved on.

Then, within the last few months, I attempted to use what I’ve been calling the “Mitchell Light Shafts” approach, pioneered by Dobashi and Nishita, and very nicely summed up in this pdf.

This method basically uses screen-aligned billboards that fill the light frustum, which looks like this:

I got this up and running, but my attempt looked crap and was not what I was after. When the light shaft is thin, like a flashlight, at many viewing angles there are simply not enough billboards to fill the volume, which left many gaps:

The blue box is the light source.

I did learn a lot about matrices and moving geometry around relative to a camera and bounding volume, so it wasn’t a complete loss.

So I did loads of research, read roughly 20 mind-bending papers all covering different aspects of creating real-time volumetric lighting, mashed everything I learned together, and came up with a kind of hybrid approach, which is roughly described in the Real Time Volumetric Shadows using Polygonal Light Volumes paper.

So How Does It Work

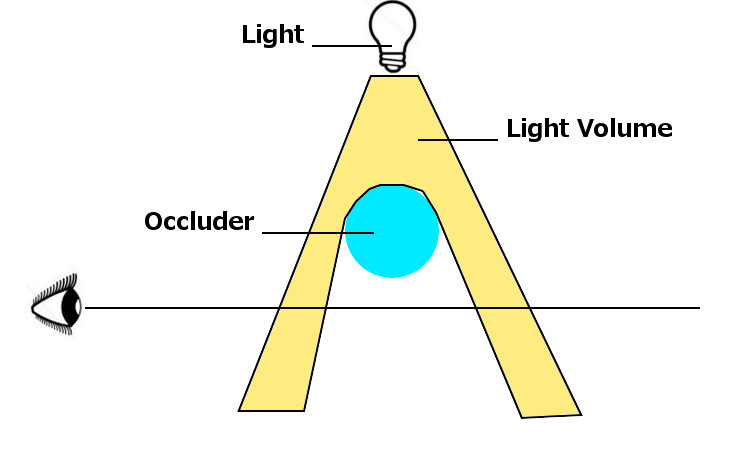

First thing is to make a “Light Volume”, a closed mesh that represents all the air we want to illuminate. I treated the light volume as if it were a camera frustum, with a subdivided plane as the far plane. A depth map is rendered from the light’s perspective (the same way JME shadows work) in VLRenderer.java, which is a cut-down version of BasicShadowRenderer.java that uses just the depth map gathering portion (if the Volumetric Lighting were integrated into the existing lighting systems, this step could be bypassed). The subdivided far plane is then “vacuum formed” over that depth map in the vertex shader, which means each vertex is displaced along the z axis by the depth texture …
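To make the “vacuum forming” step concrete, here is a CPU-side sketch of the same idea. It assumes light view space (light at the origin looking down -Z), a square grid, and depth values that have already been converted to linear distances along the light's view axis; in the filter itself the displacement happens in the vertex shader by sampling the depth texture. LightVolumeGrid, buildFarPlane and vacuumForm are hypothetical names. Each vertex slides along its own ray from the light, which is what a displacement along z in the light's projected space looks like back in view space, because lines through the light become parallel to the z axis after the perspective projection.

```java
/**
 * Hypothetical sketch: build an n x n far-plane grid for a spot light and
 * "vacuum form" it onto a depth map. Light view space: light at the origin, looking down -Z.
 */
final class LightVolumeGrid {

    /** Far-plane vertices as flat xyz triples; vertex (col, row) starts at (row * n + col) * 3. Requires n >= 2. */
    static float[] buildFarPlane(int n, float far, float halfAngleRad) {
        float halfExtent = far * (float) Math.tan(halfAngleRad); // half width of the far plane
        float[] pos = new float[n * n * 3];
        for (int row = 0; row < n; row++) {
            for (int col = 0; col < n; col++) {
                int i = (row * n + col) * 3;
                // map col/row from [0, n - 1] onto [-halfExtent, +halfExtent]
                pos[i]     = -halfExtent + 2f * halfExtent * col / (n - 1);
                pos[i + 1] = -halfExtent + 2f * halfExtent * row / (n - 1);
                pos[i + 2] = -far;
            }
        }
        return pos;
    }

    /**
     * Slide each vertex along its ray from the light so that its distance along the
     * view axis equals the sampled linear depth (one value per vertex, same row * n + col
     * layout). Nothing is pushed beyond the far plane.
     */
    static void vacuumForm(float[] pos, float[] linearDepth, float far) {
        for (int v = 0; v < linearDepth.length; v++) {
            float s = Math.min(linearDepth[v], far) / far; // fraction of the way to the far plane
            pos[v * 3]     *= s;
            pos[v * 3 + 1] *= s;
            pos[v * 3 + 2] *= s;
        }
    }

    public static void main(String[] args) {
        float far = 10f;
        float[] pos = buildFarPlane(3, far, (float) Math.toRadians(30));
        float[] depth = {10, 10, 10, 10, 4, 10, 10, 10, 10}; // an occluder 4 units away in the centre
        vacuumForm(pos, depth, far);
        System.out.println(pos[4 * 3 + 2]); // z of the centre vertex after forming
    }
}
```

Only the vertices whose rays hit something closer than the far plane move; everything else stays on the far plane, which is what gives the “reverse shadow volume” shape.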

This is a really neat way of forming a lighting volume (like a reverse shadow volume) that doesn’t rely on manipulating the scene geometry. While it is not as accurate as other methods, accuracy is not super important given the nature of volumetric light. So we start with a custom mesh that looks like this (super low resolution mesh, 8x8):

and end up with a mesh that looks roughly like this (crappy example I know, get over it) :

arrayList.get(column).get(row), after some initial testing I found the mesh generation to be painfully slow. After some investigation I found that reading from this lookup was very expensive: when I switched to a simple integer calculation (roughly index = columnNumber * NumberOfColumns + rowNumber), it was 231x faster! I found it interesting that reading values from ArrayLists is so slow; perhaps someone out there has some insight?
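On the “why is ArrayList so slow” question: I haven't profiled the original code, so these are likely contributors rather than a diagnosis. Each get() is a method call with a bounds check, every element of a List&lt;Integer&gt; or List&lt;Float&gt; is a boxed object that has to be unboxed on every read, and those boxed objects are separate heap allocations scattered in memory, so reads miss the cache far more often than with one contiguous primitive array. The hypothetical sketch below builds the same grid both ways. Note that with the column as the outer index, the multiplier in the flat index is the number of rows; on a square grid that equals the number of columns, which is why the formula above works.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical comparison of the two grid lookups described above. */
final class GridLookup {

    /** Column-major flat index: the multiplier is the number of rows. */
    static int flatIndex(int col, int row, int rows) {
        return col * rows + row;
    }

    /** Nested lists: two get() calls per read, and every element is a boxed Integer. */
    static List<List<Integer>> buildNested(int cols, int rows) {
        List<List<Integer>> grid = new ArrayList<>(cols);
        for (int c = 0; c < cols; c++) {
            List<Integer> column = new ArrayList<>(rows);
            for (int r = 0; r < rows; r++) {
                column.add(c * 1000 + r); // boxed on insert, unboxed on every read
            }
            grid.add(column);
        }
        return grid;
    }

    /** Flat primitive array: one contiguous allocation, no boxing, one multiply-add per read. */
    static int[] buildFlat(int cols, int rows) {
        int[] grid = new int[cols * rows];
        for (int c = 0; c < cols; c++) {
            for (int r = 0; r < rows; r++) {
                grid[flatIndex(c, r, rows)] = c * 1000 + r;
            }
        }
        return grid;
    }

    public static void main(String[] args) {
        List<List<Integer>> nested = buildNested(8, 8);
        int[] flat = buildFlat(8, 8);
        // both lookups return the same value for the same (column, row)
        System.out.println(nested.get(3).get(5) == flat[flatIndex(3, 5, 8)]);
    }
}
```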

Because spotlights are round, there is no need to deal with any vertices that fall outside the light cone, so I came up with a neat little trick to catch those:

[java]
if (length(posInPLS.xy) < posInPLS.w) {
    // inside the spot light's cone: keep this vertex
}
[/java]

which roughly translates to: when looking from the point of view of the light (Projected Light Space), if the distance of the point from the middle of the view, length(posInPLS.xy), is greater than half the width (or height) of the view, posInPLS.w, then exclude it. (.w is a magical value I don’t completely understand, but it behaves like that in this case.)
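The “magic” .w can be pinned down. With a perspective projection, the clip-space w of a point is its distance in front of the light along the light's view axis (minus the view-space z in the OpenGL convention), and a point is inside the light's frustum when -w ≤ x ≤ w and -w ≤ y ≤ w. So length(xy) &lt; w keeps exactly the points inside the circle inscribed in the frustum, which for a square (aspect 1) frustum is a cone whose half-angle is half the field of view. A small CPU version of the same test, assuming an OpenGL-style projection matrix and column vectors (SpotConeTest and its helpers are hypothetical):

```java
/** Hypothetical CPU version of the shader's cone test (OpenGL-style matrices, column vectors). */
final class SpotConeTest {

    /** Standard OpenGL perspective projection as a row-major 4x4 array. */
    static double[][] perspective(double fovYRad, double aspect, double near, double far) {
        double f = 1.0 / Math.tan(fovYRad / 2.0);
        return new double[][] {
            { f / aspect, 0, 0, 0 },
            { 0, f, 0, 0 },
            { 0, 0, (far + near) / (near - far), 2.0 * far * near / (near - far) },
            { 0, 0, -1, 0 }
        };
    }

    /** Matrix times column vector. */
    static double[] mul(double[][] m, double[] v) {
        double[] out = new double[4];
        for (int r = 0; r < 4; r++) {
            for (int c = 0; c < 4; c++) {
                out[r] += m[r][c] * v[c];
            }
        }
        return out;
    }

    /** Same test as the shader: keep the point if length(clip.xy) < clip.w. */
    static boolean insideCone(double[][] proj, double[] viewPos) {
        double[] clip = mul(proj, viewPos);
        // -w <= x, y <= w is the square frustum; length(xy) < w is the circle inscribed in it.
        // Points behind the light have w < 0 and are rejected too.
        return Math.hypot(clip[0], clip[1]) < clip[3];
    }

    public static void main(String[] args) {
        double[][] proj = perspective(Math.toRadians(90), 1.0, 1.0, 100.0);
        System.out.println(insideCone(proj, new double[] {4, 0, -5, 1})); // about 38.7 degrees off-axis
        System.out.println(insideCone(proj, new double[] {4, 4, -5, 1})); // in the square frustum, outside the cone
    }
}
```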

I did some of the calculations once on the CPU and passed the results to my shaders, to avoid performing them on the GPU every frame, or worse every vertex, or even worse every fragment.

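[java]
import com.jme3.math.Vector2f;
import com.jme3.renderer.Camera;

// ... inside the filter class:

/**
 * Factors for turning a non-linear depth buffer value back into a linear
 * view distance. Computed once on the CPU and passed to the shader.
 */
public Vector2f getLinearDepthFactors(Camera cam) {
    float near = cam.getFrustumNear();
    float far = cam.getFrustumFar();
    float a = far / (far - near);
    float b = far * near / (near - far);
    return new Vector2f(a, b);
}
[/java]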
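The factors make more sense next to the equation they come from. Assuming the depth map holds standard perspective depth in [0, 1] (OpenGL's default depth range, or Direct3D), a point at linear distance z in front of the camera ends up with depth d = a + b / z, so a shader can invert it as z = b / (d - a). That shader expression is my assumption about how the factors are used; the round trip below (hypothetical LinearDepth class, doubles instead of jME's floats) just checks the algebra.

```java
/** Hypothetical check of the depth linearisation factors (doubles instead of jME's floats). */
final class LinearDepth {

    /** Same formulas as getLinearDepthFactors: {a, b}. */
    static double[] factors(double near, double far) {
        return new double[] { far / (far - near), far * near / (near - far) };
    }

    /** Depth buffer value in [0, 1] for a point at linear view distance z: d = a + b / z. */
    static double depthFromDistance(double z, double a, double b) {
        return a + b / z;
    }

    /** Inverse mapping a shader could use: z = b / (d - a). */
    static double distanceFromDepth(double d, double a, double b) {
        return b / (d - a);
    }

    public static void main(String[] args) {
        double[] ab = factors(1.0, 100.0);
        double d = depthFromDistance(37.5, ab[0], ab[1]);
        System.out.println(d + " -> " + distanceFromDepth(d, ab[0], ab[1]));
    }
}
```

The near plane maps to depth 0 and the far plane to depth 1, and most of the depth range is spent close to the camera, which is why the linearisation is needed before the values are used as distances.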

Now that there is a closed mesh representing the light volume, we need to calculate how much light this volume contributes to the scene. This is figured out by solving the scattered air light integral, in other words, how much light bounces off the little bits of dust and crap inside the volume, which we see as light shafts. This calculation has some brutal maths; the best approach I have found is A Practical Analytic Single Scattering Model for Real Time Rendering, and it makes my brain hurt! I found a super useful blog post from Miles Macklin, which really helped me get my head around the complex mathematics involved in solving the integral, and presented a much simpler model.

But first, taking a step back, we need to figure out the thickness of the light volume at any given point. In my solution this has to be done on the GPU: the volume mesh is deformed by the vertex shader, so the CPU does not know about the changes made to the volume. Doing it on the GPU is also far quicker than transmitting this information every frame, and the GPU is optimised for exactly these kinds of calculations.
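For a feel of what a much simpler model can look like, here is one common simplification. It is my assumption, not necessarily the exact formula from either source: a point light with inverse-square falloff, a constant-density medium, and no extinction or phase function. Along an eye ray o + t*r (r unit length) with the light at s, the in-scattered light up to distance d is then proportional to the integral from 0 to d of dt / |o + t*r - s|^2. With b = r·(o - s), c = |o - s|^2 and h = sqrt(c - b^2), that integral has the closed form (atan((d + b) / h) - atan(b / h)) / h. The hypothetical InScatter class below compares it against brute-force numerical integration. Because the result is a running integral measured from the eye, it can be evaluated at each face and combined with the back-face/front-face subtraction described in the next section.

```java
/** Hypothetical sketch of a simplified single-scattering integral for a point light. */
final class InScatter {

    static double dot(double[] u, double[] v) {
        return u[0] * v[0] + u[1] * v[1] + u[2] * v[2];
    }

    /**
     * Closed form of the integral from 0 to d of 1 / |o + t*r - s|^2 dt.
     * Assumes r is unit length and the light s does not lie on the ray's line (h > 0).
     */
    static double closedForm(double[] o, double[] r, double[] s, double d) {
        double[] q = { o[0] - s[0], o[1] - s[1], o[2] - s[2] }; // from the light to the ray origin
        double b = dot(r, q);                    // closest approach to the light is at t = -b
        double h = Math.sqrt(dot(q, q) - b * b); // closest distance from the light to the ray's line
        return (Math.atan((d + b) / h) - Math.atan(b / h)) / h;
    }

    /** Midpoint-rule approximation of the same integral, for checking. */
    static double numeric(double[] o, double[] r, double[] s, double d, int steps) {
        double dt = d / steps;
        double sum = 0.0;
        for (int i = 0; i < steps; i++) {
            double t = (i + 0.5) * dt;
            double[] p = { o[0] + t * r[0] - s[0], o[1] + t * r[1] - s[1], o[2] + t * r[2] - s[2] };
            sum += 1.0 / dot(p, p);
        }
        return sum * dt;
    }

    public static void main(String[] args) {
        double[] eye = {0, 0, 0};
        double[] dir = {1, 0, 0};
        double[] light = {5, 2, 0};
        System.out.println(closedForm(eye, dir, light, 10.0) + " vs " + numeric(eye, dir, light, 10.0, 100000));
    }
}
```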

Calculating Geometry "Thickness" on the GPU

This is done with a simple bit of vector maths, which I can best describe using the following scenario (please excuse the crappy diagrams):

This is a side view of a simple scene; we are interested in calculating the depth of the volume along the ray coming from the eye.

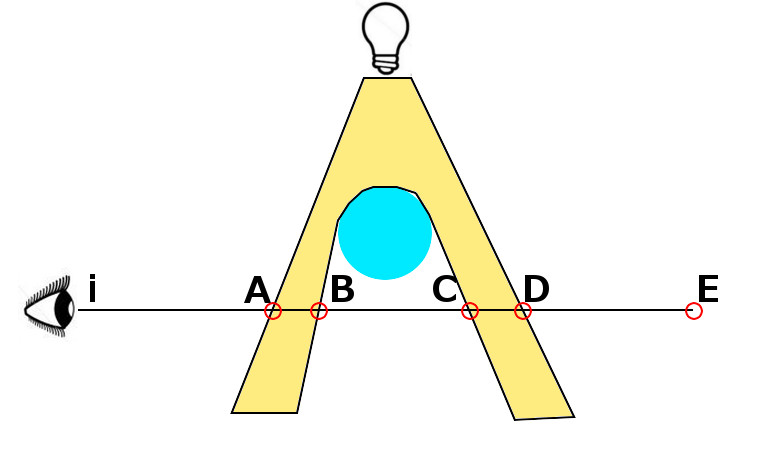

We label the points i, A, B, C, D and E: the ray starts at the eye (i), enters and leaves the volume at A, B, C and D, and finishes at E.

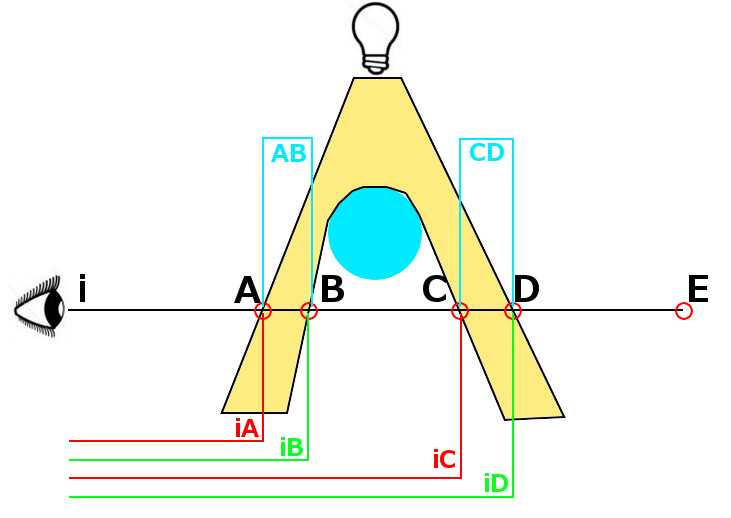

The vectors from i to each point are labelled iA, iB, iC and so on; iE can be ignored, as it has no effect on the calculations. We are interested in calculating the length AB + CD.

We can figure out the thickness by subtracting the lengths of iA and iC from the lengths of iB and iD: (|iB| - |iA|) + (|iD| - |iC|) = AB + CD. Interestingly, iB and iD both end on back faces of the mesh, and iA and iC both end on front faces. So we can simply solve the air light scattering integral at all the back faces, and subtract the solved values at the front faces!
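The back-face/front-face bookkeeping can be checked with a few lines of Java. This is a hypothetical CPU-side sketch with made-up distances, not the filter's shader code: each face the eye ray crosses contributes its distance from the eye, positively for back faces and negatively for front faces. Each face only needs its own distance, so the sum works for any number of entries and exits, and the same bookkeeping applies to any running integral F(t) measured from the eye, which is how the scattering integral takes the place of plain distance.

```java
import java.util.function.DoubleUnaryOperator;

/** Hypothetical CPU check of the back-face minus front-face trick for one eye ray. */
final class VolumeThickness {

    /** Total length inside the volume: back-face distances minus front-face distances. */
    static double thickness(double[] frontDistances, double[] backDistances) {
        return integrate(t -> t, frontDistances, backDistances);
    }

    /** Same bookkeeping for any running integral F(t) measured from the eye (F(0) = 0). */
    static double integrate(DoubleUnaryOperator runningIntegral, double[] front, double[] back) {
        double total = 0.0;
        for (double t : back) total += runningIntegral.applyAsDouble(t);
        for (double t : front) total -= runningIntegral.applyAsDouble(t);
        return total;
    }

    public static void main(String[] args) {
        // Made-up distances for the diagram: A and C are front faces, B and D back faces.
        // E, where the ray finishes, never appears.
        double[] front = {2.0, 7.0}; // |iA|, |iC|
        double[] back = {5.0, 8.0};  // |iB|, |iD|
        System.out.println(thickness(front, back)); // AB + CD = 3 + 1
    }
}
```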

To do this with the volume mesh, we need to set up the light volume’s material with FaceCullMode set to Off and depth testing turned off:

With that setup, all faces of the volume mesh will be rendered. It is now possible to calculate the value at each face for each “light shaft”, and accordingly add or subtract it from a texture using BlendMode.Additive.

The volume light is rendered in a post-processing Filter.Pass after the scene has been rendered:

[java]lightVolumePass.init(renderManager.getRenderer(), w, h, Format.RGBA32F, Format.Depth, 1, true);[/java]

This pass needs to use a non-standard texture format, Format.RGBA32F, which can store values larger than 1 as well as negative values.

gl_FrontFacing
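gl_FrontFacing is the GLSL built-in that tells a fragment shader whether the face being shaded is front-facing, which is what lets each face pick its sign. The sketch below simulates one pixel on the CPU, assuming the shader outputs the negated value on front faces and the positive value on back faces, with additive blending summing everything. It also shows why the RGBA32F target matters: with a normalised 8-bit target, each blended result is clamped to [0, 1], so a front face drawn before its back face, or any value above 1, destroys the result. SignedAccumulation is a hypothetical name.

```java
/** Hypothetical one-pixel simulation of signed additive blending of light volume faces. */
final class SignedAccumulation {

    /**
     * Blend every volume face covering one pixel, in draw order.
     * frontFacing[i] plays the role of gl_FrontFacing; value[i] is the running
     * integral at that face. clampToUnorm mimics an 8-bit normalised target,
     * which clamps the blended result to [0, 1] after every blend.
     */
    static double accumulate(boolean[] frontFacing, double[] value, boolean clampToUnorm) {
        double dst = 0.0; // render target cleared to zero
        for (int i = 0; i < value.length; i++) {
            double src = frontFacing[i] ? -value[i] : value[i]; // subtract front, add back
            dst += src;                                         // BlendMode.Additive
            if (clampToUnorm) {
                dst = Math.min(1.0, Math.max(0.0, dst));
            }
        }
        return dst;
    }

    public static void main(String[] args) {
        boolean[] frontFacing = {true, false}; // front face happens to be drawn first
        double[] value = {0.2, 0.5};
        System.out.println("float target: " + accumulate(frontFacing, value, false));
        System.out.println("8-bit target: " + accumulate(frontFacing, value, true));
    }
}
```

Since addition is order-independent, the float target gives the same answer whatever order the faces are rasterised in, which is why no sorting of the volume's faces is needed.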

Rendering Selected Geometry in an Additional Filter Pass

I have figured out 2 methods to do this. The first uses materials with specific render techniques, which I explained in my post about the screen space displacement filter.

I figured out a simpler method after looking at some of @pspeed's work: keep a reference to the geometry in the filter, and render only that:

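[java]
// Render into this pass's own frame buffer instead of the screen
renderManager.getRenderer().setFrameBuffer(lightVolumePass.getRenderFrameBuffer());
// Clear color, depth and stencil before drawing the volume
renderManager.getRenderer().clearBuffers(true, true, true);
// Draw only the light volume, bypassing the normal scene traversal
renderManager.renderGeometry(lightVolume);
[/java]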

Since I needed my geometry to be positioned using LocalTranslations, I had to add the spatial to the rootNode, and to make sure it is ignored during the initial scene render, I set its CullHint to Always.

I was fooled again when I added 3 separate VolumetricLightFilters: only the first filter appeared to be rendered, but the other two were actually being rendered, hidden in the bottom left corner of the screen.

So before rendering a pass, make sure the camera is set correctly, e.g. renderManager.setCamera(viewPort.getCamera(), false);

Current Limitations

Changing the spot light radius, near distance or far distance requires the volume mesh to be regenerated on the CPU, so this is not supported. This could be performed on the GPU to allow real-time updates, but it would also require many additional calculations per vertex, per frame, which seemed like a waste to me, so this functionality was simply not included. If there is a decent use case for this, there is no reason why it could not be added.

The “fog” that causes the light to scatter, so the light shafts can be seen, is treated as a homogeneous (and isotropically scattering) medium, which is a fancy way of saying it's consistent throughout, like water with some milk completely mixed in. The current integral calculation simply does not allow for a non-homogeneous medium, like smoke coming from a fire, or a cloudy sky. The maths to calculate this is waaaaay beyond me at this stage. It is possible to simulate this effect using particles, preferably soft particles; I may look into this a bit later.

Colored Gobos or Cookies are not possible using the current integration; more on this further down.

Future plans and thoughts

- There is room to add a simple cookie or Gobo which would be used to control the shape of emitted light. At the moment it is assumed the shape is a circle since it is being emitted from a spot light. I will add this shortly as it's rather trivial.

- This effect is pretty dull without an actual spot light to illuminate surfaces, and a spot light often looks silly without a shadow renderer. Since the shadow filter and the volumetric light filter also share the same SceneProcessor, it only makes sense to make the VolumetricLightFilter an extension of the SpotShadowRenderer.

- 6 of these filters could be linked together to create an omni (point light) volumetric light, extending the PointLightShadowFilter.

- I would like to create a cut-down version that renders a simple cone volume without shafts. This would bypass the shadowRenderer scene processor and would be a lot faster, since there is no additional depth render, and there would never be more than 2 faces to render per fragment.

- I was wondering if it was possible to use the LightViewProjectionMatrix to figure out the light position within the shader. I tried multiplying vec4(0.0) and vec4(1.0) by it, but that didn't work. Surely this is possible, and it would avoid the need to pass in light and camera positions... anyone?

- A bit of blur would help mask any aliasing artefacts from low volume resolutions.

- The often horribly abused post effect Bloom is an ideal partner for this filter, as it could be used to simulate the scattering of diffuse light from brightly lit surfaces.
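On the light position question: multiplying a vector by the light's view-projection matrix maps it from world space into the light's clip space, which is the opposite direction to what is needed. One approach that should work, assuming a standard perspective matrix and the column-vector convention: the light (eye) position is the only point whose clip-space x, y and w are all zero, so it can be recovered by solving three linear equations taken from rows 0, 1 and 3 of the matrix. The sketch below does that in plain Java with Cramer's rule; LightPosition and its helpers are hypothetical names. Equivalently, it is inverse(m) * vec4(0.0, 0.0, 1.0, 0.0) divided by its w component, which could be computed once on the CPU and passed in as a uniform, in keeping with the rest of the filter.

```java
/** Hypothetical sketch: recover a light's position from its view-projection matrix. */
final class LightPosition {

    /** Eye position from m = projection * view (row-major, clip = m * (x, y, z, 1)). */
    static double[] fromViewProjection(double[][] m) {
        int[] rows = {0, 1, 3}; // clip x, y and w are all zero at the eye
        double[][] a = new double[3][3];
        double[] rhs = new double[3];
        for (int i = 0; i < 3; i++) {
            for (int j = 0; j < 3; j++) {
                a[i][j] = m[rows[i]][j];
            }
            rhs[i] = -m[rows[i]][3];
        }
        double det = det3(a);
        double[] p = new double[3];
        for (int k = 0; k < 3; k++) { // Cramer's rule: replace column k with rhs
            double[][] ak = new double[3][];
            for (int i = 0; i < 3; i++) {
                ak[i] = a[i].clone();
                ak[i][k] = rhs[i];
            }
            p[k] = det3(ak) / det;
        }
        return p;
    }

    static double det3(double[][] a) {
        return a[0][0] * (a[1][1] * a[2][2] - a[1][2] * a[2][1])
             - a[0][1] * (a[1][0] * a[2][2] - a[1][2] * a[2][0])
             + a[0][2] * (a[1][0] * a[2][1] - a[1][1] * a[2][0]);
    }

    /** Standard OpenGL perspective projection as a row-major 4x4 array. */
    static double[][] perspective(double fovYRad, double aspect, double near, double far) {
        double f = 1.0 / Math.tan(fovYRad / 2.0);
        return new double[][] {
            { f / aspect, 0, 0, 0 },
            { 0, f, 0, 0 },
            { 0, 0, (far + near) / (near - far), 2.0 * far * near / (near - far) },
            { 0, 0, -1, 0 }
        };
    }

    /** 4x4 matrix product x * y. */
    static double[][] multiply(double[][] x, double[][] y) {
        double[][] out = new double[4][4];
        for (int r = 0; r < 4; r++) {
            for (int c = 0; c < 4; c++) {
                for (int k = 0; k < 4; k++) {
                    out[r][c] += x[r][k] * y[k][c];
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // View matrix for a light at (1, 2, 3) looking down -Z (no rotation)
        double[][] view = { {1, 0, 0, -1}, {0, 1, 0, -2}, {0, 0, 1, -3}, {0, 0, 0, 1} };
        double[][] vp = multiply(perspective(Math.toRadians(60), 1.0, 0.5, 50.0), view);
        double[] p = fromViewProjection(vp);
        System.out.println(p[0] + ", " + p[1] + ", " + p[2]);
    }
}
```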

So here is my Volumetric Lighting Filter, that is how I made it, links to the source are listed above, learn from it, add to it, improve it, then hit up this thread with your ideas and improvements so we can add a slick new filter to the project =)

Cheers

James
