Hello!

I have a rather complex problem (I think) that I hope you can help me with! ![]()

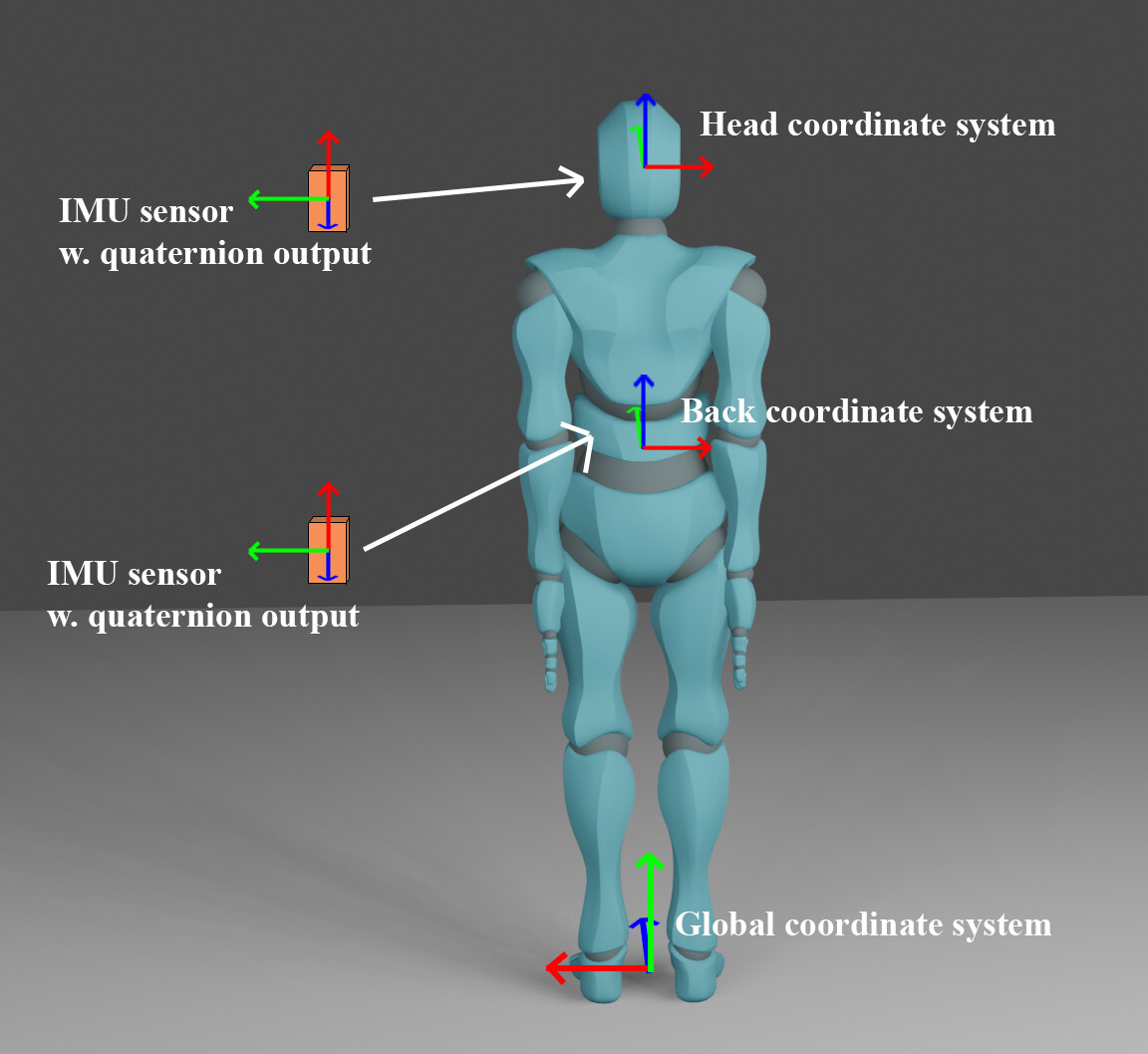

In short, I am creating a motion capture app using wearable IMU sensors w. quaternion output, applied to bodyparts, to rotate a model using JME SkinningControl.

So what I have done so far is to import a .j3o model (ybot from Mixamo in N-pose) and build an app where two quaternions are applied to back and head joints of the model to rotate the body. That all works (thanks to previously help from you guys ![]() )

)

Now what I would like to achieve is to apply the correct rotation to the model. E.g. when I tilt the head sensor forward, then the head should do the same movement, and when rotate, then the head should rotate and so on… This is where I could use some help.

What I have done to try to achieve this is:

- Create offset quaternion at first frame

- Multiply the offset quaternion to each following quaternion

- Apply the new quaternion to the joint with setLocalRotation

Code example:

public class test {

private Quaternion back_offset = new Quaternion;

private Quaternion head_offset = new Quaternion;

private Joint back;

private Joint head;

// JME3 model is initiated

public void setupModel() {

Node human = model.getRootNode();

SkinningControl sc = findAnimRoot(human).getControl(SkinningControl.class);

back = sc.getArmature().getJoint("mixamorig:Spine1");

head = sc.getArmature().getJoint("mixamorig:Head");

}

public void onDataUpdate(float[] back_sensor, float[] head_sensor) {

// Quaternion comes in continuously

Quaternion back_quat = new Quaternion(back_sensor); // Quaternion (XYZW) output from back sensor

Quaternion head_quat = new Quaternion(head_sensor); // Quaternion (XYZW) output from head sensor

// Perform offset calibration at first frame, for each bodypart

if (back_offset == null && head_offset == null) {

back_offset = new Quaternion(0, 0, 0, 1).mult(quaternion.inverse());

head_offset = new Quaternion(0, 0, 0, 1).mult(quaternion.inverse());

} else {

// After first frame, then offset quaternions are multiplied to the new incoming quaternions

Quaternion back_quat_calib = back_offset.mult(back_quat);

Quaternion head_quat_calib = head_offset.mult(head_quat);

// Apply transformed quaternions to joints on JME3 model

back.setLocalRotation(new Quaternion(quat_calib.getX(), quat_calib.getY(), quat_calib.getZ(), quat_calib.getW()));

head.setLocalRotation(new Quaternion(quat_calib.getX(), quat_calib.getY(), quat_calib.getZ(), quat_calib.getW()));

}

}

This results in a nice N-pose, but when I rotate the head, then the head will tilt. So the coordinate systems dont match. How should I approach to allign the coordinate systems? You can see an illustration of the setup below?

Hope you can help!