This is my first time tinkering with shaders. I don't want to use tex.setWrap(WrapMode.Repeat) on the texture. Instead, I thought I could simply remap texCoord1 in the fragment shader. It is easiest to explain with a numerical example:

a) The texture is an atlas of 4x4 tiles.

b) A tile is therefore 0.25 wide in texture coordinates.

c) texCoord1.x runs over [0.25, 0.75[, i.e. two tile widths.

d) The tile should be repeated twice (repetition = 2).

e) The remapped texCoord1a.x should then run over [0.25, 0.50[ and afterwards over [0.25, 0.50[ again: starting from 0.50, simply subtract one tile width of 0.25.

f) the repetition is calculated like this:

int repatX = int (texCoord1.z);

vec2 texCoord1a = vec2 (texCoord1.x - repatX * 0.25, texCoord1.y - repatY * 0.25);

where texCoord1.z runs over [0, 2[. (I declare texCoord1 as a vec4.)

g) then color *= texture2D(m_ColorMap, texCoord1a);

The same is done in y, using texCoord1.w.
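To make the arithmetic in steps a) to g) concrete, here is a small CPU-side Java sketch of the same remapping. The class name `AtlasRepeatSketch` and the helper `remap` are made up for illustration and are not part of my shader or of jME. It only assumes non-negative values, where a Java `(int)` cast drops the fractional part just like `int(...)` in GLSL.

```java
class AtlasRepeatSketch {
    // Width of one tile in a 4x4 atlas, as in step b).
    static final float TILE = 0.25f;

    // Mirrors the shader expression: coord - int(repeat) * 0.25
    // (hypothetical helper, for illustration only)
    static float remap(float coord, float repeat) {
        int whole = (int) repeat; // number of whole tiles already passed
        return coord - whole * TILE;
    }

    public static void main(String[] args) {
        // Walk across the right-hand square: x covers [0.25, 0.75[
        // while z covers [0, 2[, as in steps c) and f).
        for (int i = 0; i < 8; i++) {
            float t = i / 8.0f;
            float x = 0.25f + t * 0.5f;
            float z = t * 2.0f;
            System.out.println("x=" + x + " z=" + z + " -> " + remap(x, z));
        }
    }
}
```

Every printed result should stay inside the first tile, [0.25, 0.50[, which is the behaviour described in step e).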

This actually works well, but I get unsightly artifacts along the line between the tiles. I suspect it has something to do with bilinear filtering or something similar.
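I am not sure this is the cause, but one thing stands out when I run the numbers: the remapped coordinate is not continuous across the tile boundary. The sketch below compares the change in remapped x between two neighbouring sample positions, once inside a tile and once across the seam. `SeamJumpSketch`, `remappedX` and `delta` are hypothetical names, and the parameter `t` (position across the right-hand square, from 0 to 1) is my own assumption for illustration.

```java
class SeamJumpSketch {
    static final float TILE = 0.25f;

    // Remapped x at position t in [0, 1[ across the right-hand square:
    // x runs over [0.25, 0.75[ and z over [0, 2[, as in my test mesh.
    static float remappedX(float t) {
        float x = 0.25f + t * 0.5f;
        float z = t * 2.0f;
        return x - (int) z * TILE; // same formula as in the fragment shader
    }

    // Change in remapped x between two neighbouring sample positions.
    static float delta(float tA, float tB) {
        return remappedX(tB) - remappedX(tA);
    }

    public static void main(String[] args) {
        // Two neighbours inside the first tile: small positive step.
        System.out.println("inside tile: " + delta(0.25f, 0.3125f));
        // Two neighbours straddling the seam at t = 0.5: large backwards jump.
        System.out.println("across seam: " + delta(0.4375f, 0.5f));
    }
}
```

Inside the tile the step is small and positive, while across the seam the coordinate jumps back by almost a full tile width. A jump like that between neighbouring fragments might be what the texture filtering reacts to, but that is only my guess.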

How can I solve the problem?

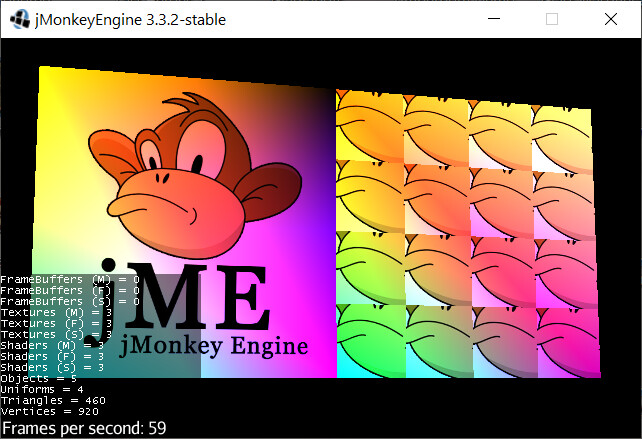

Here is the picture. It is a bit colorful because I built this on top of my earlier gradient test.

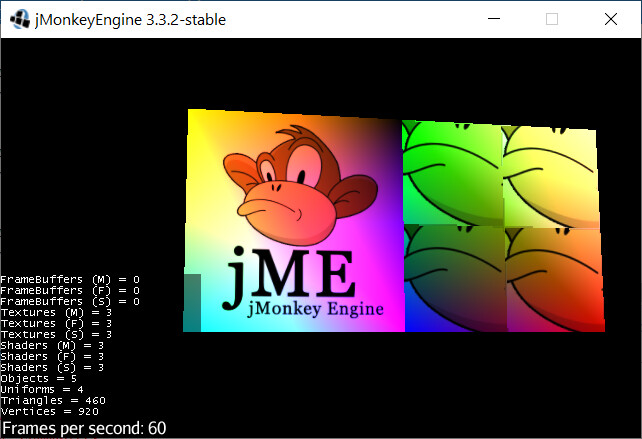

It is not caused by the vertex colors; I retested with

colours[4] = new Vector4f(1, 1, 1, 1);

colours[5] = new Vector4f(1, 1, 1, 1);

colours[6] = new Vector4f(1, 1, 1, 1);

colours[7] = new Vector4f(1, 1, 1, 1);

the weird line (medium - dark - light - medium transition):

from the square on the right:

Java code:

package test.jme;

import com.jme3.app.SimpleApplication;

import com.jme3.material.Material;

import com.jme3.math.Vector3f;

import com.jme3.math.Vector4f;

import com.jme3.scene.Geometry;

import com.jme3.scene.Mesh;

import com.jme3.scene.VertexBuffer;

import com.jme3.texture.Texture;

import com.jme3.util.BufferUtils;

public class TestGradient extends SimpleApplication {

private Material mat;

public static void main(final String[] args) {

final TestGradient app = new TestGradient();

app.start();

}

@Override

public void simpleInitApp() {

// the points in 3d space where the geometry will be

final Vector3f[] vertices = new Vector3f[8];

// one square

vertices[0] = new Vector3f(0, 0, 0);

vertices[1] = new Vector3f(3, 0, 0);

vertices[2] = new Vector3f(0, 3, 0);

vertices[3] = new Vector3f(3, 3, 0);

// a second square

vertices[4] = new Vector3f(3, 0, 0);

vertices[5] = new Vector3f(6, 0, 0);

vertices[6] = new Vector3f(3, 3, 0);

vertices[7] = new Vector3f(6, 3, 0);

// combine those vertices into triangles

final int[] indexes = {

// first square

2, 0, 1, 1, 3, 2,

// second square

6, 4, 5, 5, 7, 6 };

// texture coordinates: x/y select the part of the image,

// z/w carry the repetition count that the fragment shader reads

final Vector4f[] texCoord = new Vector4f[8];

texCoord[0] = new Vector4f(0, 0, 0, 0);

texCoord[1] = new Vector4f(1, 0, 0, 0);

texCoord[2] = new Vector4f(0, 1, 0, 0);

texCoord[3] = new Vector4f(1, 1, 0, 0);

texCoord[4] = new Vector4f(0.25f, 0.40f, 0, 0);

texCoord[5] = new Vector4f(0.75f, 0.40f, 2, 0);

texCoord[6] = new Vector4f(0.25f, 0.90f, 0, 2);

texCoord[7] = new Vector4f(0.75f, 0.90f, 2, 2);

final Vector4f[] colours = new Vector4f[8];

// these are Vector4f because we have a red, green, blue and transparency per

// vertex

colours[0] = new Vector4f(0, 2, 1, 1);

colours[1] = new Vector4f(1, 0, 2, 1);

colours[2] = new Vector4f(2, 1, 0, 1);

colours[3] = new Vector4f(0, 0, 0, 1);

colours[4] = new Vector4f(0, 0, 1, 1);

colours[5] = new Vector4f(1, 0, 0, 1);

colours[6] = new Vector4f(0, 1.5f, 0, 1);

colours[7] = new Vector4f(2, 2, 2, 1);

// now we have all the data we create the mesh

// for more details

// https://jmonkeyengine.github.io/wiki/jme3/advanced/custom_meshes.html

final Mesh mesh = new Mesh();

mesh.setBuffer(VertexBuffer.Type.Position, 3, BufferUtils.createFloatBuffer(vertices));

mesh.setBuffer(VertexBuffer.Type.Index, 3, BufferUtils.createIntBuffer(indexes));

mesh.setBuffer(VertexBuffer.Type.TexCoord, 4, BufferUtils.createFloatBuffer(texCoord));

mesh.setBuffer(VertexBuffer.Type.Color, 4, BufferUtils.createFloatBuffer(colours));

mesh.updateBound();

final Geometry geom = new Geometry("mesh", mesh);

//mat = new Material(assetManager, "Common/MatDefs/Misc/Unshaded.j3md");

mat = new Material(assetManager, "assets/matdefs/JaReUnshaded.j3md");

mat.setBoolean("VertexColor", true);

final Texture monkeyTex = assetManager.loadTexture("Interface/Logo/Monkey.jpg");

mat.setTexture("ColorMap", monkeyTex);

geom.setMaterial(mat);

rootNode.attachChild(geom);

}

@Override

public void simpleUpdate(final float tpf) {

}

}

Fragment shader code:

#import "Common/ShaderLib/GLSLCompat.glsllib"

#if defined(HAS_GLOWMAP) || defined(HAS_COLORMAP) || (defined(HAS_LIGHTMAP) && !defined(SEPARATE_TEXCOORD))

#define NEED_TEXCOORD1

#endif

#if defined(DISCARD_ALPHA)

uniform float m_AlphaDiscardThreshold;

#endif

uniform vec4 m_Color;

uniform sampler2D m_ColorMap;

uniform sampler2D m_LightMap;

varying vec4 texCoord1; //!JaRe!

varying vec2 texCoord2;

varying vec4 vertColor;

void main(){

vec4 color = vec4(1.0);

//!JaRe! begin

int repatX = int (texCoord1.z);

int repatY = int (texCoord1.w);

vec2 texCoord1a = vec2(texCoord1.x - repatX*0.25, texCoord1.y - repatY*0.25);

//!JaRe! end

#ifdef HAS_COLORMAP

color *= texture2D(m_ColorMap, texCoord1a); //!JaRe!

#endif

#ifdef HAS_VERTEXCOLOR

color *= vertColor;

#endif

#ifdef HAS_COLOR

color *= m_Color;

#endif

#ifdef HAS_LIGHTMAP

#ifdef SEPARATE_TEXCOORD

color.rgb *= texture2D(m_LightMap, texCoord2).rgb;

#else

color.rgb *= texture2D(m_LightMap, texCoord1a).rgb; //!JaRe!

#endif

#endif

#if defined(DISCARD_ALPHA)

if(color.a < m_AlphaDiscardThreshold){

discard;

}

#endif

gl_FragColor = color;

}

Vertex shader code:

#import "Common/ShaderLib/GLSLCompat.glsllib"

#import "Common/ShaderLib/Skinning.glsllib"

#import "Common/ShaderLib/Instancing.glsllib"

#import "Common/ShaderLib/MorphAnim.glsllib"

attribute vec3 inPosition;

#if defined(HAS_COLORMAP) || (defined(HAS_LIGHTMAP) && !defined(SEPARATE_TEXCOORD))

#define NEED_TEXCOORD1

#endif

attribute vec4 inTexCoord; //!JaRe!

attribute vec2 inTexCoord2;

attribute vec4 inColor;

varying vec4 texCoord1; //!JaRe!

varying vec2 texCoord2;

varying vec4 vertColor;

#ifdef HAS_POINTSIZE

uniform float m_PointSize;

#endif

void main(){

#ifdef NEED_TEXCOORD1

texCoord1 = inTexCoord;

#endif

#ifdef SEPARATE_TEXCOORD

texCoord2 = inTexCoord2;

#endif

#ifdef HAS_VERTEXCOLOR

vertColor = inColor;

#endif

#ifdef HAS_POINTSIZE

gl_PointSize = m_PointSize;

#endif

vec4 modelSpacePos = vec4(inPosition, 1.0);

#ifdef NUM_MORPH_TARGETS

Morph_Compute(modelSpacePos);

#endif

#ifdef NUM_BONES

Skinning_Compute(modelSpacePos);

#endif

gl_Position = TransformWorldViewProjection(modelSpacePos);

}