It’s not done yet. I have thought about it for several days and I don’t see why it would not work, though I know it’s not the fastest way to do it.

Here is what I want to do:

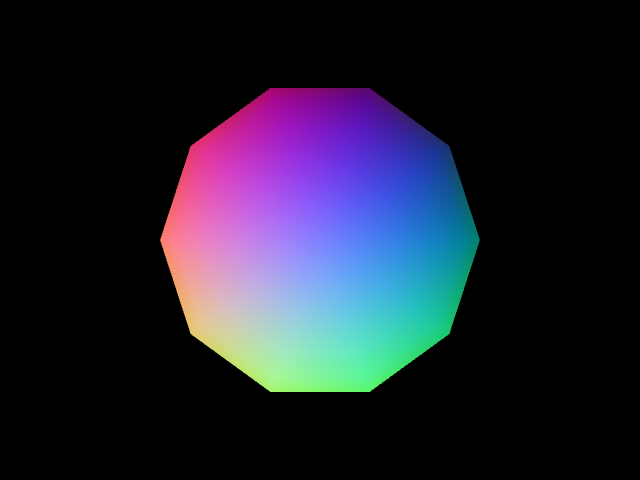

- render scene A.

- throw away the depth buffer (or set it to infinity for every pixel).

- render the portal, but only its depth. At this point you still have the original scene in the color buffer, but the depth buffer is now the depth buffer of your “portal” (which can be anything, any shape).

- render scene B (where the player is). Pixels of scene B that are behind the depths already in the buffer are discarded (as normal). So you end up with scene B and, in it, a “window” onto scene A. If you move the camera in both scenes at the same time, the illusion should be perfect.

For this to work, there must be no “hole” in scene B, i.e. if there is a pixel in scene B that is not rendered, you’ll see a pixel from scene A there. This can be solved by adding a skybox to scene B.
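To make the steps above concrete, here is a minimal software sketch of them on a single 8-pixel “scanline”. It is not engine code: the class `PortalDepthSketch`, the `draw` helper and all distances are made up for illustration, and the depth test is assumed to be a plain “less than”.

```java
// Hypothetical 1D software "framebuffer": one char of color and one depth per pixel.
class PortalDepthSketch {
    static final int WIDTH = 8;

    final char[] color = new char[WIDTH];
    final float[] depth = new float[WIDTH];

    PortalDepthSketch() {
        java.util.Arrays.fill(color, '.'); // viewport background color
        clearDepth();
    }

    // "Throw away the depth buffer": every pixel goes back to infinity.
    void clearDepth() {
        java.util.Arrays.fill(depth, Float.POSITIVE_INFINITY);
    }

    // Draws a span [from, to) at distance z with a "less than" depth test.
    // When writeColor is false only the depth is written; the color is left alone.
    void draw(int from, int to, float z, char c, boolean writeColor) {
        for (int x = from; x < to; x++) {
            if (z < depth[x]) {
                depth[x] = z;
                if (writeColor) {
                    color[x] = c;
                }
            }
        }
    }

    static String renderFrame() {
        PortalDepthSketch fb = new PortalDepthSketch();
        fb.draw(0, WIDTH, 5f, 'A', true);  // 1. render scene A over the whole screen
        fb.clearDepth();                   // 2. reset depth, keep the colors of A
        fb.draw(2, 6, 3f, '?', false);     // 3. portal at distance 3, depth only
        fb.draw(0, WIDTH, 4f, 'B', true);  // 4. scene B: a wall behind the portal plane...
        fb.draw(4, 5, 2f, 'b', true);      //    ...and a pillar in front of the portal
        return new String(fb.color);
    }

    public static void main(String[] args) {
        System.out.println(renderFrame()); // prints BBAAbABB
    }
}
```

Scene B fills every pixel outside the portal, the portal columns keep the colors of scene A, and the pillar in front of the portal still covers it, which is the behaviour I am after.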

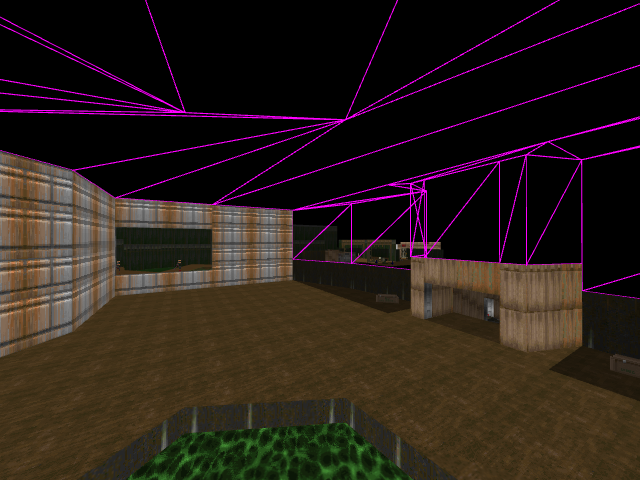

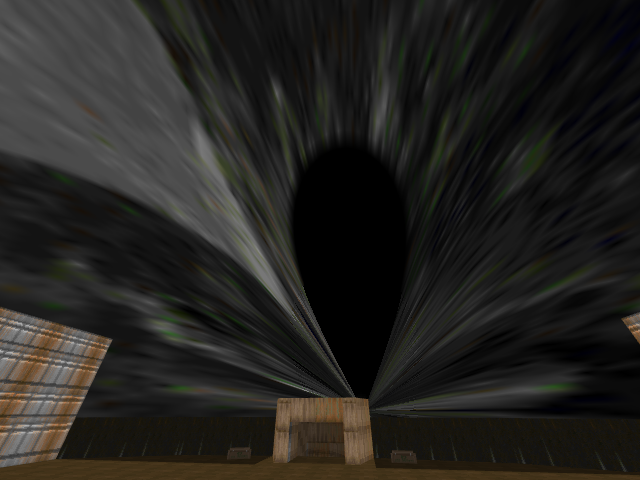

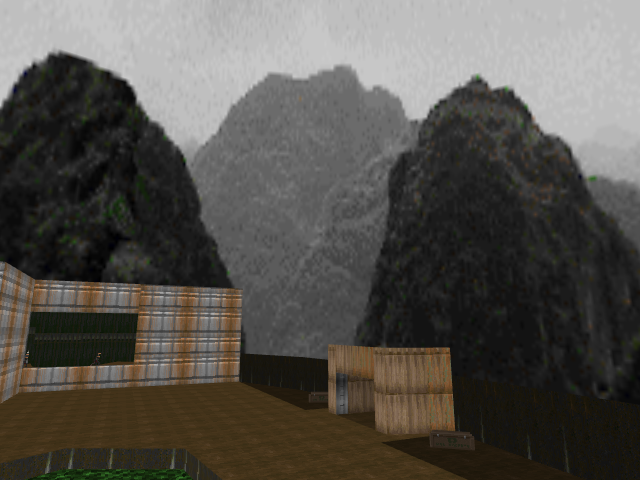

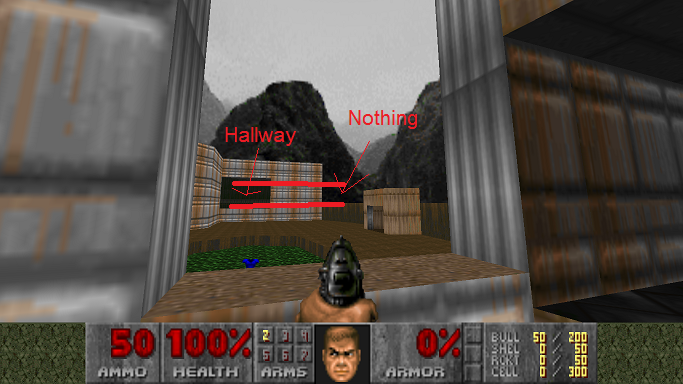

What do I want to do with that? Well, I need it in my Doom engine. It’s a bit hard to explain, but the sky in Doom is not a skybox: you can have a hallway behind the sky. A picture will make this clearer:

(This picture is taken from GZDoom).

If you only render the sky as a classical skybox (i.e. a cube of 1m or so around the player, that follows it and is rendered in the sky bucket), you’ll see the hallway “floating” in the sky.

The sky in Doom is more like a texture applied to solid walls, which displays the sky with no perspective, or something like that (I don’t know how to describe a “sky” effect).

So, I can either:

- do some calculation to project the vertices onto the camera with correct interpolation (or do that in the fragment shader)

- or do what I said: render the skybox as usual, then render only the depth of these walls and ceilings (the ones that should let you see the sky), then render the rest of the scene.

I thought that I could achieve that by just disabling color writing in the material of these walls/ceilings. However, when I do that the existing colors are also discarded, so I see the background color of the viewport. I think I did something wrong.
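For reference, this is the result I expect from the depth-only pass, again as a small software sketch on one 8-pixel scanline rather than real engine code. The class `SkyWallSketch`, its `draw` helper and the distances are hypothetical; the sky is assumed to be drawn first without writing depth, and the depth test is a plain “less than”.

```java
// Hypothetical 1D sketch: 'S' = sky, 'H' = hallway behind the sky wall, 'W' = normal wall.
class SkyWallSketch {
    static final int WIDTH = 8;

    // Draws a span [from, to) at distance z; with writeColor == false only depth changes.
    static void draw(char[] color, float[] depth, int from, int to,
                     float z, char c, boolean writeColor) {
        for (int x = from; x < to; x++) {
            if (z < depth[x]) {
                depth[x] = z;
                if (writeColor) {
                    color[x] = c;
                }
            }
        }
    }

    static String render(boolean depthOnlySkyWalls) {
        char[] color = new char[WIDTH];
        float[] depth = new float[WIDTH];
        java.util.Arrays.fill(color, 'S');                     // sky first, no depth written
        java.util.Arrays.fill(depth, Float.POSITIVE_INFINITY);
        if (depthOnlySkyWalls) {
            draw(color, depth, 2, 6, 3f, '?', false);          // sky walls: depth only
        }
        draw(color, depth, 3, 5, 6f, 'H', true);               // hallway behind the sky wall
        draw(color, depth, 0, 2, 2f, 'W', true);               // ordinary walls on both sides
        draw(color, depth, 6, 8, 2f, 'W', true);
        return new String(color);
    }

    public static void main(String[] args) {
        System.out.println(render(false)); // prints WWSHHSWW (hallway "floats" in the sky)
        System.out.println(render(true));  // prints WWSSSSWW (hallway hidden behind the sky)
    }
}
```

In the sketch the depth-only pass never touches the color buffer, so the sky stays visible where the sky walls are. That is why I suspect the background color I get comes from something else in my setup and not from the idea itself.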

So my questions are:

- is it possible to do what I described?

- am I being too generic? (Did I miss something important, like someone saying “well, we only need to detect the face in the picture, then resolve some NP-complete problem, then”? I really think the steps I described are as close as possible to “pseudo-code”, and I am only missing the actual lines of code to perform them.)

- am I going the wrong way? I think that even without this Doom thing, the portal effect I described looks cool.

Thanks for your help and comments.