Hello, and first of all, sorry for my English.

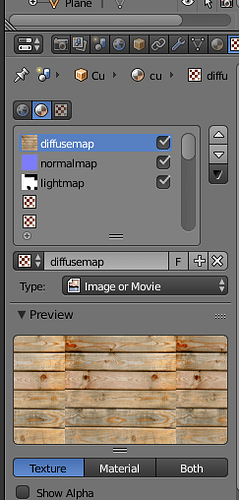

I use a lightmap and a texture for my various models.

To do this, I use two UV layers: one (UVMap) for the model's texture and another (AtlasMap) for the lightmap. This works without problems when I enable the “SeparateTexCoord” option in the jMonkeyEngine editor's material settings. I would now also like to use a normal map on some models to add relief. Unfortunately, adding a third UV layer for it does not work. Can you tell me whether this is possible, and if so, how to do it? For reference, I use Blender for modeling. Thank you in advance.

Yes. Do you want the third UV channel for the normal map only?

Actually, the normal map uses the same UVMap as the DiffuseMap. Yet when I try to use the NormalMap in addition to the DiffuseMap and LightMap, it does not work.

Are you using point lights or spot lights in your scene in addition to the lightmap?

My lightmap was baked in Blender using point lights.

A normal map reacts to real-time lights. If you have a point light, the normal map lightens or darkens parts of the surface depending on the angle of the surface (as defined by the normal map) relative to the light source's position or direction. If you do not have any real-time light sources in jME, it cannot use the normal map, since it has no way of knowing where the lights you used in Blender were.
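To make the dependency on a real-time light concrete, here is a minimal Java sketch of per-pixel Lambert diffuse lighting with a tangent-space normal map. It is a simplification, not jME's Lighting.j3md shader: there is no attenuation or specular term, and the class and method names (`NormalMapLighting`, `decodeNormal`, `tangentToWorld`, `lambert`) are hypothetical. The point is that `lambert` needs a light position; without a real-time light there is nothing to compare the perturbed normal against.

```java
/**
 * Hypothetical sketch: Lambert diffuse with a tangent-space normal map.
 * Simplified on purpose (no attenuation, no specular); not jME's shader code.
 */
public class NormalMapLighting {

    public static float[] normalize(float[] v) {
        float len = (float) Math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2]);
        return new float[] {v[0] / len, v[1] / len, v[2] / len};
    }

    public static float dot(float[] a, float[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    // Decode an RGB normal-map texel (0..1 per channel) into a tangent-space normal (-1..1).
    public static float[] decodeNormal(float r, float g, float b) {
        return normalize(new float[] {r * 2f - 1f, g * 2f - 1f, b * 2f - 1f});
    }

    // Rotate a tangent-space normal into world space with the TBN basis (tangent, bitangent, normal).
    public static float[] tangentToWorld(float[] n, float[] t, float[] b, float[] nrm) {
        return normalize(new float[] {
            n[0] * t[0] + n[1] * b[0] + n[2] * nrm[0],
            n[0] * t[1] + n[1] * b[1] + n[2] * nrm[1],
            n[0] * t[2] + n[1] * b[2] + n[2] * nrm[2]
        });
    }

    // Lambert diffuse factor max(N . L, 0), where L points from the surface towards the light.
    public static float lambert(float[] worldNormal, float[] surfacePos, float[] lightPos) {
        float[] toLight = normalize(new float[] {
            lightPos[0] - surfacePos[0],
            lightPos[1] - surfacePos[1],
            lightPos[2] - surfacePos[2]
        });
        return Math.max(dot(worldNormal, toLight), 0f);
    }

    public static void main(String[] args) {
        // Flat wall facing +Z: tangent +X, bitangent +Y, normal +Z.
        float[] t = {1, 0, 0}, b = {0, 1, 0}, n = {0, 0, 1};
        float[] pos = {0, 0, 0};
        float[] frontLight = {0, 0, 5};
        float[] sideLight = {5, 0, 0};

        // A "flat" texel (straight up in tangent space) and a texel tilted towards +X.
        float[] flat = tangentToWorld(decodeNormal(0.5f, 0.5f, 1f), t, b, n);
        float[] tilted = tangentToWorld(decodeNormal(0.85f, 0.5f, 0.85f), t, b, n);

        System.out.println("front light, flat texel:   " + lambert(flat, pos, frontLight));
        System.out.println("front light, tilted texel: " + lambert(tilted, pos, frontLight));
        System.out.println("side light, flat texel:    " + lambert(flat, pos, sideLight));
        System.out.println("side light, tilted texel:  " + lambert(tilted, pos, sideLight));
    }
}
```

With the light straight in front of the wall, the flat texel is fully lit and the tilted one is darker; with the light off to the side, the flat texel gets nothing while the tilted one catches light. That changing contrast is the relief a torch or flashlight reveals.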

In one of my projects I use lightmaps, and I gave the character a torch specifically so that I could see the normal maps take effect when I shine it on things.

Hello, I do have a dynamic light source: I attached a spotlight to the camera, pointing forward from it, to simulate a flashlight. Yet the model with the normal map and lightmap is completely black. I found a topic on the Unity forum where someone apparently had the same problem. Here is the link for more context:

https://forum.unity3d.com/threads/lightmapping-and-normal-maps.74656/

If I understand correctly, it is not possible to use a lightmap and a normal map together.

Thank you for your help

Usually the normal map uses the same texture coordinates as the diffuse map. So you should use the first set of texture coordinates for the normal map.

Yes, that is what I did (at least I think so). I use the same UV layer (UVMap) for the DiffuseMap and the NormalMap, and a separate UV layer for the LightMap. I set this up in Blender:

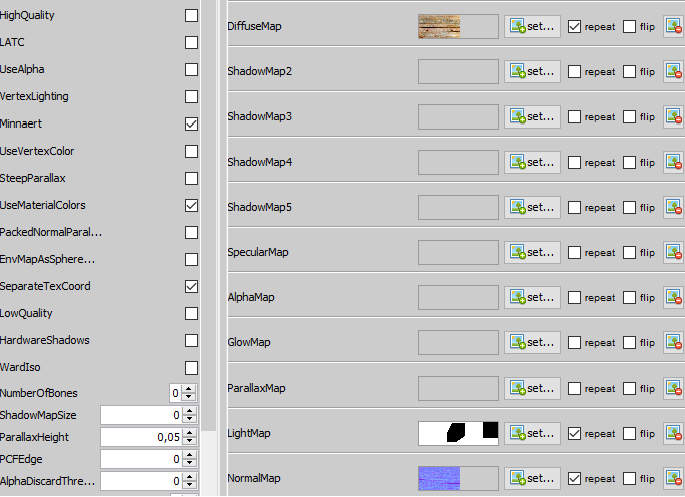

In the jMonkeyEngine editor, I use the Lighting.j3md material definition like this:

I enabled “SeparateTexCoord” so that the LightMap uses the second UV layer.

Can you tell me what I am doing wrong? Thank you.

SeparateTexCoord only affects the LightMap, so you did what you should.
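The rule stated here can be summarized in a small Java sketch. All names (`UvSetSelection`, `MapSlot`, `Vertex`, `uvFor`) are hypothetical and only model the behavior described in this thread: the DiffuseMap and NormalMap read the first texture-coordinate set, and the LightMap switches to the second set only when SeparateTexCoord is enabled. It assumes Blender's first UV layer (UVMap) and second layer (AtlasMap) arrive in jME as the first and second sets, which matches the original poster's working lightmap.

```java
/**
 * Hypothetical model (not jME API) of which UV set each map reads,
 * following the rule described in this thread.
 */
public class UvSetSelection {

    public enum MapSlot { DIFFUSE_MAP, NORMAL_MAP, LIGHT_MAP }

    // One vertex carrying two UV layers, e.g. Blender's "UVMap" and "AtlasMap".
    public static final class Vertex {
        public final float[] texCoord;   // first UV set
        public final float[] texCoord2;  // second UV set

        public Vertex(float[] texCoord, float[] texCoord2) {
            this.texCoord = texCoord;
            this.texCoord2 = texCoord2;
        }
    }

    // Only the LightMap can be redirected to the second UV set; everything else uses the first.
    public static float[] uvFor(MapSlot slot, Vertex v, boolean separateTexCoord) {
        if (slot == MapSlot.LIGHT_MAP && separateTexCoord) {
            return v.texCoord2;
        }
        return v.texCoord;
    }

    public static void main(String[] args) {
        Vertex v = new Vertex(new float[] {0.25f, 0.75f}, new float[] {0.1f, 0.2f});
        for (MapSlot slot : MapSlot.values()) {
            float[] uv = uvFor(slot, v, true);
            System.out.println(slot + " -> (" + uv[0] + ", " + uv[1] + ")");
        }
    }
}
```

In other words, enabling SeparateTexCoord cannot give the NormalMap its own UV set; it is expected to share the DiffuseMap's coordinates.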

Now I wonder, though, what is actually wrong with the result, because you never said so in your post…

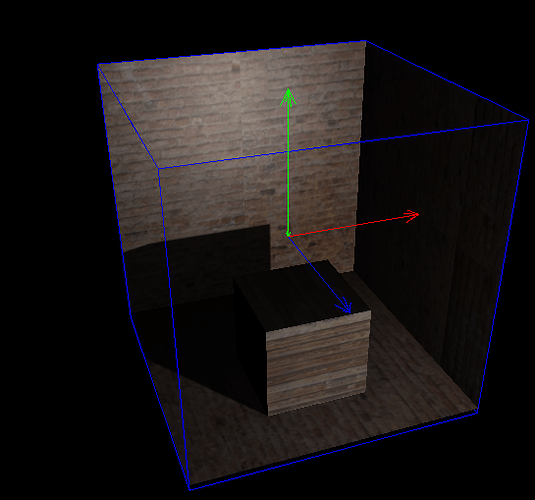

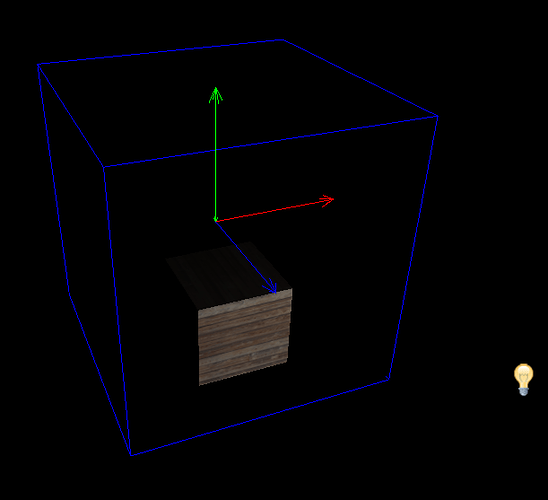

It gets dark, I made a small stage to explain. I would like to use a lightmap on two models (for the wall and the cube) and have normalmap (relief) on the wall. When I activate both in the shader, it becomes black

here without using the normalmap:

here with activation normalmap to the brick wall, it becomes black .:

Alright, you should have started with this.

You have to generate the tangents for the models (they are mandatory for normal mapping).

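```java
import com.jme3.util.TangentBinormalGenerator;
TangentBinormalGenerator.generate(model); // adds the tangent data that normal mapping requires
```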
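For intuition about what a tangent generator has to compute, here is a minimal Java sketch of the per-triangle tangent derived from vertex positions and UVs (the common UV-derivative method). This is an assumption about the general technique, not jME's actual `TangentBinormalGenerator` source: real generators also average tangents across shared vertices and orthogonalize them against the vertex normal. The class and method names are hypothetical.

```java
/**
 * Hypothetical sketch of the per-triangle tangent computation behind
 * tangent generators; not jME's implementation.
 */
public class TriangleTangent {

    // Returns the normalized object-space direction in which the U texture coordinate increases,
    // or a zero vector when the triangle's UVs or positions are degenerate.
    public static float[] tangent(float[] p0, float[] p1, float[] p2,
                                  float[] uv0, float[] uv1, float[] uv2) {
        // Triangle edges in object space.
        float e1x = p1[0] - p0[0], e1y = p1[1] - p0[1], e1z = p1[2] - p0[2];
        float e2x = p2[0] - p0[0], e2y = p2[1] - p0[1], e2z = p2[2] - p0[2];
        // The same edges in texture (UV) space.
        float du1 = uv1[0] - uv0[0], dv1 = uv1[1] - uv0[1];
        float du2 = uv2[0] - uv0[0], dv2 = uv2[1] - uv0[1];

        // Solve E1 = du1*T + dv1*B and E2 = du2*T + dv2*B for T.
        float det = du1 * dv2 - du2 * dv1;
        if (Math.abs(det) < 1e-8f) {
            return new float[] {0f, 0f, 0f};
        }
        float r = 1f / det;
        float tx = (e1x * dv2 - e2x * dv1) * r;
        float ty = (e1y * dv2 - e2y * dv1) * r;
        float tz = (e1z * dv2 - e2z * dv1) * r;

        float len = (float) Math.sqrt(tx * tx + ty * ty + tz * tz);
        if (len < 1e-8f) {
            return new float[] {0f, 0f, 0f};
        }
        return new float[] {tx / len, ty / len, tz / len};
    }

    public static void main(String[] args) {
        float[] p0 = {0, 0, 0}, p1 = {1, 0, 0}, p2 = {0, 1, 0};
        // UVs laid out so that U runs along +X.
        float[] t = tangent(p0, p1, p2, new float[] {0, 0}, new float[] {1, 0}, new float[] {0, 1});
        System.out.printf("tangent = (%.2f, %.2f, %.2f)%n", t[0], t[1], t[2]);
    }
}
```

Without tangent data the shader has no basis for rotating the normal-map texels into world space, which is consistent with the wall in this thread rendering correctly only after the tangents were generated.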

It works, thank you very much for your answers.