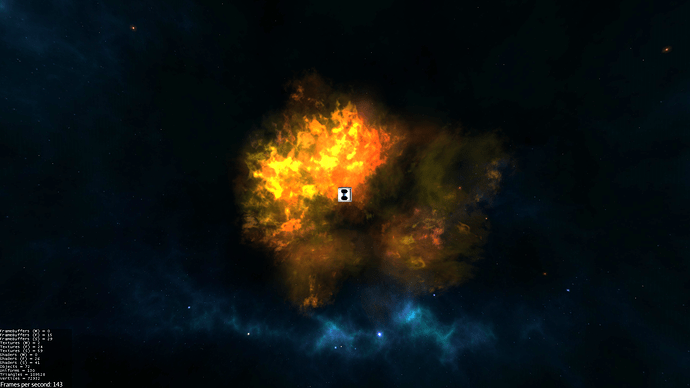

Okay so let me start with a small demonstration. Looking at this transparent nebula from afar renders it at 144 fps (capped).

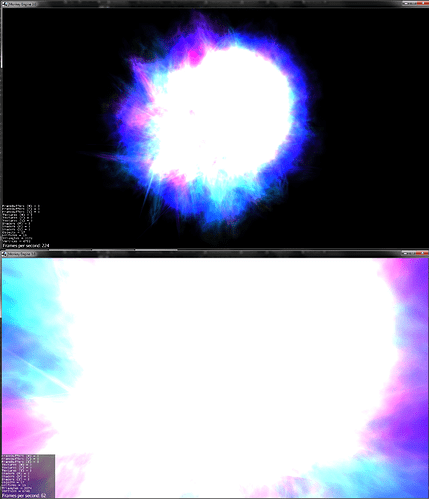

But, getting up close and personal drops the framerate by more than half.

Disregard the few new objects created by a particle emitter.

#Question

So the question is: Why does a single transparent geometry with one material and one mesh render so badly up close and so well from afar? Is there any way to make this work better? Should I just give up and wait for 2026 when GPUs can finally render this without breaking down in tears?

I know that going up close makes the frag shader run more times than usual, but it’s unshaded.frag! Why is the difference so damn large?

#FAQ

-

Alpha discarding? Helps slightly, but not much.

-

Custom shader slowdown? The additional calculations I’m making have minuscule impact on performance and switching to an unshaded.j3md yelds about the same fps.

-

Depth testing slowdown? Disabling it has no impact on framerate, just messes up the rendering order.

-

Too large textures? Nearly the same fps from 16x16 to 2048x2048.

-

You are rendering so many quads, you should use GeometryBatchFactory to batch them! I already told you that the nebula is a single batched mesh running one material. Read the question above the FAQ.

-

Are you using any filters that could slow it down? Only the bloomfilter essentially, but moving it to translucent gives little to no performance boost and the mcve still has the problem without any filters.

#MCVE

So since this is just about making me go insane in the brain I’ve made a MCVE for you guys to mess around in, no external assets required.

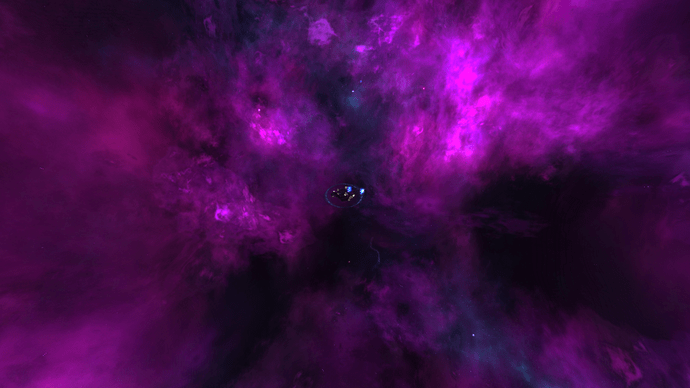

It spawns a single nebula (running unshaded.j3md with vertex colors) with WAAAY too many quads, but since there isn’t anything else to render we need to do that to show the difference.

What it does is:

-

makes the transparent material with the default jme flame.png texture as the cloud, sets same parameters as the in-game nebula materials have

-

generates the quads with randomized vertex colors and places them into a node

-

optimizes the node using GeometryBatchFactory, extracts the batched geometry and attaches it to rootnode

The class:

import com.jme3.app.SimpleApplication;

import com.jme3.material.Material;

import com.jme3.material.RenderState.BlendMode;

import com.jme3.material.RenderState.FaceCullMode;

import com.jme3.math.ColorRGBA;

import com.jme3.math.FastMath;

import com.jme3.math.Vector3f;

import com.jme3.renderer.queue.RenderQueue.Bucket;

import com.jme3.renderer.queue.RenderQueue.ShadowMode;

import com.jme3.scene.Geometry;

import com.jme3.scene.Mesh;

import com.jme3.scene.Node;

import com.jme3.scene.VertexBuffer.Type;

import com.jme3.scene.shape.Quad;

import com.jme3.util.BufferUtils;

import jme3tools.optimize.GeometryBatchFactory;

public class MCVE extends SimpleApplication{

public static void main(String[] args) {

MCVE app = new MCVE();

app.start();

}

@Override

public void simpleInitApp(){

boolean batchQuads = true;

float size = 80000;

flyCam.setMoveSpeed(size*2f+1000);

cam.setFrustumPerspective(70f, (float) cam.getWidth() / cam.getHeight(), 1f, 800000f);

cam.setLocation(Vector3f.UNIT_Z.mult(size*4f));

ColorRGBA template = new ColorRGBA(0.0f,0.0f,0.5f,1.2f);

Quad[] plates = new Quad[4];

Quad side = new Quad(size*2.5f,size*2.5f);

side.setBuffer(Type.TexCoord, 2, new float[]{0,0.5f, 0.5f,0.5f, 0.5f,0, 0,0});

plates[0]= side;

side = new Quad(size*2.5f,size*2.5f);

side.setBuffer(Type.TexCoord, 2, new float[]{0.5f,1, 1,1, 1,0.5f, 0.5f,0.5f});

plates[1]= side;

side = new Quad(size*2.5f,size*2.5f);

side.setBuffer(Type.TexCoord, 2, new float[]{0.5f,0.5f, 1,0.5f, 1,0, 0.5f,0});

plates[2]= side;

side = new Quad(size*2.5f,size*2.5f);

side.setBuffer(Type.TexCoord, 2, new float[]{0,1, 0.5f,1, 0.5f,0.5f, 0,0.5f});

plates[3]= side;

Material tex = new Material(assetManager,"assets/shaders/rings/Unshaded.j3md");

tex.setTexture("ColorMap", assetManager.loadTexture("Effects/Explosion/flame.png"));

tex.setTransparent(true);

tex.setBoolean("VertexColor", true);

tex.getAdditionalRenderState().setDepthWrite(false);

tex.getAdditionalRenderState().setDepthTest(true);

tex.getAdditionalRenderState().setBlendMode(BlendMode.AlphaAdditive);

tex.getAdditionalRenderState().setFaceCullMode(FaceCullMode.Off);

tex.setFloat("AlphaDiscardThreshold", 0.01f);

Node batch = new Node();

for (int i = 0; i < size/100; i++) {

float rand1 = (float)(Math.random()*size);

float rand2 = (float)(Math.random()*size);

float rand3 = (float)(Math.random()*size);

Mesh m = plates[FastMath.nextRandomInt(0, 3)].clone();

ColorRGBA dif = template.clone();

float [] vertices = new float[16];

for(int k = 0, j = 0; j < 4; k+=4, j++) {

ColorRGBA add = new ColorRGBA(FastMath.nextRandomFloat()-0.5f, FastMath.nextRandomFloat()-0.5f, FastMath.nextRandomFloat()-0.5f,0);

add = dif.add(add.mult(0.85f));

vertices[k] = add.r;

vertices[k+1] = add.g;

vertices[k+2] = add.b;

vertices[k+3] = add.a;

}

m.setBuffer(Type.Color, 4, BufferUtils.createFloatBuffer(vertices));

Geometry plate = new Geometry("nb",m);

plate.setMaterial(tex);

plate.setQueueBucket(Bucket.Transparent);

plate.setLocalTranslation(-size*0.5f+rand1, -size*0.5f+rand2, rand3);

plate.setShadowMode(ShadowMode.Off);

Node n = new Node();

n.attachChild(plate);

n.getLocalRotation().fromAngleAxis(i*36, randomVector3f());

batch.attachChild(n);

}

if(batchQuads)

{

GeometryBatchFactory.optimize(batch);

Geometry part = (Geometry) batch.getChild(batch.getChildren().size()-1);

part.setQueueBucket(Bucket.Transparent);

part.setShadowMode(ShadowMode.Off);

rootNode.attachChild(part);

batch.detachAllChildren();

}

else

rootNode.attachChild(batch);

}

public static Vector3f randomVector3f() {

Vector3f rand = new Vector3f(FastMath.rand.nextFloat()*2f-1f,FastMath.rand.nextFloat()*2f-1f,FastMath.rand.nextFloat()*2f-1f);

return rand.normalizeLocal();

}

}

Or on pastebin for your copying convenience:

#Instructions:

-

Run without vsync or you won’t notice the fps drop.

-

Adjust

float size = 80000;to something that has your system running the starting scene at ~200 fps. Larger is more demanding. -

Use the flycam to go inside the nebula and observe the sudden fps drop or back out to see it restored.

Just for interest:

Further on, try disabling the batching of these thousands of quads using

boolean batchQuads = false;

Note how the framerate stays pretty much the same. Why are we batching all of this stuff again?

Thanks!