I have transparent layers like the ones in this image:

I put each texture on its own quad and stacked the quads in the 3D scene, so some clouds appear behind the mountains.

To make the clouds scroll from right to left seamlessly (so the user sees the cloud texture as continuous), I placed two adjacent quads with the same texture and move them in the update loop. The problem: once a quad's center moves outside the camera view, that cloud layer is blended with the viewport background color, even though there are layers behind it. The viewport color should never show, because an opaque background layer sits behind all the other layers. This is the code:

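```java
import com.jme3.material.Material;
import com.jme3.material.RenderState;
import com.jme3.renderer.queue.RenderQueue;
import com.jme3.scene.Geometry;
import com.jme3.scene.Spatial.CullHint;
import com.jme3.scene.shape.Quad;

// Unshaded material with alpha blending, so transparent texels show what is behind the quad
Material material = new Material(assetManager, "Common/MatDefs/Misc/Unshaded.j3md");
material.getAdditionalRenderState().setBlendMode(RenderState.BlendMode.Alpha);
// Render both faces of the quad
material.getAdditionalRenderState().setFaceCullMode(RenderState.FaceCullMode.Off);

// One layer: a 1x1 quad in the transparent bucket that is never frustum-culled
Geometry quad = new Geometry("Layer", new Quad(1, 1));
quad.setMaterial(material);
quad.setQueueBucket(RenderQueue.Bucket.Transparent);
quad.setCullHint(CullHint.Never);
```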
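The two-tile scrolling described in the question could be sketched roughly as follows. This is a hypothetical helper (`CloudScroller`, `firstTileX` and `secondTileX` are illustrative names, not part of the original code or of jME) that only computes positions in plain Java. It assumes each cloud quad is `tileWidth` world units wide and, like jME's `Quad`, has its origin at its lower-left corner, so each returned value is a quad's left edge.

```java
/**
 * Hypothetical helper (not from the original post): two copies of the same
 * cloud texture sit side by side and scroll right-to-left, wrapping around
 * so the strip looks continuous.
 */
final class CloudScroller {
    private final float tileWidth; // world-space width of one cloud quad (assumed)
    private final float speed;     // world units per second; positive moves tiles left
    private float offset;          // scrolled distance, kept in [0, tileWidth)

    CloudScroller(float tileWidth, float speed) {
        if (tileWidth <= 0f) {
            throw new IllegalArgumentException("tileWidth must be positive");
        }
        this.tileWidth = tileWidth;
        this.speed = speed;
    }

    /** Call once per frame, e.g. from simpleUpdate(tpf). */
    void update(float tpf) {
        offset = (offset + speed * tpf) % tileWidth;
        if (offset < 0f) {
            offset += tileWidth;          // Java % keeps the dividend's sign
        }
        if (offset >= tileWidth) {
            offset = 0f;                  // guard against float rounding up to tileWidth
        }
    }

    /** Left edge of the first tile. */
    float firstTileX() {
        return -offset;
    }

    /** Left edge of the second tile, always directly right of the first. */
    float secondTileX() {
        return -offset + tileWidth;
    }
}
```

In a `SimpleApplication`, one would call `scroller.update(tpf)` from `simpleUpdate` and then move each quad, for example `layerA.setLocalTranslation(scroller.firstTileX(), y, z)`, where `layerA`, `y` and `z` are placeholders. Because the offset stays in `[0, tileWidth)`, the two tiles always cover at least the span from `x = 0` to `x = tileWidth`, and the jump at wrap time lands on an identical copy of the texture.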

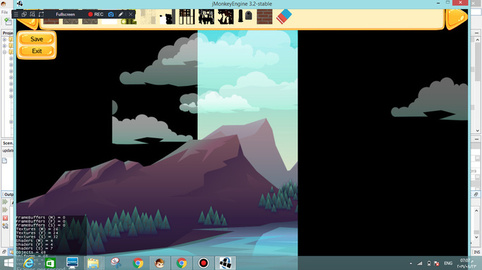

The rendered result:

The image above is hard to read because there are four cloud layers.

The expected result:

This is how the scene renders at first, before the clouds move and before the quad center leaves the camera view.