I have been creating a library for VR applications (Tamarin), and I wanted to (a) let people know about it and (b) get some early feedback on it. I intend to keep putting “enabling code” into JMonkey itself and to keep more utility-style code within Tamarin.

Library Features:

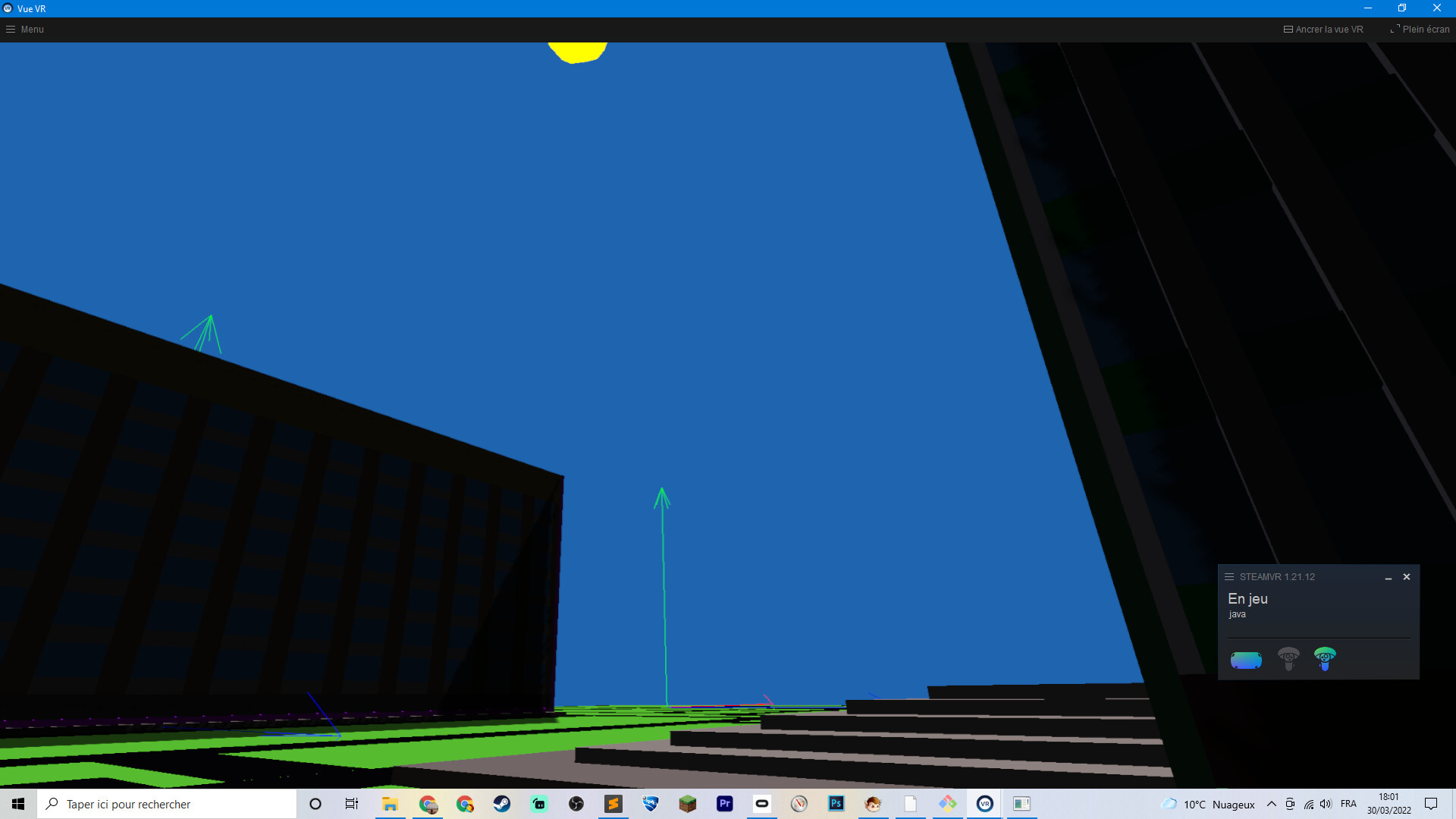

Backporting action-based OpenVR into JMonkey 3.5

Recently I added partial support for action-based OpenVR (aka semi-modern VR) to JMonkey, but that will not be available until 3.5.1 or 3.6. Tamarin also contains that functionality (as well as a lot of other OpenVR functionality that I intend to put into the JMonkey engine as soon as I get around to it).

Action-based VR allows far better cross-system compatibility. Actions are abstract versions of button presses that are bound to specific controller buttons (and can be rebound by the user if necessary).
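
To make the action idea concrete, here is a minimal Java sketch. It assumes nothing about the real OpenVR or Tamarin APIs: `ActionBindings` and its methods are hypothetical, and the path strings only imitate the style of OpenVR action and input paths.

```java
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical sketch of the action-based idea (not the OpenVR or Tamarin API).
 * Game code only asks about abstract actions; a separate, user-editable table
 * decides which physical controller input drives each action.
 */
class ActionBindings {
    // action path -> physical input path, e.g. "/user/hand/left/input/grip"
    private final Map<String, String> bindings = new HashMap<>();
    // physical input path -> currently pressed?
    private final Map<String, Boolean> rawInput = new HashMap<>();

    /** Bind (or rebind) an action to a physical input; rebinding overwrites. */
    void bind(String action, String inputPath) {
        bindings.put(action, inputPath);
    }

    /** Called by the (hypothetical) input layer when hardware state changes. */
    void setRawInput(String inputPath, boolean pressed) {
        rawInput.put(inputPath, pressed);
    }

    /** The only question game code asks: is this action active right now? */
    boolean isActionPressed(String action) {
        String input = bindings.get(action);
        return input != null && rawInput.getOrDefault(input, false);
    }
}
```

Because game code only ever asks about the action, moving "grab" from the grip button to the trigger is a change to the binding table, not to the game.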

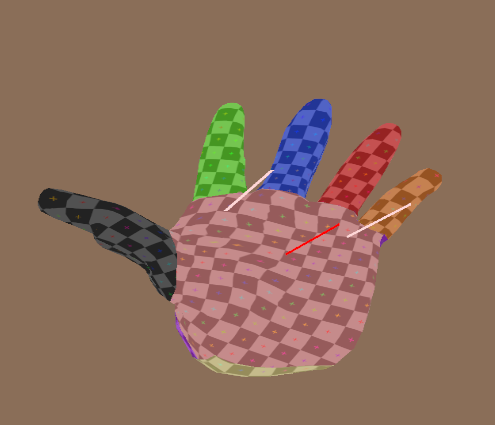

Support for binding a hand model to the VR controllers

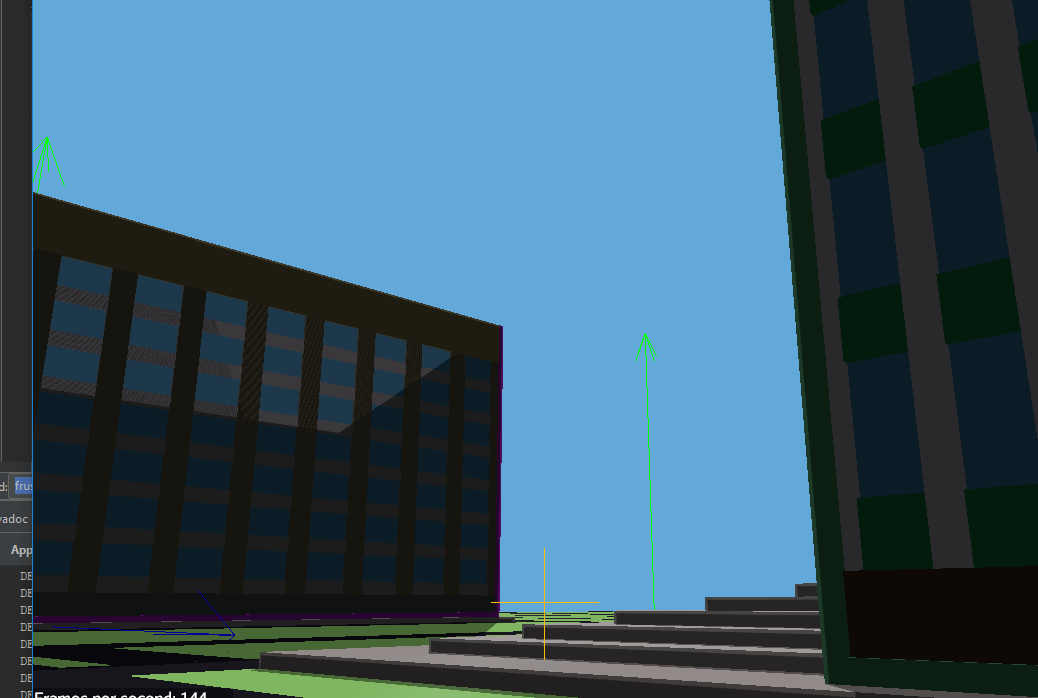

The raw calls to OpenVR for getting back pose positions, bone positions, etc. are all in JMonkey itself (or in the backported code), but the VRHandsAppState provides a more persistent way to bind a hand model (with an appropriate armature) so that it tracks the user's hand positions (and finger positions) on an ongoing basis. The bound hands also contain a number of other useful features:

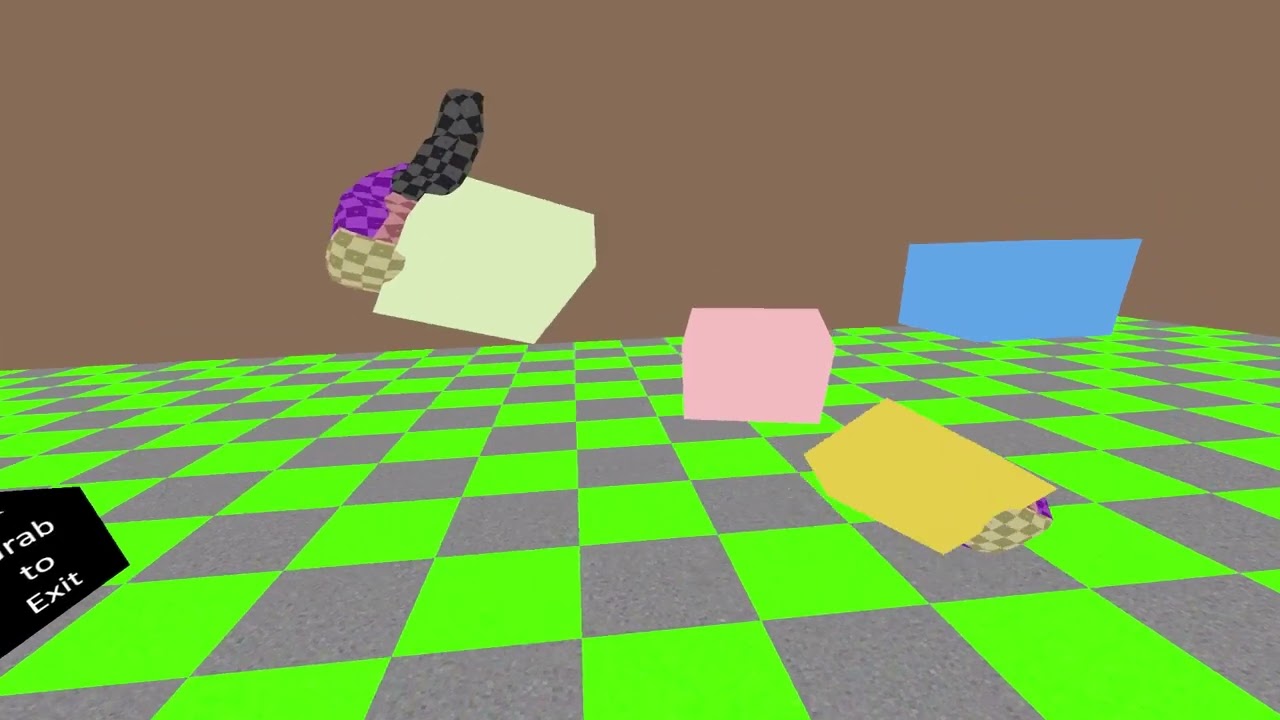

Grab support

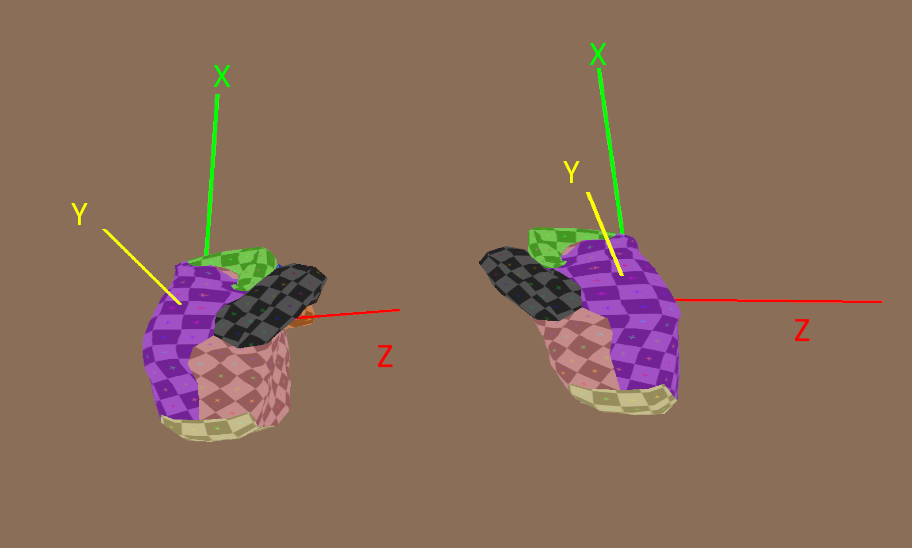

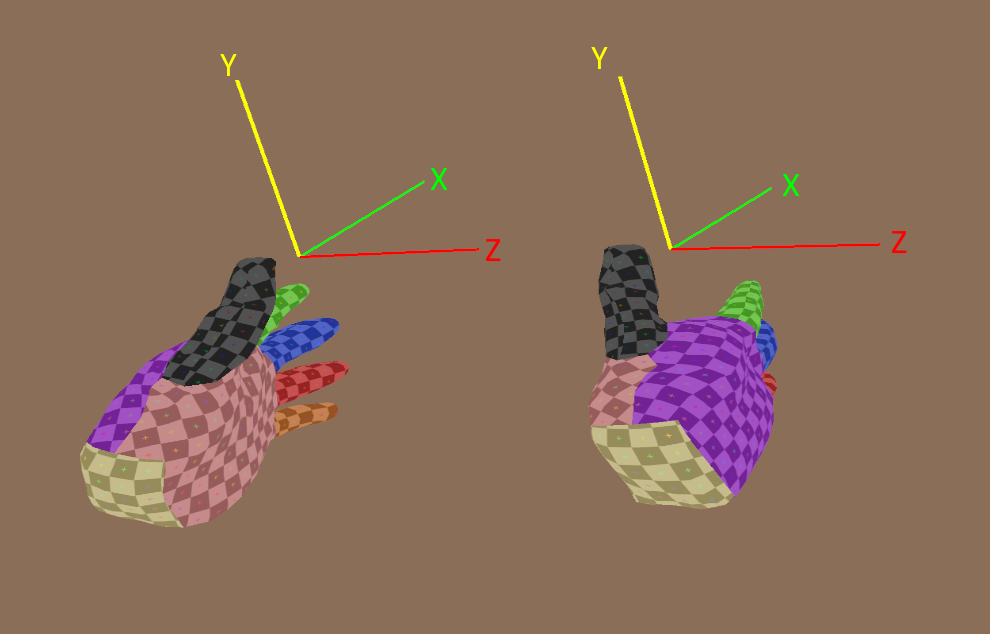

The bound hands can have a grab action bound to them. When that grab action is triggered, geometry picking is used to detect geometries near the hands (the red and pink lines above show the areas scanned by default). Any spatial that is detected is checked for an AbstractGrabControl (the geometry's parents are checked too). If one is found, it is informed of the grab event. AutoMovingGrabControl is a concrete implementation of a grab control that lets a geometry be moved around while it is grabbed and then stay where it is when released.
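
The "check the picked spatial, then its parents" rule can be sketched as below. This is a minimal model under stated assumptions: `SceneNode` stands in for a jME `Spatial`, `GrabControl` for `AbstractGrabControl`, and `GrabSearch.findGrabControl` is a hypothetical helper, not Tamarin's actual code.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for AbstractGrabControl. */
abstract class GrabControl {
    abstract void onGrab(String handName);
    abstract void onRelease(String handName);
}

/** Hypothetical minimal stand-in for a jME Spatial (only a parent and controls). */
class SceneNode {
    final String name;
    SceneNode parent;
    final List<Object> controls = new ArrayList<>();

    SceneNode(String name) {
        this.name = name;
    }

    /** Attach a child and return it, so chains of nodes are easy to build. */
    SceneNode attachChild(SceneNode child) {
        child.parent = this;
        return child;
    }

    /** First control of the requested type on this node only, or null. */
    <T> T getControl(Class<T> type) {
        for (Object c : controls) {
            if (type.isInstance(c)) {
                return type.cast(c);
            }
        }
        return null;
    }
}

final class GrabSearch {
    /** Walk from the picked geometry up through its parents; the first grab control found wins. */
    static GrabControl findGrabControl(SceneNode picked) {
        for (SceneNode s = picked; s != null; s = s.parent) {
            GrabControl control = s.getControl(GrabControl.class);
            if (control != null) {
                return control;
            }
        }
        return null; // nothing grabbable here
    }
}
```

Checking parents matters because the geometry that picking hits is often a child mesh of the node that actually carries the control.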

Picking support

Raw picking along a ray from the palm, or along a ray from just in front of the thumb (where a gun would fire from), is supported and returns CollisionResults.
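
A ray pick needs an origin (the palm, or the point just in front of the thumb) and a direction, and returns everything hit ordered by distance. Below is a minimal, self-contained Java sketch of that idea. `PalmPicker` and its records are hypothetical, and the targets are simplified to bounding spheres, whereas the real picking works against jME geometry and returns `CollisionResults`.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/**
 * Hypothetical sketch of ray picking from a hand. Targets are approximated by
 * bounding spheres; real jME picking tests meshes and returns CollisionResults.
 */
final class PalmPicker {
    record Sphere(String name, double[] centre, double radius) {}
    record Hit(String name, double distance) {}

    /** Every sphere the ray passes through in front of the origin, nearest first. */
    static List<Hit> pick(double[] origin, double[] direction, List<Sphere> targets) {
        double[] d = normalise(direction);
        List<Hit> hits = new ArrayList<>();
        for (Sphere s : targets) {
            // Vector from the ray origin to the sphere centre
            double[] oc = {
                s.centre()[0] - origin[0], s.centre()[1] - origin[1], s.centre()[2] - origin[2]
            };
            double along = dot(oc, d);                   // distance along the ray to closest approach
            double perpSq = dot(oc, oc) - along * along; // squared distance from centre to the ray
            double rSq = s.radius() * s.radius();
            if (perpSq > rSq) continue;                  // ray passes beside the sphere
            double half = Math.sqrt(rSq - perpSq);
            if (along + half < 0) continue;              // sphere is entirely behind the hand
            hits.add(new Hit(s.name(), Math.max(along - half, 0))); // 0 if the hand is inside it
        }
        hits.sort(Comparator.comparingDouble(Hit::distance));
        return hits;
    }

    private static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    private static double[] normalise(double[] v) {
        double len = Math.sqrt(dot(v, v));
        return new double[] {v[0] / len, v[1] / len, v[2] / len};
    }
}
```

With the origin placed just in front of the thumb, the first hit in the list is what a gun held in that hand would hit.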

Nodes to hang things off

The bound hand has two key nodes supporting two coordinate systems. The first is the palm coordinate system, which is good for attaching held items to if you are not using the AbstractGrabControl. Its zero point is the middle of the metacarpal bone of the middle finger.

The other is the xPointing coordinate system, which has its zero where OpenVR puts its zero: just in front of the thumb. (OpenVR's default rotation seemed a bit mad, with z pointing up at a 45 degree angle. That system is also available, but it seems like a huge pain to work with.)

(Note that because the hands distort, the x, y and z axes of these two systems will not align perfectly, although they are similar.)

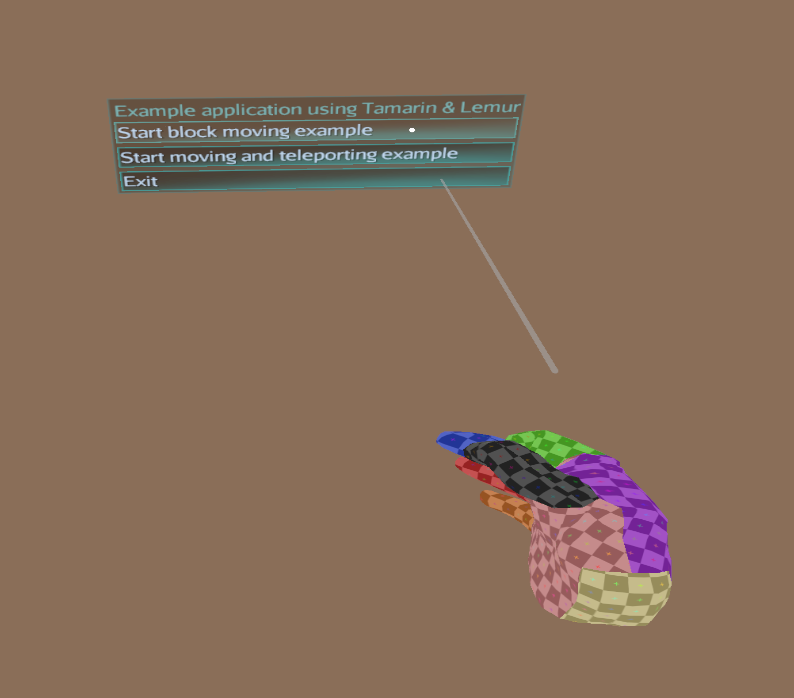

Lemur support

Lemur is optional: a project that doesn’t use Lemur will work fine with Tamarin, but if Lemur is on the classpath, additional functionality is available for interacting with 3D UIs.

Lemur support allows a tracking cursor to be projected onto whatever is currently being pointed at (effectively a mouse pointer in 3D). If a “click” is called on the bound hands, and either a MouseListener is attached to the Lemur UI object or the object is a button, then that click is passed to Lemur (it is a limited click, with no X,Y coordinates). Also shown is a picking line attached to the xPointing coordinate system.
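
The click-forwarding rule (forward to an attached listener, or click it if it is a button) can be sketched in plain Java. Every type here is a hypothetical stand-in: `ClickListener`, `UiElement`, `UiButton` and `VrClickDispatcher` are not Lemur's real `MouseListener` or `Button` APIs, just enough structure to show the decision.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a listener; NOT Lemur's MouseListener interface. */
interface ClickListener {
    void clicked(); // no x,y: a VR "click" has no screen coordinates
}

/** Hypothetical stand-in for any Lemur UI element that listeners can be attached to. */
class UiElement {
    final List<ClickListener> listeners = new ArrayList<>();
}

/** Hypothetical stand-in for a Lemur Button. */
class UiButton extends UiElement {
    final List<Runnable> clickCommands = new ArrayList<>();

    void click() {
        clickCommands.forEach(Runnable::run);
    }
}

final class VrClickDispatcher {
    /**
     * Forward a hand "click" to whatever the hand is pointing at.
     * Returns true if the element had a listener or was a button.
     */
    static boolean click(UiElement pointedAt) {
        if (pointedAt == null) {
            return false; // pointing at nothing
        }
        boolean handled = false;
        if (!pointedAt.listeners.isEmpty()) {
            pointedAt.listeners.forEach(ClickListener::clicked);
            handled = true;
        }
        if (pointedAt instanceof UiButton) {
            ((UiButton) pointedAt).click();
            handled = true;
        }
        return handled;
    }
}
```

Elements with neither a listener nor button behaviour simply ignore the click, which matches the limited, coordinate-free nature of a VR click.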

Test bed

An example application using all the above features is at oneMillionWorlds/TamarinTestBed on GitHub (an example project that uses Tamarin to produce a simple VR game).

Basic hand models

Tamarin also includes some hand models (and reference textures for them). They are “fine, not great”, but good enough to get started with. I have also included their Blender files in the git project in case anyone wants to use them as a starting point for something better. Getting the bones right was a huge pain, so I hope this will save people time.

License

I went back and forth between an MIT license and a BSD-3 license. It is currently BSD-3, to match JMonkey, but I have no strong feelings about it other than wanting minimal requirements on end users.

Controversial decisions

Compile only dependencies

Both JMonkey and Lemur are compileOnly dependencies of Tamarin. I did this because I consider Tamarin to be extending both of them, not “using” them, and I don’t want to pin people to a particular version (or require them to add excludes to their Gradle files).

VRHandsAppState, not controls

I consider the player's hands to be global things, so the hands are controlled by an AppState rather than by controls on the hand geometries. I like this because it means they are easily available anywhere, rather than references to them having to be passed around, but I can see the argument the other way.
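
The practical upside of the AppState choice is that any code holding the state manager can look the hands up by class. The sketch below is loosely modelled on jME's `AppStateManager.getState(Class)` lookup; `StateRegistry`, `HandsState` and `DoorLogic` are hypothetical stand-ins, not the real jME or Tamarin classes.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical minimal model of looking up an app state by class. */
class StateRegistry {
    private final List<Object> states = new ArrayList<>();

    void attach(Object state) {
        states.add(state);
    }

    /** First attached state of the requested type, or null if none is attached. */
    <T> T getState(Class<T> type) {
        for (Object s : states) {
            if (type.isInstance(s)) {
                return type.cast(s);
            }
        }
        return null;
    }
}

/** Hypothetical stand-in for VRHandsAppState: one global owner of both hands. */
class HandsState {
    final List<String> boundHands = new ArrayList<>();
}

/** Hypothetical game code that needs the hands but was never given a reference to them. */
final class DoorLogic {
    static int handsAvailable(StateRegistry states) {
        HandsState hands = states.getState(HandsState.class);
        return hands == null ? 0 : hands.boundHands.size();
    }
}
```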

Current availability

Currently this is only available to build from source, from oneMillionWorlds/Tamarin on GitHub (a VR utilities library for JMonkeyEngine).

What’s next

Maven Central

I’m in the process of getting ownership of the groupId com.onemillionworlds on Maven Central. Once I do, I’ll publish the library there.

[Edit: now released to Maven Central]

JMonkey store & wiki

Similarly, once I’ve got this on Maven Central, I’ll add it to the JMonkey store and add documentation to the user contributions section of the wiki.

Add to JMonkey core

While building the real test application, I realised I needed a lot more of the OpenVR functionality than I thought. I’ll put that core code into jme-vr so it’ll be available for 3.6, and then I can remove the backported code from Tamarin.

OpenXR

Obviously I’m aware that OpenXR will replace OpenVR at some point. When it does, I’ll need to do some further work to update all of this, probably creating a version 2. I can imagine a similar backporting exercise into Tamarin happening then, but if I don’t write that code myself, I’ll obviously ask first.


I thought exactly the same that it would be useful. If a spatial (and I mean spatial, not just geometries) have an